Posted by Ian Beer, Google Project Zero

This blog post is my analysis of a vulnerability found by @xerub. Phrack published @xerub's writeup so go check that out first.

As well as doing my own vulnerability research I also spend time trying as best as I can to keep up with the public state-of-the-art, especially when details of a particularly interesting vulnerability are announced or a new in-the-wild exploit is caught. Originally this post was just a series of notes I took last year as I was trying to understand this bug. But the bug itself and the narrative around it are so fascinating that I thought it would be worth writing up these notes into a more coherent form to share with the community.

Background

On April 14th 2021 the Washington Post published an article on the unlocking of the San Bernardino iPhone by Azimuth containing a nugget of non-public information:

"Azimuth specialized in finding significant vulnerabilities. Dowd [...] had found one in open-source code from Mozilla that Apple used to permit accessories to be plugged into an iPhone’s lightning port, according to the person."

There's not that much Mozilla code running on an iPhone and even less which is likely to be part of such an attack surface. Therefore, if accurate, this quote almost certainly meant that Azimuth had exploited a vulnerability in the ASN.1 parser used by Security.framework, which is a fork of Mozilla's NSS ASN.1 parser.

I searched around in bugzilla (Mozilla's issue tracker) looking for candidate vulnerabilities which matched the timeline discussed in the Post article and narrowed it down to a handful of plausible bugs including: 1202868, 1192028, 1245528.

I was surprised that there had been so many exploitable-looking issues in the ASN.1 code and decided to add auditing the NSS ASN.1 parser as an quarterly goal.

A month later, having predictably done absolutely nothing more towards that goal, I saw this tweet from @xerub:

@xerub: CVE-2021-30737 is pretty bad. Please update ASAP. (Shameless excerpt from the full chain source code) 4:00 PM - May 25, 2021

The shameless excerpt reads:

// This is the real deal. Take no chances, take no prisoners! I AM THE STATE MACHINE!

And CVE-2021-30737, fixed in iOS 14.6 was described in the iOS release notes as:

Impact: Processing a maliciously crafted certification may lead to arbitrary code execution

Description: A memory corruption issue in the ASN.1 decoder was addressed by removing the vulnerable code.

Feeling slightly annoyed that I hadn't acted on my instincts as there was clearly something awesome lurking there I made a mental note to diff the source code once Apple released it which they finally did a few weeks later on opensource.apple.com in the Security package.

Here's the diff between the MacOS 11.4 and 11.3 versions of secasn1d.c which contains the ASN.1 parser:

|

diff --git a/OSX/libsecurity_asn1/lib/secasn1d.c b/OSX/libsecurity_asn1/lib/secasn1d.c index f338527..5b4915a 100644 --- a/OSX/libsecurity_asn1/lib/secasn1d.c +++ b/OSX/libsecurity_asn1/lib/secasn1d.c @@ -434,9 +434,6 @@ loser: PORT_ArenaRelease(cx->our_pool, state->our_mark); state->our_mark = NULL; } - if (new_state != NULL) { - PORT_Free(new_state); - } return NULL; }

@@ -1794,19 +1791,13 @@ sec_asn1d_parse_bit_string (sec_asn1d_state *state, /*PORT_Assert (state->pending > 0); */ PORT_Assert (state->place == beforeBitString);

- if ((state->pending == 0) || (state->contents_length == 1)) { + if (state->pending == 0) { if (state->dest != NULL) { SecAsn1Item *item = (SecAsn1Item *)(state->dest); item->Data = NULL; item->Length = 0; state->place = beforeEndOfContents; - } - if(state->contents_length == 1) { - /* skip over (unused) remainder byte */ - return 1; - } - else { - return 0; + return 0; } } |

The first change (removing the PORT_Free) is immaterial for Apple's use case as it's fixing a double free which doesn't impact Apple's build. It's only relevant when "allocator marks" are enabled and this feature is disabled.

The vulnerability must therefore be in sec_asn1d_parse_bit_string. We know from xerub's tweet that something goes wrong with a state machine, but to figure it out we need to cover some ASN.1 basics and then start looking at how the NSS ASN.1 state machine works.

ASN.1 encoding

ASN.1 is a Type-Length-Value serialization format, but with the neat quirk that it can also handle the case when you don't know the length of the value, but want to serialize it anyway! That quirk is only possible when ASN.1 is encoded according to Basic Encoding Rules (BER.) There is a stricter encoding called DER (Distinguished Encoding Rules) which enforces that a particular value only has a single correct encoding and disallows the cases where you can serialize values without knowing their eventual lengths.

This page is a nice beginner's guide to ASN.1. I'd really recommend skimming that to get a good overview of ASN.1.

There are a lot of built-in types in ASN.1. I'm only going to describe the minimum required to understand this vulnerability (mostly because I don't know any more than that!) So let's just start from the very first byte of a serialized ASN.1 object and figure out how to decode it:

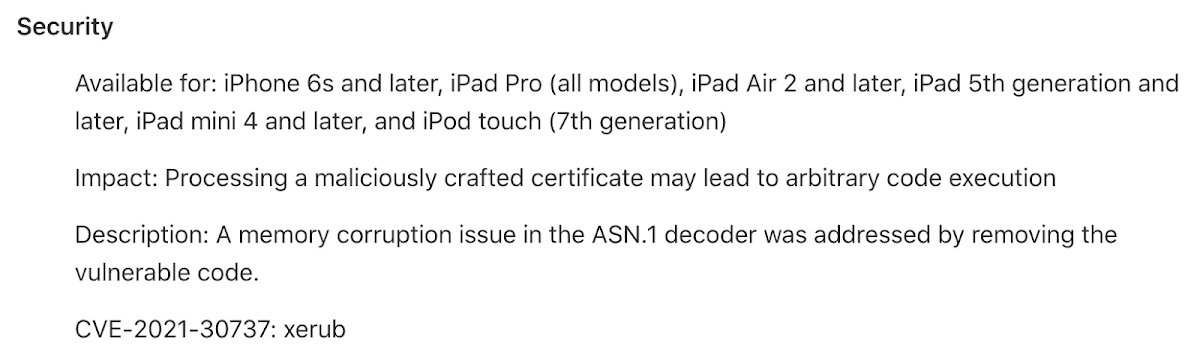

This first byte tells you the type, with the least significant 5 bits defining the type identifier. The special type identifier value of 0x1f tells you that the type identifier doesn't fit in those 5 bits and is instead encoded in a different way (which we'll ignore):

Diagram showing first two bytes of a serialized ASN.1 object. The first byte in this case is the type and class identifier and the second is the length.

The upper two bits of the first byte tell you the class of the type: universal, application, content-specific or private. For us, we'll leave that as 0 (universal.)

Bit 6 is where the fun starts. A value of 1 tells us that this is a primitive encoding which means that following the length are content bytes which can be directly interpreted as the intended type. For example, a primitive encoding of the string "HELLO" as an ASN.1 printable string would have a length byte of 5 followed by the ASCII characters "HELLO". All fairly straightforward.

A value of 0 for bit 6 however tells us that this is a constructed encoding. This means that the bytes following the length are not the "raw" content bytes for the type but are instead ASN.1 encodings of one or more "chunks" which need to be individually parsed and concatenated to form the final output value. And to make things extra complicated it's also possible to specify a length value of 0 which means that you don't even know how long the reconstructed output will be or how much of the subsequent input will be required to completely build the output.

This final case (of a constructed type with indefinite length) is known as indefinite form. The end of the input which makes up a single indefinite value is signaled by a serialized type with the identifier, constructed, class and length values all equal to 0 , which is encoded as two NULL bytes.

ASN.1 bitstrings

Most of the ASN.1 string types require no special treatment; they're just buffers of raw bytes. Some of them have length restrictions. For example: a BMP string must have an even length and a UNIVERSAL string must be a multiple of 4 bytes in length, but that's about it.

ASN.1 bitstrings are strings of bits as opposed to bytes. You could for example have a bitstring with a length of a single bit (so either a 0 or 1) or a bitstring with a length of 127 bits (so 15 full bytes plus an extra 7 bits.)

Encoded ASN.1 bitstrings have an extra metadata byte after the length but before the contents, which encodes the number of unused bits in the final byte.

Diagram showing the complete encoding of a 3-bit bitstring. The length of 2 includes the unused-bits count byte which has a value of 5, indicating that only the 3 most-significant bits of the final byte are valid.

Parsing ASN.1

ASN.1 data always needs to be decoded in tandem with a template that tells the parser what data to expect and also provides output pointers to be filled in with the parsed output data. Here's the template my test program uses to exercise the bitstring code:

|

const SecAsn1Template simple_bitstring_template[] = { { SEC_ASN1_BIT_STRING | SEC_ASN1_MAY_STREAM, // kind: bit string, // may be constructed 0, // offset: in dest/src NULL, // sub: subtemplate for indirection sizeof(SecAsn1Item) // size: of output structure } }; |

A SecASN1Item is a very simple wrapper around a buffer. We can provide a SecAsn1Item for the parser to use to return the parsed bitstring then call the parser:

|

SecAsn1Item decoded = {0};

PLArenaPool* pool = PORT_NewArena(1024);

SECStatus status = SEC_ASN1Decode(pool, // pool: arena for destination allocations &decoded, // dest: decoded encoded items in to here &simple_bitstring_template, // template asn1_bytes, // buf: asn1 input bytes asn1_bytes_len); // len: input size |

NSS ASN.1 state machine

The state machine has two core data structures:

SEC_ASN1DecoderContext - the overall parsing context

sec_asn1d_state - a single parser state, kept in a doubly-linked list forming a stack of nested states

Here's a trimmed version of the state object showing the relevant fields:

|

typedef struct sec_asn1d_state_struct { SEC_ASN1DecoderContext *top; const SecAsn1Template *theTemplate; void *dest;

struct sec_asn1d_state_struct *parent; struct sec_asn1d_state_struct *child;

sec_asn1d_parse_place place;

unsigned long contents_length; unsigned long pending; unsigned long consumed;

int depth; } sec_asn1d_state; |

The main engine of the parsing state machine is the method SEC_ASN1DecoderUpdate which takes a context object, raw input buffer and length:

|

SECStatus SEC_ASN1DecoderUpdate (SEC_ASN1DecoderContext *cx, const char *buf, size_t len) |

The current state is stored in the context object's current field, and that current state's place field determines the current state which the parser is in. Those states are defined here:

|

typedef enum { beforeIdentifier, duringIdentifier, afterIdentifier, beforeLength, duringLength, afterLength, beforeBitString, duringBitString, duringConstructedString, duringGroup, duringLeaf, duringSaveEncoding, duringSequence, afterConstructedString, afterGroup, afterExplicit, afterImplicit, afterInline, afterPointer, afterSaveEncoding, beforeEndOfContents, duringEndOfContents, afterEndOfContents, beforeChoice, duringChoice, afterChoice, notInUse } sec_asn1d_parse_place; |

The state machine loop switches on the place field to determine which method to call:

|

switch (state->place) { case beforeIdentifier: consumed = sec_asn1d_parse_identifier (state, buf, len); what = SEC_ASN1_Identifier; break; case duringIdentifier: consumed = sec_asn1d_parse_more_identifier (state, buf, len); what = SEC_ASN1_Identifier; break; case afterIdentifier: sec_asn1d_confirm_identifier (state); break; ... |

Each state method which could consume input is passed a pointer (buf) to the next unconsumed byte in the raw input buffer and a count of the remaining unconsumed bytes (len).

It's then up to each of those methods to return how much of the input they consumed, and signal any errors by updating the context object's status field.

The parser can be recursive: a state can set its ->place field to a state which expects to handle a parsed child state and then allocate a new child state. For example when parsing an ASN.1 sequence:

|

state->place = duringSequence; state = sec_asn1d_push_state (state->top, state->theTemplate + 1, state->dest, PR_TRUE); |

The current state sets its own next state to duringSequence then calls sec_asn1d_push_state which allocates a new state object, with a new template and a copy of the parent's dest field.

sec_asn1d_push_state updates the context's current field such that the next loop around SEC_ASN1DecoderUpdate will see this child state as the current state:

|

cx->current = new_state; |

Note that the initial value of the place field (which determines the current state) of the newly allocated child is determined by the template. The final state in the state machine path followed by that child will then be responsible for popping itself off the state stack such that the duringSequence state can be reached by its parent to consume the results of the child.

Buffer management

The buffer management is where the NSS ASN.1 parser starts to get really mind bending. If you read through the code you will notice an extreme lack of bounds checks when the output buffers are being filled in - there basically are none. For example, sec_asn1d_parse_leaf which copies the raw encoded string bytes for example simply memcpy's into the output buffer with no bounds checks that the length of the string matches the size of the buffer.

Rather than using explicit bounds checks to ensure lengths are valid, the memory safety is instead supposed to be achieved by relying on the fact that decoding valid ASN.1 can never produce output which is larger than its input.

That is, there are no forms of decompression or input expansion so any parsed output data must be equal to or shorter in length than the input which encoded it. NSS leverages this and over-allocates all output buffers to simply be as large as their inputs.

For primitive strings this is quite simple: the length and input are provided so there's nothing really to go that wrong. But for constructed strings this gets a little fiddly...

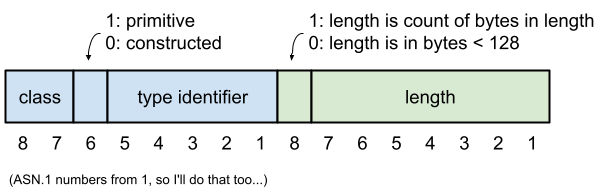

One way to think of constructed strings is as trees of substrings, nested up to 32-levels deep. Here's an example:

An outer constructed definite length string with three children: a primitive string "abc", a constructed indefinite length string and a primitive string "ghi". The constructed indefinite string has two children, a primitive string "def" and an end-of-contents marker.

We start with a constructed definite length string. The string's length value L is the complete size of the remaining input which makes up this string; that number of input bytes should be parsed as substrings and concatenated to form the parsed output.

At this point the NSS ASN.1 string parser allocates the output buffer for the parsed output string using the length L of that first input string. This buffer is an over-allocated worst case. The part which makes it really fun though is that NSS allocates the output buffer then promptly throws away that length! This might not be so obvious from quickly glancing through the code though. The buffer which is allocated is stored as the Data field of a buffer wrapper type:

|

typedef struct cssm_data { size_t Length; uint8_t * __nullable Data; } SecAsn1Item, SecAsn1Oid; |

(Recall that we passed in a pointer to a SecAsn1Item in the template; it's the Data field of that which gets filled in with the allocated string buffer pointer here. This type is very slightly different between NSS and Apple's fork, but the difference doesn't matter here.)

That Length field is not the size of the allocated Data buffer. It's a (type-specific) count which determines how many bits or bytes of the buffer pointed to by Data are valid. I say type-specific because for bit-strings Length is stored in units of bits but for other strings it's in units of bytes. (CVE-2016-1950 was a bug in NSS where the code mixed up those units.)

Rather than storing the allocated buffer size along with the buffer pointer, each time a substring/child string is encountered the parser walks back up the stack of currently-being-parsed states to find the inner-most definite length string. As it's walking up the states it examines each state to determine how much of its input it has consumed in order to be able to determine whether it's the case that the current to-be-parsed substring is indeed completely enclosed within the inner-most enclosing definite length string.

If that sounds complicated, it is! The logic which does this is here, and it took me a good few days to pull it apart enough to figure out what this was doing:

|

sec_asn1d_state *parent = sec_asn1d_get_enclosing_construct(state); while (parent && parent->indefinite) { parent = sec_asn1d_get_enclosing_construct(parent); }

unsigned long remaining = parent->pending; parent = state; do { if (!sec_asn1d_check_and_subtract_length(&remaining, parent->consumed, state->top) || /* If parent->indefinite is true, parent->contents_length is * zero and this is a no-op. */ !sec_asn1d_check_and_subtract_length(&remaining, parent->contents_length, state->top) || /* If parent->indefinite is true, then ensure there is enough * space for an EOC tag of 2 bytes. */ ( parent->indefinite && !sec_asn1d_check_and_subtract_length(&remaining, 2, state->top) ) ) { /* This element is larger than its enclosing element, which is * invalid. */ return; } } while ((parent = sec_asn1d_get_enclosing_construct(parent)) && parent->indefinite); |

It first walks up the state stack to find the innermost constructed definite state and uses its state->pending value as an upper bound. It then walks the state stack again and for each in-between state subtracts from that original value of pending how many bytes could have been consumed by those in between states. It's pretty clear that the pending value is therefore vitally important; it's used to determine an upper bound so if we could mess with it this "bounds check" could go wrong.

After figuring out that this was pretty clearly the only place where any kind of bounds checking takes place I looked back at the fix more closely.

We know that sec_asn1d_parse_bit_string is only the function which changed:

|

static unsigned long sec_asn1d_parse_bit_string (sec_asn1d_state *state, const char *buf, unsigned long len) { unsigned char byte;

/*PORT_Assert (state->pending > 0); */ PORT_Assert (state->place == beforeBitString);

if ((state->pending == 0) || (state->contents_length == 1)) { if (state->dest != NULL) { SecAsn1Item *item = (SecAsn1Item *)(state->dest); item->Data = NULL; item->Length = 0; state->place = beforeEndOfContents; } if(state->contents_length == 1) { /* skip over (unused) remainder byte */ return 1; } else { return 0; } }

if (len == 0) { state->top->status = needBytes; return 0; }

byte = (unsigned char) *buf; if (byte > 7) { dprintf("decodeError: parse_bit_string remainder oflow\n"); PORT_SetError (SEC_ERROR_BAD_DER); state->top->status = decodeError; return 0; }

state->bit_string_unused_bits = byte; state->place = duringBitString; state->pending -= 1;

return 1; } |

The highlighted region of the function are the characters which were removed by the patch. This function is meant to return the number of input bytes (pointed to by buf) which it consumed and my initial hunch was to notice that the patch removed a path through this function where you could get the count of input bytes consumed and pending out-of-sync. It should be the case that when they return 1 in the removed code they also decrement state->pending, as they do in the other place where this function returns 1.

I spent quite a while trying to figure out how you could actually turn that into something useful but in the end I don't think you can.

So what else is going on here?

This state is reached with buf pointing to the first byte after the length value of a primitive bitstring. state->contents_length is the value of that parsed length. Bitstrings, as discussed earlier, are a unique ASN.1 string type in that they have an extra meta-data byte at the beginning (the unused-bits count byte.) It's perfectly fine to have a definite zero-length string - indeed that's (sort-of) handled earlier than this in the prepareForContents state, which short-circuits straight to afterEndOfContents:

|

if (state->contents_length == 0 && (! state->indefinite)) { /* * A zero-length simple or constructed string; we are done. */ state->place = afterEndOfContents; |

Here they're detecting a definite-length string type with a content length of 0. But this doesn't handle the edge case of a bitstring which consists only of the unused-bits count byte. The state->contents_length value of that bitstring will be 1, but it doesn't actually have any "contents".

It's this case which the (state->contents_length == 1) conditional in sec_asn1d_parse_bit_string matches:

|

if ((state->pending == 0) || (state->contents_length == 1)) { if (state->dest != NULL) { SecAsn1Item *item = (SecAsn1Item *)(state->dest); item->Data = NULL; item->Length = 0; state->place = beforeEndOfContents; } if(state->contents_length == 1) { /* skip over (unused) remainder byte */ return 1; } else { return 0; } } |

By setting state->place to beforeEndOfContents they are again trying to short-circuit the state machine to skip ahead to the state after the string contents have been consumed. But here they take an additional step which they didn't take when trying to achieve exactly the same thing in prepareForContents. In addition to updating state->place they also NULL out the dest SecAsn1Item's Data field and set the Length to 0.

I mentioned earlier that the new child states which are allocated to recursively parse the sub-strings of constructed strings get a copy of the parent's dest field (which is a pointer to a pointer to the output buffer.) This makes sense: that output buffer is only allocated once then gets recursively filled-in in a linear fashion by the children. (Technically this isn't actually how it works if the outermost string is indefinite length, there's separate handling for that case which instead builds a linked-list of substrings which are eventually concatenated, see sec_asn1d_concat_substrings.)

If the output buffer is only allocated once, what happens if you set Data to NULL like they do here? Taking a step back, does that actually make any sense at all?

No, I don't think it makes any sense. Setting Data to NULL at this point should at the very least cause a memory leak, as it's the only pointer to the output buffer.

The fun part though is that that's not the only consequence of NULLing out that pointer. item->Data is used to signal something else.

Here's a snippet from prepare_for_contents when it's determining whether there's enough space in the output buffer for this substring

|

} else if (state->substring) { /* * If we are a substring of a constructed string, then we may * not have to allocate anything (because our parent, the * actual constructed string, did it for us). If we are a * substring and we *do* have to allocate, that means our * parent is an indefinite-length, so we allocate from our pool; * later our parent will copy our string into the aggregated * whole and free our pool allocation. */ if (item->Data == NULL) { PORT_Assert (item->Length == 0); poolp = state->top->our_pool; } else { alloc_len = 0; } |

As the comment implies, if both item->Data is NULL at this point and state->substring is true, then (they believe) it must be the case that they are currently parsing a substring of an outer-level indefinite string, which has no definite-sized buffer already allocated. In that case the meaning of the item->Data pointer is different to that which we describe earlier: it's merely a temporary buffer meant to hold only this substring. Just above here alloc_len was set to the content length of this substring; and for the outer-definite-length case it's vitally important that alloc_len then gets set to 0 here (which is really indicating that a buffer has already been allocated and they must not allocate a new one.)

To emphasize the potentially subtle point: the issue is that using this conjunction (state->substring && !item->Data) for determining whether this a substring of a definite length or outer-level-indefinite string is not the same as the method used by the convoluted bounds checking code we saw earlier. That method walks up the current state stack and checks the indefinite bits of the super-strings to determine whether they're processing a substring of an outer-level-indefinite string.

Putting that all together, you might be able to see where this is going... (but it is still pretty subtle.)

Assume that we have an outer definite-length constructed bitstring with three primitive bitstrings as substrings:

Upon encountering the first outer-most definite length constructed bitstring, the code will allocate a fixed-size buffer, large enough to store all the remaining input which makes up this string, which in this case is 42 bytes. At this point dest->Data points to that buffer.

They then allocate a child state, which gets a copy of the dest pointer (not a copy of the dest SecAsn1Item object; a copy of a pointer to it), and proceed to parse the first child substring.

This is a primitive bitstring with a length of 1 which triggers the vulnerable path in sec_asn1d_parse_bit_string and sets dest->Data to NULL. The state machine skips ahead to beforeEndOfContents then eventually the next substring gets parsed - this time with dest->Data == NULL.

Now the logic goes wrong in a bad way and, as we saw in the snippet above, a new dest->Data buffer gets allocated which is the size of only this substring (2 bytes) when in fact dest->Data should already point to a buffer large enough to hold the entire outer-level-indefinite input string. This bitstring's contents then get parsed and copied into that buffer.

Now we come to the third substring. dest->Data is no longer NULL; but the code now has no way of determining that the buffer was in fact only (erroneously) allocated to hold a single substring. It believes the invariant that item->Data only gets allocated once, when the first outer-level definite length string is encountered, and it's that fact alone which it uses to determine whether dest->Data points to a buffer large enough to have this substring appended to it. It then happily appends this third substring, writing outside the bounds of the buffer allocated to store only the second substring.

This gives you a great memory corruption primitive: you can cause allocations of a controlled size and then overflow them with an arbitrary number of arbitrary bytes.

Here's an example encoding for an ASN.1 bitstring which triggers this issue:

|

uint8_t concat_bitstrings_constructed_definite_with_zero_len_realloc[] = {ASN1_CLASS_UNIVERSAL | ASN1_CONSTRUCTED | ASN1_BIT_STRING, // (0x23) 0x4a, // initial allocation size ASN1_CLASS_UNIVERSAL | ASN1_PRIMITIVE | ASN1_BIT_STRING, 0x1, // force item->Data = NULL 0x0, // number of unused bits in the final byte ASN1_CLASS_UNIVERSAL | ASN1_PRIMITIVE | ASN1_BIT_STRING, 0x2, // this is the reallocation size 0x0, // number of unused bits in the final byte 0xff, // only byte of bitstring ASN1_CLASS_UNIVERSAL | ASN1_PRIMITIVE | ASN1_BIT_STRING, 0x41, // 64 actual bytes, plus the remainder, will cause 0x40 byte memcpy one byte in to 2 byte allocation 0x0, // number of unused bits in the final byte 0xff, 0xff,// -- continues for overflow |

Why wasn't this found by fuzzing?

This is a reasonable question to ask. This source code is really really hard to audit, even with the diff it was at least a week of work to figure out the true root cause of the bug. I'm not sure if I would have spotted this issue during a code audit. It's very broken but it's quite subtle and you have to figure out a lot about the state machine and the bounds-checking rules to see it - I think I might have given up before I figured it out and gone to look for something easier.

But the trigger test-case is neither structurally complex nor large, and feels within-grasp for a fuzzer. So why wasn't it found? I'll offer two points for discussion:

Perhaps it's not being fuzzed?

Or at least, it's not being fuzzed in the exact form which it appears in Apple's Security.framework library. I understand that both Mozilla and Google do fuzz the NSS ASN.1 parser and have found a bunch of vulnerabilities, but note that the key part of the vulnerable code ("|| (state->contents_length == 1" in sec_asn1d_parse_bit_string) isn't present in upstream NSS (more on that below.)

Can it be fuzzed effectively?

Even if you did build the Security.framework version of the code and used a coverage guided fuzzer, you might well not trigger any crashes. The code uses a custom heap allocator and you'd have to either replace that with direct calls to the system allocator or use ASAN's custom allocator hooks. Note that upstream NSS does do that, but as I understand it, Apple's fork doesn't.

History

I'm always interested in not just understanding how a vulnerability works but how it was introduced. This case is a particularly compelling example because once you understand the bug, the code construct initially looks extremely suspicious. It only exists in Apple's fork of NSS and the only impact of that change is to introduce a perfect memory corruption primitive. But let's go through the history of the code to convince ourselves that it is much more likely that it was just an unfortunate accident:

The earliest reference to this code I can find is this, which appears to be the initial checkin in the Mozilla CVS repo on March 31, 2000:

|

static unsigned long sec_asn1d_parse_bit_string (sec_asn1d_state *state, const char *buf, unsigned long len) { unsigned char byte;

PORT_Assert (state->pending > 0); PORT_Assert (state->place == beforeBitString);

if (len == 0) { state->top->status = needBytes; return 0; }

byte = (unsigned char) *buf; if (byte > 7) { PORT_SetError (SEC_ERROR_BAD_DER); state->top->status = decodeError; return 0; }

state->bit_string_unused_bits = byte; state->place = duringBitString; state->pending -= 1;

return 1; } |

On August 24th, 2001 the form of the code changed to something like the current version, in this commit with the message "Memory leak fixes.":

|

static unsigned long sec_asn1d_parse_bit_string (sec_asn1d_state *state, const char *buf, unsigned long len) { unsigned char byte;

- PORT_Assert (state->pending > 0); /*PORT_Assert (state->pending > 0); */ PORT_Assert (state->place == beforeBitString);

+ if (state->pending == 0) { + if (state->dest != NULL) { + SECItem *item = (SECItem *)(state->dest); + item->data = NULL; + item->len = 0; + state->place = beforeEndOfContents; + return 0; + } + }

if (len == 0) { state->top->status = needBytes; return 0; }

byte = (unsigned char) *buf; if (byte > 7) { PORT_SetError (SEC_ERROR_BAD_DER); state->top->status = decodeError; return 0; }

state->bit_string_unused_bits = byte; state->place = duringBitString; state->pending -= 1;

return 1; } |

This commit added the item->data = NULL line but here it's only reachable when pending == 0. I am fairly convinced that this was dead code and not actually reachable (and that the PORT_Assert which they commented out was actually valid.)

The beforeBitString state (which leads to the sec_asn1d_parse_bit_string method being called) will always be preceded by the afterLength state (implemented by sec_asn1d_prepare_for_contents.) On entry to the afterLength state state->contents_length is equal to the parsed length field and sec_asn1d_prepare_for_contents does:

state->pending = state->contents_length;

So in order to reach sec_asn1d_parse_bit_string with state->pending == 0, state->contents_length would also need to be 0 in sec_asn1d_prepare_for_contents.

That means that in the if/else decision tree below, at least one of the two conditionals must be true:

|

if (state->contents_length == 0 && (! state->indefinite)) { /* * A zero-length simple or constructed string; we are done. */ state->place = afterEndOfContents; ... } else if (state->indefinite) { /* * An indefinite-length string *must* be constructed! */ dprintf("decodeError: prepare for contents indefinite not construncted\n"); PORT_SetError (SEC_ERROR_BAD_DER); state->top->status = decodeError; |

yet it is required that neither of those be true in order to reach the final else which is the only path to reaching sec_asn1d_parse_bit_string via the beforeBitString state:

|

} else { /* * A non-zero-length simple string. */ if (state->underlying_kind == SEC_ASN1_BIT_STRING) state->place = beforeBitString; else state->place = duringLeaf; } |

So at that point (24 August 2001) the NSS codebase had some dead code which looked like it was trying to handle parsing an ASN.1 bitstring which didn't have an unused-bits byte. As we've seen in the rest of this post though, that handling is quite wrong, but it didn't matter as the code was unreachable.

The earliest reference to Apple's fork of that NSS code I can find is in the SecurityNssAsn1-11 package for OS X 10.3 (Panther) which would have been released October 24th, 2003. In that project we can find a CHANGES.apple file which tells us a little more about the origins of Apple's fork:

General Notes

-------------

1. This module, SecurityNssAsn1, is based on the Netscape Security

Services ("NSS") portion of the Mozilla Browser project. The

source upon which SecurityNssAsn1 was based was pulled from

the Mozilla CVS repository, top of tree as of January 21, 2003.

The SecurityNssAsn1 project contains only those portions of NSS

used to perform BER encoding and decoding, along with minimal

support required by the encode/decode routines.

2. The directory structure of SecurityNssAsn1 differs significantly

from that of NSS, rendering simple diffs to document changes

unwieldy. Diffs could still be performed on a file-by-file basis.

3. All Apple changes are flagged by the symbol __APPLE__, either

via "#ifdef __APPLE__" or in a comment.

That document continues on to outline a number of broad changes which Apple made to the code, including reformatting the code and changing a number of APIs to add new features. We also learn the date at which Apple forked the code (January 21, 2003) so we can go back through a github mirror of the mozilla CVS repository to find the version of secasn1d.c as it would have appeared then and diff them.

From that diff we can see that the Apple developers actually made fairly significant changes in this initial import, indicating that this code underwent some level of review prior to importing it. For example:

|

@@ -1584,7 +1692,15 @@ /* * If our child was just our end-of-contents octets, we are done. */ + #ifdef __APPLE__ + /* + * Without the check for !child->indefinite, this path could + * be taken erroneously if the child is indefinite! + */ + if(child->endofcontents && !child->indefinite) { + #else if (child->endofcontents) { |

They were pretty clearly looking for potential correctness issues with the code while they were refactoring it. The example shown above is a non-trivial change and one which persists to this day. (And I have no idea whether the NSS or Apple version is correct!) Reading the diff we can see that not every change ended up being marked with #ifdef __APPLE__ or a comment. They also made this change to sec_asn1d_parse_bit_string:

|

@@ -1372,26 +1469,33 @@ /*PORT_Assert (state->pending > 0); */ PORT_Assert (state->place == beforeBitString);

- if (state->pending == 0) { - if (state->dest != NULL) { - SECItem *item = (SECItem *)(state->dest); - item->data = NULL; - item->len = 0; - state->place = beforeEndOfContents; - return 0; - } + if ((state->pending == 0) || (state->contents_length == 1)) { + if (state->dest != NULL) { + SECItem *item = (SECItem *)(state->dest); + item->Data = NULL; + item->Length = 0; + state->place = beforeEndOfContents; + } + if(state->contents_length == 1) { + /* skip over (unused) remainder byte */ + return 1; + } + else { + return 0; + } } |

In the context of all the other changes in this initial import this change looks much less suspicious than I first thought. My guess is that the Apple developers thought that Mozilla had missed handling the case of a bitstring with only the unused-bits bytes and attempted to add support for it. It looks like the state->pending == 0 conditional must have been Mozilla's check for handling a 0-length bitstring so therefore it was quite reasonable to think that the way it was handling that case by NULLing out item->data was the right thing to do, so it must also be correct to add the contents_length == 1 case here.

In reality the contents_length == 1 case was handled perfectly correctly anyway in sec_asn1d_parse_more_bit_string, but it wasn't unreasonable to assume that it had been overlooked based on what looked like a special case handling for the missing unused-bits byte in sec_asn1d_parse_bit_string.

The fix for the bug was simply to revert the change made during the initial import 18 years ago, making the dangerous but unreachable code unreachable once more:

|

if ((state->pending == 0) || (state->contents_length == 1)) { if (state->dest != NULL) { SecAsn1Item *item = (SecAsn1Item *)(state->dest); item->Data = NULL; item->Length = 0; state->place = beforeEndOfContents; } if(state->contents_length == 1) { /* skip over (unused) remainder byte */ return 1; } else { return 0; } } |

Conclusions

Forking complicated code is complicated. In this case it took almost two decades to in the end just revert a change made during import. Even verifying whether this revert is correct is really hard.

The Mozilla and Apple codebases have continued to diverge since 2003. As I discovered slightly too late to be useful, the Mozilla code now has more comments trying to explain the decoder's "novel" memory safety approach.

Rewriting this code to be more understandable (and maybe even memory safe) is also distinctly non-trivial. The code doesn't just implement ASN.1 decoding; it also has to support safely decoding incorrectly encoded data, as described by this verbatim comment for example:

/*

* Okay, this is a hack. It *should* be an error whether

* pending is too big or too small, but it turns out that

* we had a bug in our *old* DER encoder that ended up

* counting an explicit header twice in the case where

* the underlying type was an ANY. So, because we cannot

* prevent receiving these (our own certificate server can

* send them to us), we need to be lenient and accept them.

* To do so, we need to pretend as if we read all of the

* bytes that the header said we would find, even though

* we actually came up short.

*/

Verifying that a rewritten, simpler decoder also handles every hard-coded edge case correctly probably leads to it not being so simple after all.

No comments:

Post a Comment