Posted by Mateusz Jurczyk, Project Zero

This post is the second of a multi-part series capturing my journey from discovering a vulnerable little-known Samsung image codec, to completing a remote zero-click MMS attack that worked on the latest Samsung flagship devices. New posts will be linked here as they are published.

- [this post]

Introduction

In Part 1, I discussed how I discovered the "Qmage" image format natively supported on all modern Samsung phones, and how I traced its roots to Android boot animations and even some pre-Android phones. At this stage of the story, we also know that the codec seems very fragile and is likely affected by bugs, and that it constitutes a zero-click remote attack surface via MMS and the default Samsung Messages app. I was at this point of the project in early December 2019. The next logical step was to thoroughly fuzz it – the code was definitely too extensive and complex to approach with a manual audit, especially without access to the original source or knowledge of the inner workings of the format. As a big fan of fuzzing, I hoped to be able to run it in accordance with the current state of the art: efficiently (without unnecessary overhead), at scale, with code coverage information, reliable reproducibility and effective deduplication. But how to achieve all this with a codec that is part of Android, accessible only through the Skia image API, and precompiled for the ARM/ARM64 architectures only? Read on to find out!

Writing the test harness

The fuzzing harness is usually one of the most critical pieces of a successful fuzzing session, and it was the first thing I started working on. I published the end result of my work as SkCodecFuzzer on GitHub, and it can be used as a reference while reading this post. My initial goal with the loader was to write a Linux command-line program that could run on physical Android devices, and use the Skia SkCodec interface to load and decode an input image file in exactly the same way (or at least as closely as possible) as the internal Android doDecode function does it. This turned out to be surprisingly easy: if we ignore some largely irrelevant portions of doDecode, such as interactions with the JNI (Java Native Interface), NinePatch related code and scaling, we are left with just a handful of simple method calls. Accordingly, the ProcessImage() function in my harness is less than 100 lines of code. In order to build such an initial version of the loader, I used the Android NDK toolset, included several header files from the Skia source code, and linked it with the libhwui.so library from the target operating system. After copying the executable and an example Qmage file (let's stick with accessibility_light_easy_off.qmg from Part 1) to my test phone, I could test that it worked:

It's worth noting that the harness I used for my fuzzing had one extra check, verifying that the input file started with a QM or QG signature. This was necessary to make sure that the coverage-guided fuzzing wouldn't diverge towards other image formats supported by Skia, and only Qmage-related code would remain tested. There is also a slight difference between Android's code and my harness in the specific heap allocation class used (SkBitmap::HeapAllocator vs a selection of possible classes), but that shouldn't matter in any practical way.
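The signature gate described above is simple enough to sketch. This is a minimal Python illustration, not the harness's actual C++ code; the function name is hypothetical, and the only fact taken from the text is that accepted inputs start with `QM` or `QG`.

```python
def has_qmage_signature(data: bytes) -> bool:
    """Return True if the buffer starts with a Qmage magic ("QM" or "QG")."""
    # Slicing never raises, so inputs shorter than two bytes are simply rejected.
    return data[:2] in (b"QM", b"QG")
```

Rejecting everything else before the decoder is invoked keeps the coverage feedback loop from rewarding mutations that drift into other Skia-supported formats.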

Having such a loader run on Android is great, but it doesn't scale very well and my fuzzing tooling is much better on x86 too, so I was very tempted to get it running on the Intel architecture. One solution would be to try and run the same aarch64 ELF in an emulator such as qemu-aarch64. To make this work, we have to make sure that all potential dependencies of the harness are accessible on the host's file system, by pulling the full /system/lib64 directory, the /system/bin/linker64 file, and perhaps further directories such as /apex/com.android.runtime/lib64 from the research phone to our PC. Once we have that, we can try executing the loader under qemu:

If $ANDROID_PATH above points to a directory with Android 9 system files, it works! This is great news as it means that there aren't any fundamental blockers to running emulated Android user-mode components on an x86-64 host. With Android 10 system files, there was one minor issue with an abort thrown by libclang_rt.ubsan_standalone-aarch64-android.so:

By looking at the underlying code, it would seem that a UBSAN_OPTIONS=decorate_proc_maps=0 environment variable should fix the problem, but it didn't, and I didn't investigate further. Instead, I swapped the library with its older copy from Android 9, and the harness worked correctly again.

So, we can now run the Qmage codec on a typical Intel workstation, but one question remains – what is the performance? Software emulation such as qemu's is known to introduce visible overhead as compared to native execution speed. Let's quickly compare the run time of the loader on a Samsung device and in qemu, against the accessibility_light_easy_off.qmg sample:

and:

Based on this simple test, there seems to be a ~3x slowdown when running in the emulator. This is not great but completely acceptable, especially if we can scale it up to numerous machines, and maybe find some further optimizations along the way.

At this point, we have a very basic harness that just decodes an input image using the same Skia interfaces as Android. Let's see how we can make it better fit for fuzzing.

Improvement #1 – custom ASAN-like crash reports

One problem with the loader running under qemu is how crashes are manifested by default:

A native SIGSEGV signal is generated in the emulator and caught by the default libc handler. Let's try this again with gdb attached to see where the exception is thrown:

As we can see, the x86-64 instruction triggering the crash resides in qemu's code generation buffer, and it's hard to trace it to the actual culprit in ARM assembly inside libhwui.so. The native call stack isn't of much help either, as it only shows the qemu internal functions and not the stack frames of the emulated code. Because of all this, working with these raw crashes is incredibly difficult – they are hard to analyze, triage or deduplicate without re-running them on an Android device. There had to be another way to extract accurate information about the emulated ARM CPU context at the time of the crash.

The internal Google fuzzing infrastructure I use for projects like this supports both native crashes (signals) and AddressSanitizer reports. Most importantly, these reports don't have to be 100% identical to legitimate ASAN outputs. They only have to be close enough to be correctly parsed, but they can still contain both fake data (if the specific information is not available in the given context), and some extra sections you don't normally see in ASAN-enabled targets. I have already taken advantage of this behavior a few times in the past, for example in the DrSancov project I published, which aims to convert any closed-source Linux x86(-64) executable into a semi-ASAN/SanitizerCoverage compatible one using the DynamoRIO instrumentation framework. This was my idea here too – if I could register my own signal handler in the harness, it could print out all the relevant context that it has access to within the emulated process, effectively faking an ASAN crash.

The end result is the GeneralSignalHandler function and other unwinding and symbol-related helper routines, which are able to generate pretty crash reports such as the following one:

The first section of the report is essential for automation, as it includes the type of the signal and stack trace used for deduplication. The disassembly and register values are supplementary and mostly useful in triage, to quickly determine what kind of crash we are dealing with.

The extra functionality comes at the cost of slightly more difficult compilation, as Capstone and libbacktrace need to have their headers included, and static/shared objects linked into the loader. Fortunately this didn't turn out to be too hard, as outlined in SkCodecFuzzer's README. If you run into any issues during the building process with SkCodecFuzzer, please refer to the Issues section as several related problems have been resolved there.

In its current shape, the signal handler also includes a few interesting workarounds for problems that I didn't originally anticipate and only stumbled upon during development and testing:

- On Android 10, executable code sections (.text etc.) are marked as Execute Only and are thus non-readable (--x access rights). This caused the signal handler to fail when running on a physical Android device, as Capstone would trigger a nested crash while trying to read the instruction bytes for disassembly. I fixed this with an mprotect call to make the memory readable.

- If the stack is corrupted (e.g. due to a buffer overflow), the stack unwinding code may crash on invalid memory access. Such "double faults" need to be gracefully handled so that the full crash report is always generated correctly. I fixed this with the DoubleFaultHandler and the globals::in_stack_unwinding flag.

- The abort libc function (called e.g. by __stack_chk_fail) disables the delivery of all signals other than SIGABRT, making it impossible to catch nested exceptions in the stack unwinder. I fixed this with a sigprocmask call.

- Crashes occurring at different offsets within standard memory manipulation functions (memcpy, memmove, memset) were wrongly classified as unique, bloating the results and skewing the numbers. I fixed this by detecting these special functions and using their entrypoint addresses in the stack trace, instead of the precise addresses of the faulting instructions.
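The last workaround in the list above can be illustrated with a short Python sketch. It is not the code from SkCodecFuzzer; the `Frame` type, its fields and the `dedup_address` helper are hypothetical names, and only the set of special-cased functions comes from the text.

```python
from typing import NamedTuple

# Generic routines whose exact faulting offset carries no deduplication value.
GENERIC_MEM_FUNCS = {"memcpy", "memmove", "memset"}


class Frame(NamedTuple):
    symbol: str  # resolved function name
    pc: int      # address of the faulting (or return) instruction
    entry: int   # address of the function's entry point


def dedup_address(frame: Frame) -> int:
    """Address to print in the stack trace for deduplication purposes."""
    if frame.symbol in GENERIC_MEM_FUNCS:
        return frame.entry  # collapse every offset inside the routine
    return frame.pc
```

With this normalization, two crashes inside memcpy that differ only in which copy instruction faulted produce the same top stack frame.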

Improvement #2 – custom low-level allocator (libdislocator)

The custom signal handler is a very useful feature for inspecting and deduplicating crashes, but it helps the most coupled with effective detection of memory safety violations. On Android 9 and 10, Skia uses the default system allocator (jemalloc), which is optimized for performance and not fuzzing. As a result, many tiny out-of-bounds memory accesses may not be detectable at all, as they will just silently fall into the adjacent allocation without corrupting any critical data. In other cases, some bugs may overwrite different adjacent chunks in different test runs due to a non-deterministic heap state, leading to exceptions being thrown further down the line at different locations of the library. All in all, using the default allocator in fuzzing is almost guaranteed to conceal some bugs, and obscure the real root cause of others.

The solution to this problem is to use allocators specialized for fuzzing, which typically incur a significant memory overhead, but can provide very precise detection of memory bugs at the very moment when they happen. On Windows, examples of such allocators are PageHeap in user-mode and Special Pool in the kernel. On Linux, for closed-source software, there is Electric Fence and of course projects like valgrind for improved bug detection, but my favorite tool for the job is AFL's libdislocator. It is a super lightweight (<300 lines) module that simply implements malloc and free as mmap and mprotect, placing each returned chunk precisely at the end of a mapped memory page. It is easily adjustable, works on x86/ARM, and can be used either as a preloaded .so library or linked statically into the harness.

In my case, I linked it in statically and redirected allocator calls to it via the malloc_hook mechanism. On Android, enabling these hooks requires setting the LIBC_HOOKS_ENABLE environment variable, which lets us easily switch between libdislocator and jemalloc when needed. Thanks to being able to intercept the heap allocator interface, I could also implement the --log_malloc flag, to log all allocs and frees taking place in the process at runtime. This option proved invaluable to me later during exploit development, as it allowed me to better understand the allocation patterns and identify the crashes most suitable for exploitation.

The entire fuzzing session ran with libdislocator enabled, and I believe that all identified crashes manifested real bugs in the code. At the same time, it is important to note that there are some differences between the custom and default system allocator, which may influence how easy it is to reproduce a libdislocator crash with jemalloc (also detailed in my original bug report in section "3.3. Libdislocator vs libc malloc"):

- There is a hard 1 GB allocation limit enforced by libdislocator, which makes it easier to surface bugs related to memory pressure, but may also mask issues that require large allocations to succeed first.

- libdislocator doesn't adhere to the same allocation alignment rules as jemalloc, meaning that it may return completely misaligned pointers (side note: it is therefore incompatible with software that uses the low pointer bits for tags). This may hide some small out-of-bounds memory accesses (1-7 bytes) on Android, if they happen to fall into the padding area. It's worth noting that the misalignment occurs only in qemu, which doesn't seem to enforce the address alignment requirement on atomic instructions such as LDXR. On Android itself, the harness does correctly align the chunks too, in order to prevent bogus SIGBUS signals being thrown.

- libdislocator fills all new allocations with a 0xCC marker byte to improve detection of use of uninitialized memory. With jemalloc, the contents of each allocated chunk are not guaranteed to have any particular value. Controlling the bytes of a specific fresh allocation may be non-trivial or require the use of "heap massaging" techniques in practical attack scenarios.
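To make the placement rule and the alignment caveat above concrete, here is a small Python model of the address arithmetic only. It is a sketch under assumptions: a 4096-byte page, a single guard page directly after the data pages, and no bookkeeping header; the real libdislocator performs the equivalent with mmap and mprotect, and `place_chunk` is a hypothetical name.

```python
PAGE_SIZE = 4096  # assumed page size


def place_chunk(size: int, align: int = 1):
    """Return (data_pages, offset, slack) for a chunk pushed against a guard page.

    offset is where the chunk starts inside the mapping, and slack is the
    number of accessible bytes between the chunk's end and the guard page.
    """
    data_pages = max(1, -(-size // PAGE_SIZE))   # ceil(size / PAGE_SIZE)
    guard_start = data_pages * PAGE_SIZE         # first inaccessible byte
    offset = (guard_start - size) // align * align  # round the start down
    slack = guard_start - (offset + size)
    return data_pages, offset, slack
```

For a 13-byte chunk, `place_chunk(13)` leaves no slack, so even a one-byte overread faults immediately, whereas `place_chunk(13, align=8)` leaves three accessible padding bytes in which a small out-of-bounds access would go unnoticed.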

With the custom allocator covered, we have arrived at the current form of the SkCodecFuzzer harness. It is time to look beyond it and see how we can achieve even more at the level of the qemu emulator.

Implementing a Qemu fork server

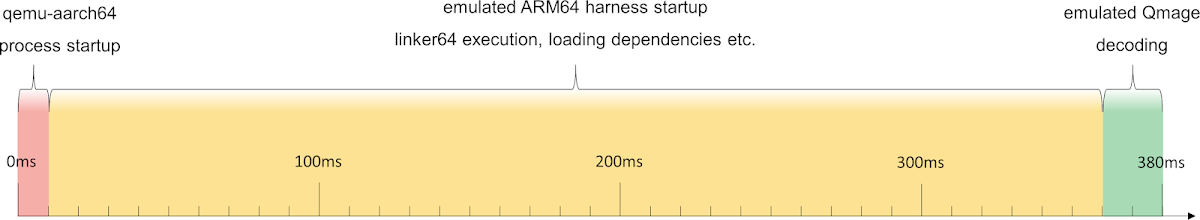

Earlier in the post, I showed how decoding a sample Qmage file with our loader under qemu takes around 380ms. A question arises, what part of it is the qemu start up time, and is there any room for optimization here? We can run a simple test and measure the run time of the loader without any arguments:

It turns out that simply printing out some help and immediately exiting takes 95% of the time it takes to decode a bitmap, indicating that there is a large constant cost of starting the process, which we can try to eliminate or at least significantly reduce. There is a well known solution to this problem called fork server, and the internal Google fuzzing infrastructure supports it, including the ability to resume execution from a user-defined forkserver_main function.

Of course in this case, enabling the fork server is not as easy as flipping a configuration flag, because that would only accelerate the qemu process startup time (already quite short at 10ms). However, the bulk of the overhead (~350ms in our testing so far) comes from bootstrapping the emulated environment before the target main function is reached:

Therefore, we have to get the fork server to fork inside qemu at the point when the emulation reaches the "loader" program entrypoint (at the border of the yellow and green sections). Fortunately, we don't have to figure it all out on our own, as AFL already supports such a mechanism. To make it work with qemu-4.1.1 (the version I was using), I had to modify the code in two places:

- In the load_elf_image function in linux-user/elfload.c, to find the entry point of the loader executable, similarly to how afl_entry_point is initialized in AFL's patch.

- In the cpu_tb_exec function in accel/tcg/cpu-exec.c, to detect when the emulation has reached the entry point and to call into the special forkserver_main routine to activate the fork server, similarly to how the AFL_QEMU_CPU_SNIPPET2 macro executes in AFL's patch.

These two relatively simple modifications were sufficient to cause a dramatic boost of fuzzing performance. Let's look at the numbers from the servers I actually ran the fuzzing on. They're a bit slower than my workstation, so without the fork server, the loader takes on average 1160ms to decode a sample from my corpus. With the fork server, this is reduced to 56ms, which makes it a ~20.7x speed up! And it gets even better when we enable the code coverage collection (discussed in the next section) and specify the -d nochain command line flag: in that setting, the average decoding times grow to 6900ms (without fork server) and 147ms (with fork server) respectively, which further widens the gap between them to a factor of ~47x. In fuzzing, the importance of such small yet crucial optimization tricks simply cannot be overstated.
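The idea behind a fork server can be shown in isolation with a few lines of Python. This is a conceptual sketch only, not the qemu patch: `fork_server`, `startup` and `run_case` are hypothetical names, and in the real setup the "startup" phase corresponds to everything qemu does before the emulated loader's entry point is reached.

```python
import os


def fork_server(startup, run_case, inputs):
    """Run `startup` once, then execute every input in a freshly forked child."""
    state = startup()  # expensive bootstrap, paid only once
    exit_codes = []
    for data in inputs:
        pid = os.fork()
        if pid == 0:
            # Child: starts from the already initialized state (copy-on-write).
            code = 255
            try:
                code = run_case(state, data)
            finally:
                os._exit(code)  # never fall back into the parent's loop
        # Parent: wait for the child, then move on to the next test case.
        _, status = os.waitpid(pid, 0)
        if os.WIFEXITED(status):
            exit_codes.append(os.WEXITSTATUS(status))
        else:
            exit_codes.append(-os.WTERMSIG(status))  # child died from a signal
    return exit_codes
```

Because each child is discarded after one input, a crash or corrupted heap in one run cannot affect the next, while the constant startup cost is no longer paid per test case.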

Extracting code coverage – introducing QemuSanitizerCoverage

Another hugely important part of automated software testing is collecting and acting on the code coverage triggered by mutated samples. The fuzzer that I used supports reading .sancov coverage information files generated by the SanitizerCoverage instrumentation. Since the harness already pretends to be an ASAN-enabled target, why not become a SanCov-compatible one too? This is exactly the purpose of the DrSancov project, but it is based on DynamoRIO and thus can only be used with software compatible with the host CPU architecture. So, I had to "port" DrSancov to qemu, creating a mod dubbed QemuSanitizerCoverage.

I began working on the port by looking for a location in the code where the information about each executed basic block passed through. I quickly found the -d exec option (and this helpful blog post), which could be used to print out the kind of data I was interested in, but in textual form. I traced it back to the following snippet:

The above code resides in the familiar cpu_tb_exec function in accel/tcg/cpu-exec.c, which I had already modified to enable the fork server. In here, I only had to add a simple call to my sancov_log_trace() callback, passing itb->pc as the only argument. The actual work happens in the callback itself: if the instruction address resides in a known library, the corresponding cell in its coverage bitmap is marked as visited; if not, the /proc/pid/maps file is parsed to find the shared object or executable. Then, right before qemu exits, the collected coverage is dumped to disk. This is how it looks in practice:
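The callback logic described above can be approximated in Python as follows. This is a simplified model rather than the QemuSanitizerCoverage source: it parses the maps text up front instead of lazily, uses a set instead of a bitmap, and records offsets relative to the lowest mapped address of each module. The names `parse_maps` and `CoverageLog` are hypothetical.

```python
import bisect


def parse_maps(text: str):
    """Parse /proc/<pid>/maps text into sorted (start, end, path) tuples."""
    regions = []
    for line in text.splitlines():
        fields = line.split(None, 5)
        if len(fields) < 6:
            continue  # anonymous mapping without a backing file
        start, end = (int(x, 16) for x in fields[0].split("-"))
        regions.append((start, end, fields[5].strip()))
    return sorted(regions)


class CoverageLog:
    def __init__(self, regions):
        self.regions = regions
        self.starts = [r[0] for r in regions]
        self.base = {}  # path -> lowest mapped address of that module
        for start, _, path in regions:
            self.base[path] = min(start, self.base.get(path, start))
        self.hits = {}  # path -> set of covered offsets

    def log_trace(self, pc: int):
        """Record one executed block address, as the per-block hook would."""
        i = bisect.bisect_right(self.starts, pc) - 1
        if i < 0 or pc >= self.regions[i][1]:
            return  # pc does not belong to any file-backed mapping
        path = self.regions[i][2]
        self.hits.setdefault(path, set()).add(pc - self.base[path])
```

At exit, each per-module set would be written out as one coverage file, which is the step that produces the `.sancov` output.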

We get an output message similar to the one typically printed by SanitizerCoverage, which informs us that the processing of the sample Qmage file involved 1502 unique basic blocks in libhwui.so. We can take a peek at the coverage data:

There is an 8-byte header indicating the 32-bit format, followed by offsets of basic blocks relative to the start of the .text section in libhwui.so. We can convert the file to a format supported e.g. by Lighthouse and visualize the coverage or use the information directly to maintain an optimal corpus throughout the fuzzing session:
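For readers who want to inspect such files themselves, a minimal Python parser might look like the sketch below. It assumes the standard SanitizerCoverage layout (an 8-byte magic whose last byte selects 32-bit or 64-bit entries) and little-endian byte order; `parse_sancov` is a hypothetical helper name.

```python
import struct

MAGIC32 = 0xC0BFFFFFFFFFFF32  # entries are 4 bytes each
MAGIC64 = 0xC0BFFFFFFFFFFF64  # entries are 8 bytes each


def parse_sancov(blob: bytes):
    """Return the sorted list of offsets stored in a .sancov blob."""
    if len(blob) < 8:
        raise ValueError("too short to be a sancov file")
    (magic,) = struct.unpack_from("<Q", blob, 0)
    if magic == MAGIC32:
        fmt, width = "<I", 4
    elif magic == MAGIC64:
        fmt, width = "<Q", 8
    else:
        raise ValueError(f"not a sancov file: magic {magic:#x}")
    body = blob[8:]
    if len(body) % width:
        raise ValueError("truncated sancov file")
    return sorted(value for (value,) in struct.iter_unpack(fmt, body))
```

The number of entries returned for a single run is the "unique basic blocks" figure reported when the coverage is dumped.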

The benefits of having insight into the code coverage of a fuzzing target are well known, but I will emphasize that this feature played a key role in this project. It helped me fill in any gaps in my original input corpus, and get some degree of confidence that this highly extensive codec was tested thoroughly.

Initial file corpus

In my preparation for the first fuzzing attempt of the codec from Samsung Galaxy A50 (Android 9), there were three formats that I needed to find for my corpus: QMv1, QG1.0 and QG1.1. I was able to locate and extract a number of test cases encoded in each of them from the resources of built-in APKs in various Samsung firmwares from the 2014-2016 period, which I deemed sufficient to get myself started. Once I collected the initial data set, I ran a number of test fuzzing sessions during which the corpus continuously evolved thanks to the code coverage feedback. After a few days, it looked nothing like the original set of files: new samples were added, and most initial files were either removed or significantly mutated in the process. I was especially happy to see that a great majority of the files in the resulting corpus were minimized down to 20-50 bytes, which I attribute to the corpus management algorithm which favors shorter samples over longer ones (as described in my BH EU 2016 talk, slides 49-70).

When I learned about the existence of the new QG2.0 format in Android 10, I immediately went looking for such bitmaps in the usual place – embedded APK resources. To my surprise, I didn't find any images encoded in the new format then, and I still haven't seen any such files "in the wild" to date. This meant that I had to improvise. One of my attempts to create samples resembling the QG2.0 format was to take the existing ones in my corpus and hardcode the version in their headers to 2.0. This didn't work out very well as most such files were immediately crashing the codec (instead of hitting some deeper code paths), and I was left with only a few dozen artificial QG2.0 samples that probably didn't have very good coverage. I decided to leave the rest to the fuzzer and hope that over time, it would manage to synthesize much more interesting inputs in the new format.

I was not disappointed. Based on my measurements, after several days of fuzzing, the coverage of the QG2.0-related code paths was comparably good to the coverage of the three older formats. I will go into more detail on the numbers in a later section, but I think it is interesting to note that my December 2019 fuzzing session of the ≤ QG1.1 formats touched 18268 basic blocks in libhwui.so, while my January 2020 session of all ≤ QG2.0 formats had a coverage of 29081 blocks in the same library (and the coverage rate relative to the size of the Qmage codec was similar in both cases, at ~90%). This is a 59% increase, and it goes to show the extent of extra complexity added by Samsung in Android 10. It also seems in line with the size of the Qmage-related code in libhwui.so (mentioned in Part 1), which was 425 kB in Android 9, and 908 kB in Android 10.

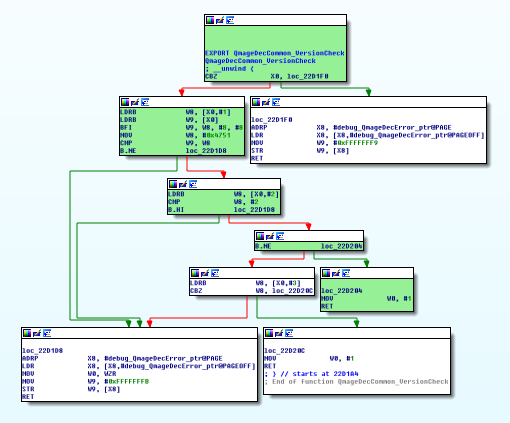

As a last thought, I find it amusing how the fuzzer managed to reach the QG2.0 code paths, considering that this latest format introduces a one-byte checksum, which is verified against the length of the file in QmageDecCommon_ParseHeader:

Even with this minor obstruction, the fuzzer managed to produce some samples that passed the check (most notably with a length of 257 bytes, which resolves to a 0x00 checksum). At the same time, the post-fuzzing corpus also contained plenty of QG1.2 files, which had me wondering for a long time, because I knew for a fact that this version didn't exist. When I finally decided to analyze this odd behavior, everything became clear. We have already discussed in Part 1 that the version check in QmageDecCommon_VersionCheck is very permissive and it allows anything ≤ 2.0, so 1.2 passes just fine. But why this specific version? In the SkQmgCodec class, there is a field that denotes the version of the image: 0 for 1.0, 1 for 1.1 and 2 for 2.0. The way it used to be initialized (it seems to be fixed now) was as follows:

- If version == 2.0, then internal_version = 2

- Else if version == X.Y, then internal_version = Y

So according to this logic, QG1.2 files were equivalent to QG2.0 for all intents and purposes, except that they were easier to synthesize due to the lack of checksum verification, which is the reason so many of them wound up in my fuzzer's dynamic corpus. I probably wouldn't have come up with it myself given the non-trivial data flow in the header parsing, and it never ceases to amaze me how basic mutations paired with a coverage feedback loop can lead to such unexpected and clever results.
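The two-rule initialization above translates directly into code. The following Python sketch mirrors only the logic as described in this post; the function name and signature are hypothetical and do not come from the SkQmgCodec binary.

```python
def internal_version(major: int, minor: int) -> int:
    """Mirror the (since fixed) version initialization described above."""
    if (major, minor) == (2, 0):
        return 2
    return minor  # "X.Y" collapses to Y, so 1.2 also yields 2
```

Calling it with `(1, 0)`, `(1, 1)` and `(2, 0)` gives the three expected values, while `(1, 2)` lands on the same internal value as 2.0, which is exactly the alias the fuzzer discovered.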

Mutation settings

The mutation settings I used for the fuzzing were very simple and involved five algorithms: flipping bits, randomly changing bytes, inserting "special" integers, performing arithmetic operations on the data, and cutting+pasting random contiguous chunks across the input data stream. I also chained pairs of these mutators together, and occasionally invoked Radamsa. The mutation ratios ranged from 0% to 0.1%.
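As a rough illustration of what ratio-driven mutation looks like, here is a self-contained Python sketch covering four of the listed algorithms at byte granularity. It is not the fuzzer used in this project: the specific "special" values, the arithmetic range and all function names are assumptions, and cut+paste, mutator chaining and Radamsa are left out for brevity.

```python
import random

SPECIAL_BYTES = (0x00, 0x01, 0x7F, 0x80, 0xFF)  # illustrative "special" values


def flip_bit(rng, b):
    return b ^ (1 << rng.randrange(8))


def random_byte(rng, b):
    return rng.randrange(256)


def special_byte(rng, b):
    return rng.choice(SPECIAL_BYTES)


def arithmetic(rng, b):
    return (b + rng.randint(-16, 16)) & 0xFF


MUTATORS = (flip_bit, random_byte, special_byte, arithmetic)


def mutate(data: bytes, ratio: float, seed: int) -> bytes:
    """Mutate each byte independently with probability `ratio`."""
    rng = random.Random(seed)  # seeded, so every test case is reproducible
    out = bytearray(data)
    for i in range(len(out)):
        if rng.random() < ratio:
            out[i] = rng.choice(MUTATORS)(rng, out[i])
    return bytes(out)
```

A ratio of 0.1% corresponds to `ratio=0.001`, meaning that on average one byte in a thousand is touched per generated sample.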

Results

In this section, I discuss the results of the "final" fuzzing session I ran in January 2020, which uncovered the bugs reported to Samsung in Project Zero Issue #2002.

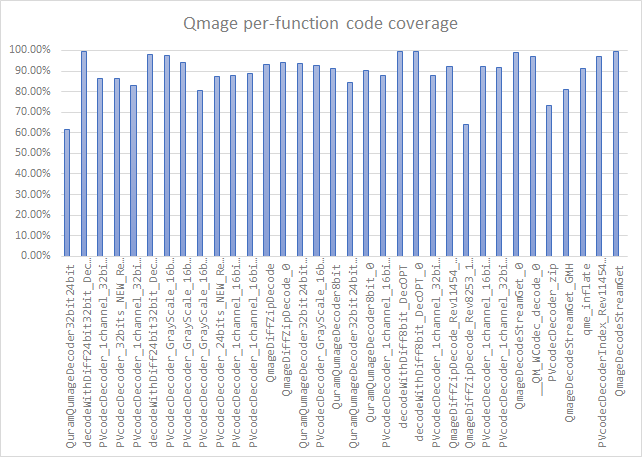

Code coverage

In the case of Qmage, it is difficult to precisely measure the percentage coverage of code relative to the overall size of the codec, because it is just one of many parts of the libhwui.so library, and even the codec itself contains unused and non-reachable code segments that shouldn't be included in the calculations. One way to address this problem is to only count functions with non-zero coverage, assuming that there probably aren't any significant routines completely missed by the corpus. By this metric, I have achieved an 87.30% coverage of the Qmage codec. What is most important, the "heavy" functions responsible for the complex data decoding and decompression are very well covered, with all of them having a coverage rate of >60%, and a great majority being at >80%. The chart below presents the coverage percentage of 34 Qmage functions longer than 4 kB. In total, they sum up to 26670 basic blocks, 23069 of which are covered (86.50%).
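The "only count functions with non-zero coverage" metric can be written down in a few lines. This Python sketch assumes per-function block counts are already available as `(covered, total)` pairs; the function name and input shape are hypothetical.

```python
def touched_function_coverage(blocks_by_function):
    """Percentage coverage over functions with at least one covered block.

    blocks_by_function maps a function name to (covered, total) block counts.
    """
    touched = [(c, t) for c, t in blocks_by_function.values() if c > 0]
    covered = sum(c for c, _ in touched)
    total = sum(t for _, t in touched)
    return 100.0 * covered / total if total else 0.0
```

Functions that were never reached drop out of both the numerator and the denominator, which is what keeps dead or unrelated code from dragging the figure down. The same division applied to the totals quoted above, 23069 out of 26670 blocks, gives the stated 86.50%.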

On one hand, these rates can be considered a success, but on the other, they may also indicate that any bugs residing in the remaining ~13% of the code never had a chance to be triggered and are still waiting to be uncovered. That is unless Samsung and/or Quramsoft have since started doing variant analysis or fuzzing of their own, which is easier and more effective with source code access.

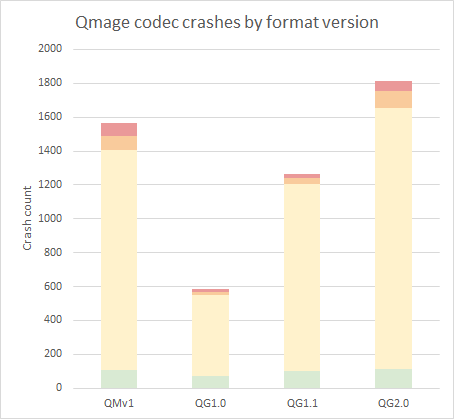

Crashes

Counting both the Android 9 fuzzing session and the subsequent Android 10 session, the fuzzer ran for about four weeks between December 2019 and January 2020. During this time, it identified 5218 unique crashes, where the uniqueness was defined by the three top-level stack trace entries. This number is surely bloated by some bugs which trigger with different call stacks, but still, by any standard, this is a huge number of ways to crash a library. I find it likely that the Qmage codec had never been subject to fuzzing or a manual audit before, and the prevalent lack of bound checks may even suggest that the codec was never supposed to be exposed to untrusted inputs.
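The uniqueness criterion can be sketched as a dictionary keyed by the first three stack frames. This is an illustrative Python model, assuming each crash is available as an identifier plus a list of frames ordered from the faulting function outwards; `crash_key` and `deduplicate` are hypothetical names.

```python
def crash_key(stack, depth=3):
    """Deduplication key: the `depth` top-level frames of a stack trace."""
    return tuple(stack[:depth])


def deduplicate(crashes):
    """Keep the first crash seen for each key; `crashes` yields (id, stack)."""
    unique = {}
    for crash_id, stack in crashes:
        unique.setdefault(crash_key(stack), crash_id)
    return unique
```

A shallow key like this merges crashes that differ only in their outer callers, but it still treats one root cause reached through different inner call chains as several crashes, which is the source of the bloat mentioned above.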

Thanks to the detailed ASAN-like reports accompanying the crashes, it was easy to perform some automated triage and classify them based on the signal type, accessed address, and the instruction causing the exception. I assigned each crash to one of the following categories, sorted by descending severity:

The categorization is highly simplified, but it does give some overview of the types of discovered issues. The "write" crashes are the most severe, because they manifest an attempt to write data to an invalid non-zero address, which is evidence of a memory corruption condition. They are followed by invalid reads of ≥ 8 bytes and crashes in generic memory manipulation functions (memcpy), which may indicate attempts to load pointers from invalid locations, or other problems related to the handling of structures or contiguous data blobs. Next we have small invalid reads (1, 2 or 4 bytes), which generally manifest simple out-of-bounds reads of the input buffer, and then "sigabrt" (memory exhaustion and likely non-exploitable stack corruption) and "null-deref" (reads or writes to near-zero addresses), both of which are relatively trivial security threats beyond some DoS attacks.

That said, assessing bugs based on their first invalid memory access is not always reliable. For example, a one-byte overread may be directly followed by a buffer overflow, or a four-byte invalid access may manifest a use-after-free condition, which is much more serious than any random out-of-bounds buffer read. And even correctly interpreting the crash reports was no trivial feat; as I noticed shortly after reporting Issue #2002, some crashes were incorrectly classified as "null-deref" even though they were caused by attempted reads of completely invalid, non-canonical addresses. The reason is that when such a wild address is accessed, the siginfo_t.si_addr field received by the signal handler doesn't accurately reflect that address, and instead contains 0x0. This made the ASAN reports look like NULL pointer dereferences and confused my triage script. The solution was to re-analyze the reports by cross-referencing si_addr with the value of the source register, and an update shown in comment #1 was sent to Samsung the next day.
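A triage routine along these lines, including the si_addr cross-check, could look like the Python sketch below. It is not the script used for Issue #2002: the 0x1000 cutoff for "near-zero" addresses, the `read-memcpy` label, and all function and parameter names are assumptions, and `reg_addr` stands for the address computed from the faulting instruction's source register.

```python
NULL_PAGE_LIMIT = 0x1000  # assumed cutoff for "near-zero" addresses


def effective_address(si_addr: int, reg_addr: int) -> int:
    """Prefer the register-derived address when si_addr is a misleading 0x0."""
    if si_addr == 0 and reg_addr >= NULL_PAGE_LIMIT:
        return reg_addr
    return si_addr


def classify(signal, is_write, size, si_addr, reg_addr, in_mem_func=False):
    """Assign a crash to one coarse category based on its first bad access."""
    if signal == "SIGABRT":
        return "sigabrt"
    addr = effective_address(si_addr, reg_addr)
    if addr < NULL_PAGE_LIMIT:
        return "null-deref"  # read or write close to address zero
    if is_write:
        return "write"
    if in_mem_func:
        return "read-memcpy"  # fault inside memcpy-like routines
    return f"read-{size}"
```

Without the `effective_address` step, a read through a wild non-canonical pointer that is reported with `si_addr == 0` would be filed under "null-deref" instead of the read category it belongs to.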

What we can infer from the summary with some certainty is that upwards of 95% of the crashes were not critical, but they were an indictment of the overall quality of the code. Specifically, the fact that there were so many "read-1" issues shows that most of the parsing in the codec is implemented at a one-byte granularity, and that there were few to no bounds checks while reading from the input stream (until May 2020). In absolute numbers, however, the quantity of the 3.33% memory corruption bugs was still horrendous in my opinion, and it offered a wide selection of options for successful exploitation.

As a last exercise, we can take a peek at the crash counts divided by the Qmage format version:

We can see that a large number of bugs were found in the oldest QMv1 format, however it is not as useful in attacks as the rest, because it is not correctly supported in all contexts on Android. What I find most interesting here is the rising trend in the number of crashes between QG1.0, 1.1 and 2.0, likely correlated with the growing complexity of the codec. In particular, the latest QG2.0 format introduced in Android 10 added as many issues as there had been in 1.0 + 1.1 altogether! And while there was no shortage of vulnerabilities even in Android 9, the new attack surface certainly worked in my favor as a researcher looking to exploit the codec. I'll get ahead of myself and admit that I did use a flaw in the QG2.0 format in my final MMS exploit, which will be discussed in later parts of this series.

What's next?

At this point of the story, it was the beginning of February and I had just reported the crashes to Samsung. I knew that Qmage was a zero-click attack surface reachable through MMS. What's more, I ran some of the samples from the "write" category through the Gallery and My Files apps, to see if any of them would trigger any promising faults. After a few tests, I stumbled upon the following crash in logcat:

The file explorer crashed while trying to execute code from an invalid 0x4a4a4a4a4a4a4a address, which was almost conclusive evidence that the vulnerability could be exploited to execute arbitrary code. This gave me an extra motivational boost to try to write an MMS exploit for a Samsung flagship phone with the then-latest firmware build. As someone relatively new to the Android ecosystem, it was a great opportunity for me to get better acquainted with the system's security model, existing mitigations, and the current state of the art of exploitation. In Project Zero, we often take part in such offensive exercises to put ourselves in the attacker's shoes. Our vulnerability research and exploit development work leads to structural security improvements, and better drives our and the wider security community's defense efforts.

I had previously been able to find answers to most of my questions regarding the history and inner workings of Qmage, but trying to exploit it generated a completely new set of doubts and challenges I had to face. Some of them were familiar to me as a security engineer, but others seemed completely new:

- Which bug(s) provided the most powerful primitives, while also being relatively easy to understand and work with?

- What objects in memory could be reliably overwritten, and how could they be used to achieve anything useful?

- How to remotely bypass Android ASLR in a constrained MMS environment which mostly works as a one-way communication channel?

- How to keep the Messages app up and running despite triggering repeated crashes?

It took me a few months of experimentation and trial and error to arrive at satisfactory solutions to these problems. In the end, I managed to get all of the moving parts to work together well enough to construct the interaction-less attack. In an attempt to give some structure to the somewhat chaotic process I went through, my next blog post will focus on finding the optimal heap corruption primitive to act as the foundation of any higher-level mechanisms employed by the exploit.
