Over The Air - Vol. 2, Pt. 3: Exploiting The Wi-Fi Stack on Apple Devices

Posted by Gal Beniamini, Project Zero

In this blog post we’ll complete our goal of achieving remote kernel code execution on the iPhone 7, by means of Wi-Fi communication alone.

After developing a Wi-Fi firmware exploit in the previous blog post, we are left with the task of using our newly acquired access to gain control over the XNU kernel. To this end, we’ll begin by investigating the isolation mechanisms present on the iPhone. Next, we’ll explore the ways in which the host interacts with the Wi-Fi chip, identify several attack surfaces, and assess their corresponding security properties. Finally, we’ll discover multiple vulnerabilities and proceed to develop a fully-functional reliable exploit for one of them, allowing us to gain control over the host’s kernel.

All the vulnerabilities presented in this blog post (#1, #2, #3, #4, #5, #6, #7) were reported to Apple and subsequently fixed in iOS 11. For an analysis of other affected devices in the Apple ecosystem, see the corresponding security bulletins.

Hardware Isolation

PCIe DMA

Broadcom’s Wi-Fi chips are present in a wide range of platforms, including mobile phones, IoT devices and Wi-Fi routers. To accommodate this variance, each chip must be sufficiently configurable, supporting several different interfaces for vendors wishing to integrate the chip into their platform. Indeed, Cypress’s data sheets include a wide range of supported interfaces, including PCIe, SDIO and USB.

While choosing the interface with which to integrate the chip may seem inconsequential, it could have far ranging security implications. Each interface comes with different security guarantees, affecting the degree to which the peripheral may be “isolated” from the host. As we’ve already demonstrated how the Wi-Fi chip’s security can be subverted by remote attackers, it’s clear that providing isolation is crucial in sufficiently safeguarding the host.

From a security perspective, both SDIO and USB (up to 3.1) inherently offer some degree of isolation. SDIO solely enables the serial transfer of information between the host and the target device. Similarly, USB allows the transfer of “packets” between peripherals and the host. Broadly speaking, both interfaces can be thought of as facilitating an explicit communication channel between the host and the peripheral. All the data transported through these interfaces must be explicitly handled by either peer, by inspecting incoming requests and responding accordingly.

PCIe operates using a different paradigm. Instead of communicating with the host using a communication protocol, PCIe allows peripherals to gain Direct Memory Access (DMA) to the host’s memory. Using DMA, peripherals may autonomously prepare data structures within the host’s memory, only signalling the host (via a Message Signalled Interrupt) once there’s processing to be done. Operating in this manner allows the host to conserve computing resources, as opposed to protocols that require processing to transfer data between endpoints or to handle each individual request.

Efficient as this approach may be, it also raises some challenges with regards to isolation. First and foremost, how can we be guaranteed that malicious peripherals won’t abuse this access in order to attack the host? After all, in the presence of full control over the host’s memory, subverting any program running on the host is trivial (for example, peripherals may freely modify a program’s stack, alter function pointers, overwrite code -- all unbeknownst to the host itself).

Luckily, this issue has not gone unaddressed. Sufficient isolation for DMA-capable components can be achieved by partitioning the visible memory space available to the peripheral using a dedicated hardware component - an I/O Memory Management Unit (IOMMU).

IOMMUs facilitate a memory translation service for peripherals, converting their addressable memory ranges (referred to as “IO-Space”) into ranges within the host’s Physical Address Space (PAS). Configuring the IOMMU’s translation tables allows the host to selectively control which portions of its memory are exposed to each peripheral, while safeguarding other ranges against potentially malicious access. Consequently, the bulk of the responsibility for providing sufficient isolation lies with the host.

Returning to the issue at hand, as we are focusing on the Wi-Fi stack present within Apple’s ecosystem, an immediate question springs to mind -- which interfaces does Apple leverage to connect the Wi-Fi chip to the host? Inspecting the Wi-Fi firmware images present in several generations of Apple devices reveals that since the iPhone 6 (inclusive), Apple has opted for PCIe to connect the Wi-Fi chip to the host. Older models, such as the iPhone 5c and 5s, relied on a USB interface instead.

Due to the risks highlighted above, it is crucial that recent iPhones utilise an IOMMU to isolate themselves from potentially malicious PCIe-connected Wi-Fi chips. Indeed, during our previous research into the isolation mechanisms on Android devices, we discovered that no isolation was enforced in two of the most prominent SoCs; Qualcomm’s Snapdragon 810 and Samsung’s Exynos 8890, thereby allowing the Wi-Fi chip to freely access the host’s memory (leading to complete compromise of the device).

Inspecting the DMA Engine

To gain some visibility into the isolation capabilities present on the iPhone 7, we’ll begin by exploring the Wi-Fi firmware itself. If a form of isolation is present, the memory ranges used by the Wi-Fi SoC to perform DMA operations and those utilised by the host would be disparate. Conversely, if we happen to find the same ranges of physical addresses, that would hint that no isolation is taking place.

Luckily, much of the complexity involved in reverse-engineering the firmware’s DMA functionality can be forgone, as Broadcom’s SoftMAC drivers (brcm80211) contain the majority of the code used to interface with the SoC’s DMA engine.

Each DMA engine facilitates transfers in a single direction between two endpoints; one representing the Wi-Fi firmware, and another denoting either an internal core within the Wi-Fi SoC (such as when interacting with the RX or TX FIFOs) or the host itself. As we are interested in inspecting the memory ranges used for transfers originating in the Wi-Fi chip and terminating at the host, we must locate the DMA engine responsible for “dongle-to-host” memory transfers.

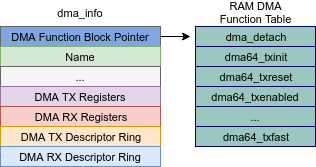

As it happens, this task is rather straightforward. Each “dma_info” structure in the firmware (representing a DMA engine) is prefixed by a pointer to a block of DMA-related function pointers stored in the firmware’s RAM. Since the block is placed at a fixed address, we can locate all instances of the structure by searching for the pointer within the firmware’s RAM. For each instance we come across, inspecting the “name” field encoded in the structure should allow us to deduce the identity of the DMA engine in question.

Combining these two tidbits, we can quickly locate each DMA engine in the firmware’s RAM:

The first few instances clearly relate to internal DMA engines. The last instance, labeled “H2D”, indicates “host-to-dongle” memory transfers. Therefore, by elimination, the single entry left must correspond to transfers from the dongle to the host (sneakily left unnamed!).
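The search described above can be sketched as a simple scan over a RAM dump. This is only a minimal sketch: the helper name `find_pointer_refs`, the flat dump layout, and the assumption that the pointer is stored as a 4-byte-aligned little-endian word are ours, and are not taken from the firmware itself.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Read a 32-bit little-endian word from a byte buffer. */
static uint32_t read_le32(const uint8_t *p) {
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/*
 * Scan a firmware RAM dump for 4-byte-aligned words equal to `needle`
 * (the fixed address of the DMA function-pointer block). Up to `max_hits`
 * offsets are stored in `hits`; the total number of matches is returned.
 */
size_t find_pointer_refs(const uint8_t *ram, size_t ram_len, uint32_t needle,
                         size_t *hits, size_t max_hits) {
    assert(ram != NULL && (hits != NULL || max_hits == 0));
    size_t count = 0;
    for (size_t off = 0; off + 4 <= ram_len; off += 4) {
        if (read_le32(ram + off) == needle) {
            if (count < max_hits) hits[count] = off;
            count++;
        }
    }
    return count;
}
```

Each returned offset is a candidate start of a “dma_info” structure; reading the “name” field at the appropriate offset from there would then identify the engine.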

Having located the engine, all that remains is to dump the RX descriptor ring and extract the addresses to which DMA transfers are performed. Unfortunately, descriptors are rapidly consumed after being inserted into the corresponding rings, replacing their contents with generic placeholder values. Therefore, observing the value of a non-consumed descriptor from a single memory snapshot is tricky. Instead, to extract “fresh” descriptors, we’ll insert a hook on the DMA transfer function, allowing us to dump descriptor addresses before they are inserted into the corresponding rings.

After inserting the hook, we are presented with the following output:

All of the descriptor addresses appear to be 32-bits wide...

How do the above addresses relate to our knowledge of the physical address space on the iPhone 7? The DRAM’s base address in the host’s physical address space is denoted by the “gPhysBase” variable (stored in the kernel’s BSS). Reading this value from our research platform will allow us to determine whether the DMA descriptor addresses correspond to host-side physical ranges:

Ah-ha! The iPhone 7’s DRAM is based at 0x800000000 -- an address beyond a 32-bit range.

Therefore, some form of conversion is taking place between the ranges visible to the Wi-Fi chip (IO-Space) and those corresponding to the host’s physical address space. To locate the root cause of this conversion, let’s shift our attention back towards the host.
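The reasoning above boils down to a range check, sketched below. The DRAM base is the value quoted in the text; the helper names and the `dram_size` parameter are hypothetical and only serve the illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* DRAM base in the host's physical address space, as read from gPhysBase. */
#define DRAM_BASE 0x800000000ULL

/* True if `addr` can be stored in a 32-bit DMA descriptor field. */
bool fits_in_32_bits(uint64_t addr) { return addr <= UINT32_MAX; }

/*
 * True if `addr` lies inside host DRAM. `dram_size` is supplied by the
 * caller (an assumption of this sketch, not a value from the text).
 */
bool is_host_dram_address(uint64_t addr, uint64_t dram_size) {
    assert(dram_size > 0);
    return addr >= DRAM_BASE && addr - DRAM_BASE < dram_size;
}
```

Since no address at or above `DRAM_BASE` fits in 32 bits, a 32-bit descriptor address can never be a raw DRAM physical address.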

DART

The host and the Wi-Fi chip communicate with one another using a protocol designed by Broadcom, dubbed “MSGBUF”. Using the protocol, both endpoints are able to transmit and receive control messages, as well as traffic, through a set of “message rings”. Each ring is stored within the host’s memory, but is also made accessible to the firmware through DMA.

Since the rings must be accessible through DMA to the Wi-Fi chip, locating the code responsible for their initialisation might shed some light on the process through which their physical addresses are converted to the DMA-accessible addresses we encountered in the firmware’s DMA descriptors.

Reverse-engineering AppleBCMWLANBusInterfacePCIe, we quickly arrive at the function responsible for initialising the IPC structures utilised by the Wi-Fi chip and the host, including the aforementioned rings:

    void* init_ring(void* this, uint64_t alignment, IOMapper* mapper, ...) {
      ...
      IOOptionBits options = kIOMemoryTypeVirtual | kIODirectionOutIn;
      IOBufferMemoryDescriptor* desc =
          ...
              options,
              capacity,
              alignment);
      ...
              IODMACommand::OutputLittle64, //outSegFunc
              0,                            //numAddressBits
              0,                            //maxSegmentSize
              0,                            //mappingOptions
              0,                            //maxTransferSize
              1,                            //alignment
              mapper,                       //mapper
              0);                           //refCon
      ...
    }

function 0xFFFFFFF006D1C074

As we can see above, the function utilises I/O Kit APIs to manage and map DMA-capable descriptors.

Upon closer inspection, we can see that IODMACommand defers the actual mapping operations to the provided IOMapper instance (“mapper” in the snippet above). However, as luck would have it, the same “mapper” object is stored within the “PCIe object” we identified in the first part of our research. Therefore, we can proceed to extract the IOMapper instance and begin tracing through its associated code paths.

While the source code for IOMapper is available in the open-sourced portions of XNU, it does not perform any actual mapping operations, but rather delegates them to the “System Mapper” - a globally registered IOMapper instance. Since no concrete subclasses of IOMapper are present in the open-sourced portions of XNU, we can assume that a specialised subclass, performing the actual mapping implementation, exists in one of the proprietary KEXTs.

Indeed, following the extracted IOMapper’s virtual table, we arrive at the IODARTMapper class, under com.apple.driver.IODARTFamily -- it seems a specialised IOMapper is used after all!

Before we continue down the rabbit hole, let’s take a step back and assess the situation. According to Apple’s documentation, DART stands for “Device Address Resolution Table” -- a hardware component integrated into the memory controller, whose purpose it is to provide a separate address space mapping for 32-bit PCI peripherals. DART allows the system to map physical addresses beyond the 32-bit range to peripherals, and to provide fine-grained control over exposed memory ranges to each device. In short, this is none other than a proprietary IOMMU designed by Apple!

Digging deeper into IODARTMapper, we find iovmInsert; the entry point for inserting new IO-Space translations through a mapper. Passing through several more layers of indirection, we finally arrive at an instance of AppleS5L8960XDART.

The latter object originates in a different driver; com.apple.driver.AppleS5L8960XDART. It appears we’re getting closer to the bare-metal DART implementation for the SoC! Oddly, the driver references “S5L8960X”; the product code for the Apple A7 SoC (used in older iPhones, such as the 5s). Perhaps this artefact suggests that the same DART implementation has been used in prior SoC revisions.

Taking a closer look at AppleS5L8960XDART, we quickly come across a function of particular interest. This function performs many bit shifts and masks, much like we’d expect from translation-table management code. After spending some time familiarising ourselves with the code, we come to the realisation that the function is responsible for populating DART’s translation tables! Here is a high-level representation of the relevant code:

    void* create_descriptors(void* this, uint64_t table_index,
                             uint32_t start_pfn, uint32_t map_size, ...) {

      ... //Validate input arguments, acquire mutex
      void** dart_table = ((void***)(this + 312))[table_index];
      uint32_t end_pfn = start_pfn + map_size;

      //Populating each L0 descriptor in the range
      uint32_t l0_start_idx = (start_pfn >> 18) & 0x3;
      uint32_t l0_end_idx = (end_pfn >> 18) & 0x3;

      for (uint32_t l0_idx = l0_start_idx; l0_idx <= l0_end_idx; l0_idx++) {

        //Creating the L1 table if it doesn’t already exist
        struct l1_table_t* l1_table = (struct l1_table_t*)(dart_table[l0_idx]);
        if (!l1_table) {
          l1_table = allocate_l1_table(this);
          dart_table[l0_idx] = l1_table;
          ...
          uint64_t l0_desc = ((table_phys >> 12) & 0xFFFFFF) | 0x80000000;
          ...
          set_l0_desc(this, table_index, l0_idx, l0_desc);
        }

        //Calculating the range of L1 descriptors to populate
        uint32_t l1_start_idx = (l0_idx == l0_start_idx) ?
                                    (start_pfn >> 9) & 0x1FF : 0;
        uint32_t l1_end_idx = (l0_idx == l0_end_idx) ?
                                    (end_pfn >> 9) & 0x1FF : 511;

        //Populating each L1 descriptor in the range
        for (uint32_t l1_idx = l1_start_idx; l1_idx <= l1_end_idx; l1_idx++) {

          //Creating the L2 table if it doesn’t already exist
          struct l2_table_t* l2_table;
          l2_table = (struct l2_table_t*)l1_table->l2_tables[l1_idx];
          if (!l2_table) {
            l2_table = allocate_l1_desc(this);
            l1_table->l2_tables[l1_idx] = l2_table;
            ...
            l1_table->descriptors[l1_idx] = (table_phys & 0xFFFFFF000) | 3;
            ...
          }
        }
      }
      ... //Release mutex
    }

    struct l1_table_t {
      IOBufferMemoryDescriptor* desc;    //Descriptor holding L1 table
      uint64_t* descriptors;             //Kernel VA ptr to L1 descs
      struct l2_table_t* l2_tables[512]; //L2 descriptors within this table
    };

    struct l2_table_t {
      IOBufferMemoryDescriptor* desc;    //Descriptor holding L2 table
      uint64_t* descriptors;             //Kernel VA ptr to L2 descs
      uint64_t unknown;
    };

function 0xFFFFFFF0065978F0

Alright! Let’s take a moment to unpack the above function.

For starters, it appears that DART utilises a 3-level translation regime. The first level is capable of holding up to four descriptors, while each subsequent level holds 512 descriptors. Since DART uses a 4KB translation granule, we can deduce that each L2 table maps 0x200000 bytes (512 x 4KB) into IO-Space, while each L1 table maps up to 0x40000000 bytes (512 x 0x200000).
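The arithmetic above can be checked with a short sketch. The L0 and L1 index extraction mirrors the shifts and masks in the listing; the L2 index (the low 9 bits of the frame number) does not appear in the listing and is our assumption, as are all the names used here.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT 12u   /* 4KB translation granule */
#define LEVEL_BITS 9u    /* 512 descriptors per L1/L2 table */

struct dart_indices { uint32_t l0, l1, l2; };

/* Split an IO-Space page frame number into per-level table indices. */
struct dart_indices split_pfn(uint32_t pfn) {
    /* 4 * 512 * 512 pages are addressable; wider values would alias. */
    assert(pfn < (1u << 20));
    struct dart_indices idx;
    idx.l0 = (pfn >> (2 * LEVEL_BITS)) & 0x3;
    idx.l1 = (pfn >> LEVEL_BITS) & 0x1FF;
    idx.l2 = pfn & 0x1FF;   /* assumed; not shown in the listing */
    return idx;
}

/* Bytes of IO-Space covered by a single L2 table and a single L1 table. */
uint64_t l2_table_span(void) { return (1ULL << LEVEL_BITS) << PAGE_SHIFT; }
uint64_t l1_table_span(void) { return l2_table_span() << LEVEL_BITS; }
```

With four L0 descriptors, the full hierarchy therefore spans at most 4 x 0x40000000 bytes, which matches a 32-bit IO-Space.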

In addition to the 3-level regime specified above, DART holds four “base descriptors”. Unlike regular descriptors, these are not indexed by bits in the IO-Space address, but are instead referenced explicitly using a parameter provided by the caller.

Drawing on our knowledge of PCIe, we can speculate on the nature of these “base descriptors”. Perhaps each DART can facilitate mappings for several different PCI peripherals on the same bus, where each “base descriptor” corresponds to one such device (based on the “Requester-ID” encoded in the incoming TLP)? Whether or not this is the case, dumping the “base descriptors” in the DART instance corresponding to the Wi-Fi chip reveals that only the first descriptor is populated in our case.

In order to access the DART mappings, two distinct sets of data structures are utilised in tandem; a set of “convenience” structures which map the translation hierarchy into high-level objects within the kernel’s virtual address space, and another set holding the descriptors themselves, which are linked together based on physical addresses. The former set is used by the kernel to conveniently locate and modify DART’s mappings, while the latter is used by DART’s hardware to perform the actual IO-Space translations.

Looking more closely at the descriptors, it appears that the translation format utilised by DART is proprietary, and does not match the formats present in the ARM VMSA (including those utilised by SMMUs). Nonetheless, we can deduce the descriptors’ composition by inspecting the code above, which constructs and populates descriptors across the translation hierarchy.

L0 descriptors encode the physical frame number (using a 4KB translation granule) corresponding to the next level table in the lower bits, and set bit 31 (0x80000000) to indicate a valid entry. L1 and L2 descriptors, on the other hand, use the bottom two bits to indicate validity (setting both bits denotes a valid entry, other combinations result in translation faults), while the top bits store the physical address of either the next translation table or of the 4KB region mapped into IO-Space.
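To make the format concrete, here is a minimal sketch of encoding and decoding helpers. The masks are copied from the listing; the function names are ours, and the page-alignment check on table addresses is an assumption rather than something we observed in the driver.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define L0_VALID     0x80000000ULL    /* bit 31 marks a valid L0 entry */
#define L0_PFN_MASK  0xFFFFFFULL      /* frame number of the L1 table */
#define LX_VALID     0x3ULL           /* both low bits set: valid L1/L2 entry */
#define LX_ADDR_MASK 0xFFFFFF000ULL   /* 4KB-aligned physical address */

/* Build an L0 descriptor pointing at the L1 table at `table_phys`. */
uint64_t make_l0_desc(uint64_t table_phys) {
    assert((table_phys & 0xFFF) == 0);   /* assumed: tables are page aligned */
    return ((table_phys >> 12) & L0_PFN_MASK) | L0_VALID;
}

/* Build an L1 or L2 descriptor pointing at `phys`. */
uint64_t make_lx_desc(uint64_t phys) { return (phys & LX_ADDR_MASK) | LX_VALID; }

bool l0_is_valid(uint64_t desc) { return (desc & L0_VALID) != 0; }
bool lx_is_valid(uint64_t desc) { return (desc & LX_VALID) == LX_VALID; }

/* Recover the physical address encoded in a descriptor. */
uint64_t l0_table_phys(uint64_t desc) { return (desc & L0_PFN_MASK) << 12; }
uint64_t lx_target_phys(uint64_t desc) { return desc & LX_ADDR_MASK; }
```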

Lastly, we must deduce IO-Space’s base address to complete our analysis of DART’s translation format. Drawing on our previous encounter with IO-Space addresses stored in the DMA descriptors within the Wi-Fi firmware, all the addresses appeared to be based at address 0x80000000. As such, it seems like a fair assumption that IO-Space mappings for the Wi-Fi chip begin at the aforementioned address.

Combining all of the information above, let’s build a module in our research platform to interact with the DART instance. The module will analyse DART’s translation tables, following the hierarchy described above. By analysing the translation tables, we can subsequently hold a mapping between IO-Space addresses and their corresponding physical ranges within the host’s PAS. Furthermore, we can invert the tables in order to produce a PAS to IO-Space mapping. Using these two mappings we can subsequently convert IO-Space addresses to physical addresses, and vice versa.
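A table walk of this kind might look as follows. This is a simplified model rather than the module itself: plain C pointers stand in for the physical links between tables, all structure and function names are hypothetical, and we assume that the IO-Space base is subtracted before the address is split into indices.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define ENTRIES  512u
#define LX_VALID 0x3ULL
#define LX_ADDR  0xFFFFFF000ULL

/* Simplified model: pointers replace the physical table addresses. */
struct l2_table { uint64_t desc[ENTRIES]; };
struct l1_table { struct l2_table *l2[ENTRIES]; };
struct dart     { struct l1_table *l1[4]; uint64_t io_base; };

/* Translate an IO-Space address; returns false on a translation fault. */
bool dart_translate(const struct dart *d, uint64_t io_addr, uint64_t *phys) {
    assert(d != NULL && phys != NULL);
    if (io_addr < d->io_base) return false;
    uint64_t pfn = (io_addr - d->io_base) >> 12;
    if ((pfn >> 18) > 3) return false;            /* beyond the four L0 slots */
    const struct l1_table *l1 = d->l1[pfn >> 18];
    if (!l1) return false;
    const struct l2_table *l2 = l1->l2[(pfn >> 9) & 0x1FF];
    if (!l2) return false;
    uint64_t desc = l2->desc[pfn & 0x1FF];
    if ((desc & LX_VALID) != LX_VALID) return false;
    *phys = (desc & LX_ADDR) | (io_addr & 0xFFF);
    return true;
}

/* Inverse lookup: find the first IO-Space address that maps `phys`. */
bool dart_reverse(const struct dart *d, uint64_t phys, uint64_t *io_addr) {
    assert(d != NULL && io_addr != NULL);
    for (uint64_t i0 = 0; i0 < 4; i0++) {
        const struct l1_table *l1 = d->l1[i0];
        if (!l1) continue;
        for (uint64_t i1 = 0; i1 < ENTRIES; i1++) {
            const struct l2_table *l2 = l1->l2[i1];
            if (!l2) continue;
            for (uint64_t i2 = 0; i2 < ENTRIES; i2++) {
                uint64_t desc = l2->desc[i2];
                if ((desc & LX_VALID) != LX_VALID) continue;
                if ((desc & LX_ADDR) != (phys & LX_ADDR)) continue;
                uint64_t pfn = (i0 << 18) | (i1 << 9) | i2;
                *io_addr = d->io_base + (pfn << 12) + (phys & 0xFFF);
                return true;
            }
        }
    }
    return false;
}
```

A real implementation would read each table through the kernel's memory rather than follow C pointers, and would likely cache the inverted mapping instead of rescanning the hierarchy on every reverse lookup.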

Finally, in addition to inspecting IO-Space, our DART module also allows us to manipulate IO-Space, by introducing new mappings into IO-Space containing whichever physical address we desire.

At long last, we can test whether our deductions regarding DART’s structure are indeed valid. First, let’s extract the DART instance corresponding to the Wi-Fi chip. Then, using this object, we can proceed to dump the entire mapping between IO-Space addresses and their corresponding physical ranges by following DART’s translation hierarchy:

Great! The first few mappings appear sane -- each IO-Space address is translated into a corresponding physical range well within the host’s PAS. Moreover, we can see that our assumption regarding DART’s translation granule holds, as some mapped physical addresses are within a 4KB range from one another.

To be absolutely certain that our assessment is valid, let’s perform another short experiment. We’ll map-in an unused IO-Space address, pointing it at a physical address corresponding to “spare” data within the kernel’s BSS. Next, using the DMA hook we inserted previously, we’ll direct unconsumed DMA descriptors at the newly mapped IO-Space address. By doing so, subsequent DMA transfers should arrive at our chosen BSS address.

After inserting the hook and monitoring the mapped BSS range (by reading it through the kernel’s VAS), we are presented with the following result:

Awesome! We managed to DMA into an arbitrary physical address within the kernel’s BSS, thus confirming that our understanding of DART is correct.

Exploring DART

Using our newly acquired control over IO-Space, we can proceed to conduct a few experiments.

For starters, it would be interesting to see whether the kernel integrity mechanisms present on the iPhone 7 (“KTRR”, previously referred to as “AMCC”), still hold in the presence of malicious DMA attempts from the Wi-Fi chip. To find out, we’ll map each of the protected physical ranges (the kernel’s code segments, read-only segments, etc.) into IO-Space, insert the DMA hook, and observe their contents to see whether they were successfully modified.

Unsurprisingly, each attempt to DMA into a protected region results in a fault being raised, subsequently triggering a kernel panic and crashing the device. Attempting to DMA into the KTRR’s hardware registers storing protected region ranges similarly fails -- once the lockdown occurs, no modification of the registers is permitted.

Continuing our analysis of DART, let’s consider another edge-case scenario: assume two adjacent IO-Space mappings correspond to non-contiguous ranges of physical memory. In such a case, should DMA operations crossing the boundary between the two IO-Space ranges be permitted? If so, should the data be split across the corresponding physical ranges? Or should the transfer instead only utilise the first physical range?

To find out, we’ll conduct another experiment. First, we’ll create two IO-Space mappings pointing at disparate regions in the Kernel’s BSS. Then, using the DMA engine, we’ll initiate a transfer crossing the boundary between the two IO-Space addresses.

Running the above experiment and monitoring the resulting addresses through the kernel’s VAS, we are presented with a positive result -- DART correctly splits the transaction into the two corresponding physical ranges, thus never exceeding any of the mapped-in regions’ bounds.
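The observed behaviour is consistent with each 4KB page of a transfer being translated independently. The sketch below models that splitting in software; it illustrates the effect we measured and makes no claim about how DART implements it in hardware. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SIZE 0x1000ULL

struct dma_chunk { uint64_t io_addr; uint64_t len; };

/*
 * Split [io_addr, io_addr + len) into chunks that never cross a 4KB page
 * boundary, so that each chunk can be translated on its own.
 * Returns the number of chunks written (at most `max_chunks`).
 */
size_t split_at_page_boundaries(uint64_t io_addr, uint64_t len,
                                struct dma_chunk *out, size_t max_chunks) {
    assert(out != NULL || max_chunks == 0);
    size_t n = 0;
    while (len > 0 && n < max_chunks) {
        uint64_t room = PAGE_SIZE - (io_addr & (PAGE_SIZE - 1));
        uint64_t take = len < room ? len : room;
        out[n].io_addr = io_addr;
        out[n].len = take;
        n++;
        io_addr += take;
        len -= take;
    }
    return n;
}
```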

So far, so good.

PCIe Configuration Space

Continuing our investigation of DART, we arrive at another query -- how does DART perform context determination? Namely, how does DART differentiate between the components issuing the memory access requests?

Depending on DART’s architecture, several solutions to this question exist. If each DART is assigned to a single component or a single PCIe bus, no identification is needed, as it can simply funnel all operations from that origin through its translation mechanism. Alternatively, if several PCIe components exist on the bus to which DART is assigned, it could utilise the “Requester ID” (RID) field in the PCIe TLP to identify the originating component.

Using the RID for context determination is not risk-free, as malicious PCIe components may attempt to “spoof” the contents of their TLPs. To deal with such scenarios, PCIe introduced Access Control Services (ACS), allowing PCIe switches to perform routing decisions, including disallowing transfer of certain TLPs based on their encompassed IDs. As we are not aware of the PCIe topology on the iPhone, it remains unknown whether such a configuration is needed (or used).

With regard to control over the PCIe TLPs, Broadcom’s Wi-Fi chips expose much of the PCIe Core’s functionality to the Wi-Fi firmware by mapping the core’s registers through a fixed backplane address. Previous Broadcom SoC revisions, which incorporated PCIe Gen 1 cores, allowed access to several “diagnostic” registers (via pcieindaddr / pcieinddata), which govern the physical (PLP), data link (DLLP) and transaction (TLP) layers of PCIe. Regardless, it is unknown whether this mechanism allows modification of the RID, or indeed whether this form of access is still present in current-gen Broadcom hardware.

Nevertheless, standardised PCIe mechanisms exist which may also affect the RID’s composition. For instance, PCIe 3.0 introduced Alternate Routing-ID Interpretation (ARI), which modifies the encoding of the RID, eliminating the “device” field while expanding the “function” field to 8 bits.
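The difference between the two encodings is easiest to see side by side. The field layout below follows the PCIe definition of the 16-bit Requester ID (bus, device, function); the structure and function names are ours.

```c
#include <assert.h>
#include <stdint.h>

struct rid_fields { uint8_t bus; uint8_t device; uint8_t function; };

/* Conventional encoding: bus[15:8], device[7:3], function[2:0]. */
uint16_t encode_rid(uint8_t bus, uint8_t device, uint8_t function) {
    assert(device < 32 && function < 8);
    return (uint16_t)((bus << 8) | (device << 3) | function);
}

struct rid_fields decode_rid(uint16_t rid) {
    struct rid_fields f;
    f.bus = (uint8_t)(rid >> 8);
    f.device = (uint8_t)((rid >> 3) & 0x1F);
    f.function = (uint8_t)(rid & 0x7);
    return f;
}

/* ARI encoding: bus[15:8], function[7:0]; there is no device field. */
struct rid_fields decode_rid_ari(uint16_t rid) {
    struct rid_fields f;
    f.bus = (uint8_t)(rid >> 8);
    f.device = 0;
    f.function = (uint8_t)(rid & 0xFF);
    return f;
}
```

The same 16-bit value is therefore interpreted differently depending on whether ARI is enabled, which is why the feature is relevant to any context determination based on the RID.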

While normally the PCIe Configuration Space is accessed through the host, Broadcom’s Wi-Fi SoC exposes the configuration space within the Wi-Fi SoC, through a pair of backplane registers corresponding to the PCIe Core (configaddr / configdata). Using these registers, the Wi-Fi firmware can not only read the PCIe Configuration Space, but also modify values within it. Like many advanced PCIe features, ARI is exposed in the configuration space through an “extended capability” blob; therefore, if ARI is supported by the PCIe core, we could utilise our access to the configuration space to enable the feature from the Wi-Fi firmware.

To determine whether such capabilities are present in the PCIe core, we’ll produce a dump of the configuration space (using the aforementioned register pair). After doing so, we can simply reorganise the contents in a format legible to lspci, and instruct it to parse the given data, producing a human-readable representation of the features supported by the PCIe core:

Scanning through the above capabilities, it appears that none of the “advanced” PCIe features (such as ARI) are supported by the PCIe core.
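For readers who would rather not rely on lspci, the same check can be sketched directly against a 4KB configuration space dump. The layout follows the PCIe extended capability format (list starting at offset 0x100, capability ID in bits 15:0 of each header, next offset in bits 31:20), and 0x000E is the capability ID assigned to ARI. The function names and the flat dump buffer are assumptions of this sketch.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define CFG_SPACE_SIZE 4096u
#define EXT_CAP_START  0x100u
#define EXT_CAP_ID_ARI 0x000Eu

/* Read a 32-bit little-endian register from the dump. */
static uint32_t cfg_read32(const uint8_t *cfg, uint32_t off) {
    return (uint32_t)cfg[off] | ((uint32_t)cfg[off + 1] << 8) |
           ((uint32_t)cfg[off + 2] << 16) | ((uint32_t)cfg[off + 3] << 24);
}

/* Walk the extended capability list; true if `cap_id` is present. */
bool has_ext_capability(const uint8_t *cfg, uint16_t cap_id) {
    assert(cfg != NULL);
    uint32_t off = EXT_CAP_START;
    /* Bound the number of steps so a malformed, looping list terminates. */
    for (unsigned i = 0; i < (CFG_SPACE_SIZE - EXT_CAP_START) / 4; i++) {
        if (off < EXT_CAP_START || off > CFG_SPACE_SIZE - 4 || (off & 3))
            return false;
        uint32_t hdr = cfg_read32(cfg, off);
        if (hdr == 0 || hdr == 0xFFFFFFFFu) return false;  /* empty list */
        if ((hdr & 0xFFFF) == cap_id) return true;
        off = hdr >> 20;                 /* next offset; 0 ends the list */
        if (off == 0) return false;
    }
    return false;
}
```

Calling `has_ext_capability(dump, EXT_CAP_ID_ARI)` on a dump like ours would be expected to return false, in line with the lspci output.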

Exploring IO-Space

While we’ve already determined how DART facilitates the IO-Space mapping for the Wi-Fi chip, we have yet to investigate the contents of the memory exposed through this mechanism. In order to investigate IO-Space’s contents, we’ll use a two-stage translation process; first, we’ll use our DART module to produce a mapping between the IO-Space addresses and their corresponding physical ranges. Once we obtain the mapped physical ranges, all that remains is to map these ranges into the kernel’s VAS, allowing us to subsequently dump their contents using our research platform.

As we know, the mapping from virtual to physical addresses is governed by the MMU’s translation tables. On ARMv8-A platforms (such as the iPhone 7), the ARM Virtual Memory System Architecture (VMSA) specifies the format of the translation tables utilised by the ARM MMU. Like any XNU task, the kernel’s translation tables are accessible through its task_t structure (exported through its data segment). Following the entries in the task structure, we arrive at its pmap, holding the translation tables.

Using our new module, we can now perform translations between the virtual addresses in the kernel’s VAS and physical ones. Furthermore, we can invert the translation table, producing a (one-to-many) mapping from physical to virtual addresses. In tandem with our DART module, this allows us to take each IO-Space address, convert it to a physical address, and then use our inverted translation table to convert it back to a virtual address in the kernel’s VAS.

Consequently, we can now iterate over the entire IO-Space exposed to the Wi-Fi chip, extracting the contents of every mapped region:

After producing a copy of the entire contents of IO-Space, we can now comb through it, searching for any “accidental” mappings that might be beneficial for a would-be attacker present on the Wi-Fi chip.

For starters, recall that the kernel protects itself against remote attackers by utilising KASLR. This mitigation introduces a randomised “slide” value, which is added to the kernel’s base loading address (both virtual and physical). Since many exploits rely on the ability to pre-calculate addresses within the kernel’s VAS, such a mitigation may slow down attackers, or hinder the reliability of exploits targeting the kernel.

However, as the same “slide” value is applied globally, it is often the case that a single “leaked” kernel VAS address results in a KASLR bypass (allowing attackers to deduce the slide’s value). Therefore, if any kernel virtual address is accidentally leaked in an IO-Space mapped page, the Wi-Fi chip may be able to similarly subvert KASLR.
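The calculation an attacker would perform is a single subtraction, sketched below. The function names are hypothetical; the unslid address would come from static analysis of the kernel image, and the leaked one from whichever disclosure is available.

```c
#include <assert.h>
#include <stdint.h>

/* Slide = runtime address of a symbol minus its address in the unslid image. */
uint64_t kaslr_slide(uint64_t leaked_addr, uint64_t unslid_addr) {
    assert(leaked_addr >= unslid_addr);
    return leaked_addr - unslid_addr;
}

/* Apply a recovered slide to any other address taken from the image. */
uint64_t slide_address(uint64_t unslid_addr, uint64_t slide) {
    return unslid_addr + slide;
}
```

Once the slide is known, every other symbol in the kernel image can be located by adding it to the symbol's static address.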

Apart from the potential implications regarding KASLR, the presence of any kernel VAS pointer in IO-Space would be worrisome, as the pointer might be utilised by kernel code. Allowing a malicious Wi-Fi chip to corrupt its value may subsequently affect the kernel’s behaviour (perhaps even resulting in code execution).

To find out whether any kernel pointers are exposed through IO-Space, let’s scan through the extracted IO-Space pages, searching for 64-bit words corresponding to addresses within the kernel’s VAS. After going through every single page, we are greeted with a negative result; we can find no kernel VAS pointers in any IO-Space mapped page!
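Such a scan is straightforward to express in code. In this sketch the bounds of the kernel’s VAS are passed in by the caller, since the exact range is an assumption that depends on the device; the helper names are ours, and only 8-byte-aligned words are considered.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Read a 64-bit little-endian word from a byte buffer. */
static uint64_t read_le64(const uint8_t *p) {
    uint64_t v = 0;
    for (int i = 7; i >= 0; i--) v = (v << 8) | p[i];
    return v;
}

/* Count 8-byte-aligned words in `page` whose value lies within [lo, hi]. */
size_t count_pointers_in_range(const uint8_t *page, size_t len,
                               uint64_t lo, uint64_t hi) {
    assert(page != NULL && lo <= hi);
    size_t count = 0;
    for (size_t off = 0; off + 8 <= len; off += 8) {
        uint64_t word = read_le64(page + off);
        if (word >= lo && word <= hi) count++;
    }
    return count;
}
```

Any non-zero count flags a page for manual review, since a value in range may still be a coincidence rather than a genuine pointer.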

With a cursory investigation of IO-Space out of the way, we can now dig deeper, attempting to gain a better understanding of the IO-mapped contents. To this end, we’ll combine several approaches:

- Inspect each page’s contents to look for hints regarding its role
- Locate the kernel code responsible for interacting with the same IO-Space range
- Check the IO-Space address against posted addresses in the Wi-Fi firmware
- Use the Android driver as reference for any “strange” unidentified constructs

After performing the above steps, we are finally able to piece together a complete mapping of IO-Space (thus also concluding that no “accidental” mappings are present). It is important to note that since IO-Space is not subject to randomisation, the IO addresses are constant, and are not affected by the KASLR slide.

Searching For Vulnerabilities

Having explored the aspects relating to DART, IO-Space mappings, and low-level components, let’s proceed to inspect the more traditional attack surfaces exposed by the host.

Recall that the Wi-Fi chip and the host communicate with one another through a series of “rings”, mapped into IO-Space. Each ring facilitates the transfer of information in a single direction; either from the device to the host (D2H), or vice versa (H2D).

Among the messages transferred through message rings, “Control Messages” represent a rather abundant attack surface. These messages are used to instruct the firmware to perform complex state-changing operations, such as creating additional message rings, deleting them, and even transporting high-level requests (ioctls) to be processed by the firmware.

Due to their complexity, control messages rely on a bidirectional communication channel; the “Control Submit” ring (H2D) allows the host to submit the requests to the device, while the “Control Complete” ring (D2H) is used by the device to return the results back to the host.

After committing messages to the D2H rings, the Wi-Fi firmware signals the host by writing to a “MailBox” register and triggering an MSI interrupt. This interrupt is subsequently handled by the host, which inspects the MailBox register, and notifies the corresponding (D2H) rings that data may be available for processing.

Tracing through the above flow, we reach the handler function for processing incoming control messages within the host. To assist in reverse-engineering these messages, we’ll utilise Broadcom’s Android driver (bcmdhd), which contains the definitions for the control structures, as well as the message codes corresponding to each request.

AppleBCMWLANBusPCIeInterface::drainControlCompleteRing

The encapsulating handler simply reads the “message type” field, and proceeds to delegate the message’s processing to a dedicated handler -- one per message type. Going over each of the handlers, we stumble across a memory corruption bug triggerable by the firmware. Incidentally, the bug was present in a handler for a message type which isn’t available in the Android driver.

Moving on, let’s set our sights on slightly higher targets in the protocol stack. Recall that control rings are also used to carry high-level control requests from the host to the firmware, dubbed “ioctls”. Each ioctl allows the host to either set a firmware-specific configuration value, or to retrieve its current value. As this channel is quite versatile, much of the high-level interaction between the host and the firmware is enacted through this channel, including retrieving the current channel, setting network configurations, and more.

However, like any other signal originating from the device, it is important to remember that “ioctls” can be co-opted by malicious Wi-Fi firmware. After all, an attacker controlling the Wi-Fi firmware can simply hook the “ioctl” handling function, thereby allowing full control over the contents transmitted back to the host.

Reverse-engineering the high-level driver, AppleBCMWLANCore, we quickly identify the entry point responsible for issuing ioctl requests from the host to the Wi-Fi firmware. Cross referencing the function, we find nearly 500 call sites, several of which act as wrappers for common functionality, thus revealing even more originating call sites. After going over each of the aforementioned sites, we discover several memory corruptions in their corresponding handlers.

Lastly, there’s one more communication channel to consider -- Broadcom allows the in-band transmission of “event packets” from the Wi-Fi firmware to the host. These frames, denoted by a unique EtherType (0x886C), carry unsolicited events from the firmware, requiring special handling by the host. Tracing through the host’s RX path brings us to the entry point for handling such frames:

Once again, going over each handler in the above function (while using the Android driver to assist our understanding of the corresponding event codes and data structures), we discover two more vulnerabilities.

Better Vulnerabilities

Data Races?

While the vulnerabilities we just discovered allow us to trigger several forms of memory corruptions in the host (OOB writes, heap overflows), and even to leak constrained data from the host to the firmware, reliably exploiting any of them remains rather challenging.

For starters, the Wi-Fi chip has no visibility into the host’s memory (apart from the IO-Space mapped regions), and relatively little control over objects allocated within the kernel. Therefore, grooming the kernel’s memory in order to successfully launch a heap memory corruption attack would require significant effort. What’s more, this challenge is compounded by the presence of KASLR, preventing us from accurately locating the kernel’s data structures (barring any information disclosure).

Nonetheless, perhaps we can identify better primitives by digging deeper!

So far, we’ve only considered the contents of the data transferred between the host and the firmware. Effectively, we were thinking of the firmware and the host as two distinct entities, communicating with one another through an isolated communication channel. In fact, nothing could be further from the truth -- the two endpoints share a PCIe interface, allowing the firmware to perform DMA accesses at will to any IO-Space address.

One of the major risks when using a shared memory interface is the matter of timing. While the host and firmware normally synchronise their operations to ensure that no data races occur, attackers controlling the Wi-Fi firmware are bound by no such agreement. Using our control over the Wi-Fi chip, we can intentionally modify data structures within IO-Space as they are being accessed by the host. Doing so might allow us to introduce race conditions, such as TOCTTOUs, creating vulnerable conditions in otherwise safe code (under normal assumptions).

The first targets for such modification are the control messages we inspected earlier on. Inspecting the control ring handler in the host, it appears that the messages are read directly from the IO-Space mapped buffer, raising the possibility of data races in their processing. Nonetheless, going over the relevant code paths, we find no security-relevant races.

What about the second control channel we reviewed -- event packets? Perhaps we could modify a packet’s contents while it is being processed, thereby affecting the kernel’s behaviour? Once again, the answer is negative; each transferred packet is first copied from its IO-Space mapped buffer to a kernel-resident mbuf before subsequently passing it on for processing, thus eliminating the possibility of firmware-induced races.

Message Rings, Revisited

So far, we’ve inspected the high-level functionality provided by message rings, namely, the control messages transported therein. However, we’ve neglected several aspects of their operation. One implementation detail of particular note is the method through which rings allow the endpoints to synchronise their accesses to the ring.

To allow concurrent accesses by both the ring’s consumer and its corresponding producer, each ring is assigned a pair of indices: a read index specifying the location up to which the consumer has read the messages, and a write index specifying the location at which the next message will be submitted by the producer. As their name implies, each ring forms a circular buffer -- upon arriving at the last ring index, the indices simply wrap around, returning back to the ring’s base.
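The index scheme described above can be sketched in a few lines. This is only an illustration, not Apple’s or Broadcom’s code: the `Ring` type and its member names are hypothetical, and we assume one slot is always kept free so that equal indices unambiguously mean “empty”.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical single-producer / single-consumer ring; names are illustrative.
struct Ring {
    std::vector<uint32_t> slots;
    uint32_t read_index;   // next slot the consumer will read
    uint32_t write_index;  // next slot the producer will fill

    explicit Ring(uint32_t capacity)
        : slots(capacity), read_index(0), write_index(0) {
        assert(capacity >= 2);  // one slot always stays free
    }

    // Advance an index by one slot, wrapping back to the ring's base.
    uint32_t next(uint32_t index) const {
        return (index + 1) % static_cast<uint32_t>(slots.size());
    }

    // Producer side: refuses to write when that would catch up with the reader.
    bool submit(uint32_t item) {
        if (next(write_index) == read_index) return false;  // ring is full
        slots[write_index] = item;
        write_index = next(write_index);
        return true;
    }

    // Consumer side: equal indices mean there is nothing left to read.
    bool consume(uint32_t& item) {
        if (read_index == write_index) return false;  // ring is empty
        item = slots[read_index];
        read_index = next(read_index);
        return true;
    }
};
```

Note that each side only ever advances its own index; the other index is merely read. That asymmetry is what makes the sharing scheme discussed next so delicate.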

Since both endpoints must be aware of the ring indices to successfully coordinate their access, a mechanism must exist through which the indices may be shared between the two. In Apple’s case, this is achieved by mapping all the indices into IO-Space mapped buffers.

While mapping the indices into IO-Space is a convenient way to share their values, it is not risk-free. For starters, if all the above indices are mapped into IO-Space, a malicious Wi-Fi chip may not only utilise DMA access to read them, but may also be able to modify them.

This form of access is excessive -- after all, the device need only update the read indices for H2D rings, and the write indices for D2H rings. The remaining indices should, at most, be read by the device. However, as DART’s implementation is proprietary, it is unknown whether it can facilitate read-only mappings. Consequently, all of the above indices are mapped into IO-Space as both readable and writable, thus allowing a malicious Wi-Fi chip to freely alter their values.

This IO-Space-based index sharing mechanism raises an important question; what if a Wi-Fi chip were to maliciously modify a ring’s indices while the ring is being processed by the host? Would doing so introduce a race condition? To find out, let’s take a look at the function through which the host submits messages into H2D rings:

1.  void* AppleBCMWLANPCIeSubmissionRing::workloopSubmitTx(uint32_t* p_read_index,
2.                                                         uint32_t* p_write_index) {
3.
4.      //Getting the write index from the IO-Space mapped buffer (!)
5.      uint32_t write_index = *(this->write_index_ptr);
6.
7.      //Iterating until there are no more events to process
8.      while (this->getRemainingEvents(p_read_index, p_write_index)) {
9.
10.         //Calculate the next insertion address based on the write index
11.         void* ring_addr = this->ring_base + this->item_size * write_index;
12.         uint32_t max_events = this->calculateRemainingWriteSpace();
13.
14.         //Writing the current events to the ring
15.         uint32_t num_written = this->submit_func(..., ring_addr, max_events);
16.         if (!num_written)
17.             break; //No more events to process
18.
19.         //Update the write index
20.         write_index += num_written;
21.         if (write_index >= this->max_index)
22.             write_index = 0; //Wrap around
23.
24.         //Commit the new index to the IO-Space mapped buffer (!)
25.         *(this->write_index_ptr) = write_index;
26.     }
27.     ...
28. }
29.
30. class AppleBCMWLANPCIeSubmissionRing {
31.     ...
32.     uint32_t max_index;         //The maximal ring index (off 88)
33.     uint32_t item_size;         //The size of each item (off 92)
34.     uint32_t* read_index_ptr;   //IO-Space mapped read index pointer (off 174)
35.     uint32_t* write_index_ptr;  //IO-Space mapped write index pointer (off 184)
36.     void* ring_base;            //IO-Space mapped ring base address (off 248)
37. };

function 0xFFFFFFF006D36D04

Alright! Looking at the above function immediately raises some red flags…

The function appears to read values from IO-Space mapped buffers in several different locations, seemingly making no effort to coordinate the read values. This kind of pattern opens the door to the possibility of race conditions induced by the firmware.

Let’s focus on the “write index” utilised by the function. At first, the index is fetched by reading its value directly from the IO-Space mapped buffer (line 5). This same value is then used to derive the location to which the next ring item will be written (line 11). Crucially, however, the value is not used in any shape or form by the surrounding verifications utilised by the function to decide whether the current ring indices are valid (lines 8, 12).

Therefore, the verification methods must re-fetch the indices’ values, introducing a possible discrepancy between the value used during verification, and the one used to place the next item.

To exploit the above issue, an attacker controlling the Wi-Fi chip can DMA into the ring indices in order to introduce one value for the ring address calculation (line 5), while quickly switching the index to a different, valid value, for the remaining validations (lines 8, 12). If the above race is executed successfully, the following H2D item will be submitted by the host at an arbitrary attacker-controlled offset from the ring’s base, triggering an out-of-bounds write!
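To make the double-fetch pattern concrete, here is a small deterministic model of it. Nothing below is taken from the driver: `SharedIndex` is a hypothetical stand-in for an index in device-writable memory that returns one value on the first load and another on every later load, mimicking a device that rewrites the index between two host reads. The ring capacity and item size used when calling these functions are likewise arbitrary.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical model of an index stored in memory the device can rewrite:
// the first load observes one value, every later load observes another.
struct SharedIndex {
    uint32_t first_value;
    uint32_t later_value;
    unsigned loads;

    SharedIndex(uint32_t first, uint32_t later)
        : first_value(first), later_value(later), loads(0) {}

    uint32_t load() { return loads++ == 0 ? first_value : later_value; }
};

const int64_t kRejected = -1;

// Double fetch: the value that gets validated is not the value that gets used.
int64_t offset_double_fetch(SharedIndex& index, uint32_t item_size, uint32_t max_index) {
    assert(item_size > 0);
    uint32_t used = index.load();     // first fetch, later used for the address
    uint32_t checked = index.load();  // second fetch, used only for validation
    if (checked >= max_index) return kRejected;
    return static_cast<int64_t>(item_size) * used;
}

// Single fetch: take one snapshot, then validate and use that same copy.
int64_t offset_single_fetch(SharedIndex& index, uint32_t item_size, uint32_t max_index) {
    assert(item_size > 0);
    uint32_t snapshot = index.load();
    if (snapshot >= max_index) return kRejected;
    return static_cast<int64_t>(item_size) * snapshot;
}
```

In the first function a device that presents a large index on the first read and a small one afterwards passes validation while steering the computed offset far outside the ring. The second function rejects the same sequence, since the only value it ever trusts is the one it checked.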

Removing The Race Condition

While the above primitive is no doubt useful, it has one inherent downside -- performing a data race from an external vantage point may be a difficult feat, especially considering the platform we’re executing on (an ARM Cortex R) is significantly slower than the targeted one (a full-blown application processor).

Perhaps by gaining a better understanding of the primitive, we can deal with these limitations. To this end, let’s take a closer look at the validation performed by the submission function:

1.  uint32_t AppleBCMWLANPCIeSubmissionRing::calculateRemainingWriteSpace() {
2.
3.      uint32_t read_index, write_index;
4.      this->getIndices(&read_index, &write_index);
5.
6.      //Did the ring wrap around?
7.      if (read_index > write_index)
8.          return read_index - (write_index + 1);
9.      else
10.         return this->max_index - write_index + (read_index ? 0 : -1);
11. }
12.
13. void AppleBCMWLANPCIeSubmissionRing::getIndices(uint32_t* rindex,
14.                                                 uint32_t* windex) {
15.     uint32_t read_index = *(this->read_index_ptr);
16.     uint32_t write_index = *(this->write_index_ptr);
17.     if (read_index >= 0x10000 || write_index >= 0x10000)
18.         panic(...);
19.     *rindex = read_index;
20.     *windex = write_index;
21. }

Ah-ha! Looking at the code above, we can identify yet another fault.

When fetching the ring indices, the getIndices function attempts to validate their values to ensure that they do not exceed the allowed ranges. This is undoubtedly a good idea, as it prevents corrupted values from being utilised (which may result in memory corruption).

However, instead of comparing the indices against the current ring’s capacity, they are compared against a fixed maximal value: 0x10000. While this value is certainly an upper bound on the rings’ capacities, it is far from a tight bound (in fact, most rings only hold several hundred items at most).

Therefore, observing the code above we reach two immediate conclusions. First, if we were to attempt a race condition whereby the ring index is modified to a value larger than the fixed bound (0x10000), we run the risk of triggering a kernel panic should the race attempt fail (line 18). More importantly, however, modifying the write index to any value below the fixed bound (but still above the actual ring’s bounds), will allow us to pass the validations above, resulting in an out-of-bounds write with no race-condition required.

Using the above primitive, we can target any H2D ring, causing the next element to be reliably inserted at an out-of-bounds address within the kernel’s VAS! While the affected range is limited to the ring’s item size multiplied by the aforementioned fixed bound, as we’ll see later on, that’s more than enough.
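To see why an out-of-range index sails through, we can transcribe the space calculation above into a free function and feed it some numbers. The function and constant names below are our own, and the `assert` merely stands in for the kernel’s panic. `index_within_ring` is one possible tighter check, not necessarily the fix Apple shipped.

```cpp
#include <cassert>
#include <cstdint>

const uint32_t kFixedIndexBound = 0x10000;  // the constant getIndices compares against

// Free-function transcription of calculateRemainingWriteSpace from the listing
// above; the assert plays the role of the panic in getIndices.
uint32_t remaining_write_space(uint32_t read_index, uint32_t write_index,
                               uint32_t max_index) {
    assert(read_index < kFixedIndexBound && write_index < kFixedIndexBound);
    if (read_index > write_index)
        return read_index - (write_index + 1);
    // Unsigned arithmetic: wraps around when write_index exceeds max_index.
    return max_index - write_index - (read_index == 0 ? 1u : 0u);
}

// A tighter check (our assumption): compare each index against the capacity
// of the ring it actually belongs to.
bool index_within_ring(uint32_t index, uint32_t max_index) {
    return index < max_index;
}

// Upper limit, in bytes past the ring base, of the memory an item can reach
// when only the fixed bound is enforced.
uint64_t max_reach_bytes(uint32_t item_size) {
    return static_cast<uint64_t>(item_size) * kFixedIndexBound;
}
```

Taking a 256-entry ring as an example, a write index of 5000 stays below the fixed bound, so no panic occurs. In this transcription the subtraction then wraps around, and the ring appears to have billions of free slots. With an example item size of 48 bytes, the reachable window spans 48 * 0x10000 = 0x300000 bytes past the ring’s base.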

Triggering the Primitive

Before pressing on, it’s important that we prove that the scenario above is indeed feasible. After all, many components within the kernel might utilise the modified ring indices, which, in turn, may enforce their own validations.

To do so, we’ll perform a short experiment using our research platform. First, we’ll select an H2D ring, and fetch its corresponding object within the kernel. Using the aforementioned object, we can then locate the ring’s base address, allowing us to inspect its contents. Now, we’ll modify the ring indices by utilising the firmware’s DMA engine, while concurrently monitoring the kernel virtual address at the targeted offset for modification. If the primitive is triggered successfully, we should expect an item to be inserted at the target offset from the ring’s base address.

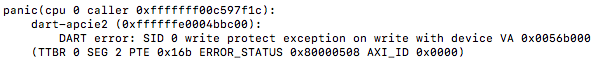

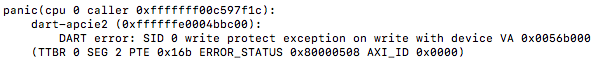

However, running the above experiment results in a resounding failure! Every attempt to trigger the out-of-bounds write results in a kernel panic, thereby crashing the device. Inspecting the panic logs reveals the source of this crash:

It appears that when executing our attack, the firmware attempts to perform a DMA read operation from an address beyond its IO-Space mapped ranges! Taking a moment to reflect on this, the source of the error is immediately apparent: since both the firmware and the host share the ring indices through IO-Space, modifying the aforementioned values affects not only the host, but also the firmware’s implementation of the MSGBUF protocol.

Namely, the firmware attempts to read the ring’s contents using the corrupted indices, resulting in an out-of-bounds access to IO-Space, triggering the above panic.

As we have control over the firmware, we could simply try to intercept the corresponding code paths in its MSGBUF implementation, thus preventing it from issuing the malformed DMA request. Unfortunately, this approach is easier said than done - the firmware’s implementation of MSGBUF is woven into many code-paths in both the ROM and RAM; attempting to patch-out each part results in either breakage of a different component, or in undesired side-effects.

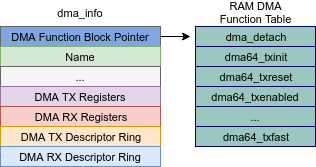

Instead of addressing the sources of the DMA transfers, we’ll go straight to the target -- the engine itself. Recall that each DMA engine on the firmware is accessible through an instance of a single structure (dma_info). Changing the DMA engine’s backplane register pointers within the dma_info structure would mean that while the calling code-paths are able to continue issuing malformed DMA requests, the requests themselves are never actually received by the DMA engine, thus preventing us from triggering a fault.

Indeed, incorporating the above patch into our vulnerability trigger, we can now freely modify the ring indices without inducing a crash. Furthermore, inspecting the corresponding kernel virtual address at the targeted index, we can see that our overwrite is finally successful!

Devising An Exploit Plan

Having concluded that the primitive is usable, we can now proceed to the next stage -- devising an exploit plan. Namely, we must decide on a data structure to target using the exploit primitive, which may allow us to either modify the kernel’s behaviour, or otherwise gain a useful primitive bringing us closer to that goal.

So which data structure should we target? As we do not have any visibility into the kernel’s address space, reliably locating structures within the kernel presents quite a challenge. What’s more, our primitive only allows limited control over the written content (namely, the data written by the host is an H2D ring item). On top of that, each OOB element can only be written at offsets which are multiples of the ring’s item size, thus introducing alignment constraints.

The above limitations make reliable exploitation rather difficult. Alas, if only there were a data structure whose internal composition were relatively flexible, and to which a single modification would grant us complete control over the host…

...But of course, we’ve already come across the perfect target -- DART’s translation tables!

Recall that DART’s translation tables govern the mapping between IO-Space and the host’s physical address space. If we were able to use our primitive in order to modify the tables, we might be able to introduce new mappings into IO-Space, pointing at arbitrary physical ranges within the host’s PAS. Mapping arbitrary physical memory into the Wi-Fi chip is a nearly ideal primitive, as it would allow the chip to modify any data structure used by the kernel, leading to trivial code execution.

In order to successfully carry out such an attack, we must first figure out whether DART’s translation tables indeed constitute valid targets for the vulnerability primitive. Namely, we must figure out whether they reside within the primitive’s scope of influence.

However, scanning through the memory ranges within the primitive’s scope, we quickly come to the realisation that the placement of objects following the message rings is highly variable. Indeed, each device reboot yields an entirely different layout, thus preventing us from relying on any particular object being placed at any given offset from a message ring.

Perhaps we’re out of luck…?

Shaping IO-Space

...Instead of relying on the lucky placement of nearby objects, let’s take matters into our own hands.

In order to place a DART translation table within the primitive’s scope, we’d need to either move a translation table into the primitive’s scope, or to move one of the message rings, thus shifting the primitive’s scope across different regions of the kernel’s memory.

The former approach seems infeasible; DART’s translation tables are only allocated when the IO-Space mappings are first populated (namely, when the Wi-Fi chip is first initialised). Once the mapping is complete, all of DART’s translation tables remain in their fixed positions within the kernel’s VAS.

But what about moving the rings? While control rings are immovable, a second set of rings exists -- “flow rings”. Flow rings are H2D rings used to facilitate the transfer of outgoing (TX) traffic. They do not carry the traffic itself, but rather notify the device of the transmitted frame’s metadata (including the IO-Space address at which its actual content is stored).

Unlike control rings, flow rings are far more “flexible”. Individual flows are dynamically added and removed as the need arises, by sending a corresponding control message from the host to the device. Each flow is identified by its endpoints (source and destination MAC), their encompassed protocol (i.e., EtherType), and their “priority”.

Perhaps we can use this dynamic nature of flow rings to our advantage. For example, if we were to delete a flow ring, it might subsequently get re-allocated at a different location in the kernel’s memory, thus shifting the scope of our OOB primitive to a possibly more “interesting” patch of objects.

Normally, deleting a flow ring is a two-way process; the host sends a deletion request, which is subsequently met by a corresponding message from the device, signalling a successful deletion. However, inspecting the host’s implementation of the above messages, it appears we can just as well skip the first half of the exchange, and send an unsolicited deletion response from the device:

1.  uint32_t AppleBCMWLANBusPCIeInterface::completeFlowRingDeleteResponseMsg(
4.      //Is the ring ID within bounds?
5.      if (msg->flow_ring_id < this->min_flow ||
6.          msg->flow_ring_id >= this->max_flow) {
7.          ...
8.      }
9.      //Does a flow ring exist at the given index?
10.     else if (this->flow_rings[msg->flow_ring_id]) {
11.         this->deleteFlowCallback(msg->status, msg->flow_ring_id);
12.         ...
13.         return 0;
14.     }
15.     else {
16.         ...
17.         return 0xE00002BC;
18.     }
19. }

function 0xFFFFFFF006D2FD44

Doing so causes an interesting side-effect to occur: instead of completely deleting the ring, the host decrements a single reference count on the ring object, which is insufficient to bring down the total count to zero (the missing release was meant to be performed by the code responsible for sending the deletion request in the first place).

Consequently, the flow ring is left mapped into IO-Space, but is unusable by the host. As such, newly allocated flow rings cannot inhabit the same IO-Space range (as it remains occupied by the unusable ring), and must instead be carved from higher IO-Space addresses.

This primitive has several interesting side-effects.

For starters, it allows us to re-allocate flow rings, thus moving around their base addresses within the kernel’s VAS, recasting the net over potentially interesting objects within the kernel.

More importantly, however, this primitive allows us to force the allocation of a brand new DART L2 translation table. Since each L2 translation table can only map a fixed range into IO-Space, by continuously leaking flow rings we are able to exhaust the available space in the L2 table, thereby forcing DART to allocate a new table from which the next IO-Space addresses are carved.

Lastly, as luck would have it, since both the rings themselves and DART’s translation tables are carved using the same allocator (IOMalloc), and have similar sizes, they are both carved from the same “zone” of memory. Therefore, by continuously leaking IO-Space addresses and creating new flow rings until a new DART L2 translation table is formed, we can guarantee that the new table will be placed in close proximity to the following flow ring, thereby placing the L2 translation table within our primitive’s scope!
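To get a feel for how leaking rings eventually forces a fresh L2 table, consider the following toy model. Every figure in it is hypothetical (the ring size, the IO-Space span covered by one L2 table, the starting address), and we assume the allocator hands out monotonically increasing IO-Space addresses once the lower ranges are pinned by leaked rings.

```cpp
#include <cassert>
#include <cstdint>

// Toy model: count how many ring allocations it takes until one of them needs
// an IO-Space address outside the L2 table that covers `next_free`.
// `table_span` is the number of IO-Space bytes one L2 table can map.
uint32_t allocations_until_new_table(uint64_t next_free, uint64_t ring_bytes,
                                     uint64_t table_span) {
    assert(ring_bytes > 0 && table_span > 0);
    const uint64_t current_table = next_free / table_span;
    uint32_t count = 0;
    for (;;) {
        ++count;
        const uint64_t last_byte = next_free + ring_bytes - 1;
        if (last_byte / table_span != current_table)
            return count;         // this allocation spills into a new L2 table
        next_free += ring_bytes;  // the ring is leaked, its range stays occupied
    }
}
```

Under these assumptions the number of rings we need to leak is bounded by the span of a single L2 table divided by the ring size, which is why the process terminates quickly and predictably.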

Putting it all together, we can finally reach a reliable placement of DART translation tables in close proximity to a flow ring, thereby allowing us to overwrite entries in the translation tables with flow ring items.

Flow Ring Items vs. DART Descriptors

To understand whether flow ring items make good candidates to overwrite DART descriptors, let’s take a moment to inspect their structure. As these items are present in the same form in the Android driver, we are spared the need to reverse-engineer them:

So how does the above structure relate to a DART descriptor?

As the above structure has a 64-bit aligned size, and ring items are always placed in increments of the same size, we can deduce that each quadword in the above structure will reside in a 64-bit aligned address. Similarly, DART descriptors are 64 bits wide, and are placed in 64-bit aligned addresses. Therefore, each aligned quadword in the above structure serves as a potential candidate for replacing a DART descriptor.

However, going over the above quadwords, it is quickly apparent that no fully-controlled word exists within the structure. Indeed, the first and last word are composed of mostly constant values, whereas the third and fourth contain IO-Space addresses (whose forms are incompatible with DART descriptors). Nonetheless, taking a closer look, it appears that the second word is at least somewhat malleable. Its lower six bytes are governed by the destination MAC address to which the frame is being transmitted, while the two upper bytes contain the beginning of our source MAC.

Assuming we could cause the host to send frames to a MAC address of our choosing, that would grant us control over the lower six bytes. However, the remaining two bytes are populated using our device’s MAC address, a much harder target for modification...
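The composition of that second quadword can be illustrated as follows. This is a sketch under two assumptions that go beyond the text above: that the quadword holds the first eight bytes of a standard Ethernet header (destination MAC followed by source MAC), and that the host reads it as a little-endian value. All names are our own.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

typedef std::array<uint8_t, 6> Mac;

// Build the 64-bit value formed by six bytes of destination MAC followed by
// the first two bytes of the source MAC, read as a little-endian quadword.
uint64_t second_quadword(const Mac& dst, const Mac& src) {
    uint64_t value = 0;
    for (int i = 0; i < 6; ++i)
        value |= static_cast<uint64_t>(dst[i]) << (8 * i);
    value |= static_cast<uint64_t>(src[0]) << 48;
    value |= static_cast<uint64_t>(src[1]) << 56;
    return value;
}

// Byte `i` (0..7) of the quadword, in the order it sits in memory.
uint8_t byte_at(uint64_t quadword, unsigned i) {
    assert(i < 8);
    return static_cast<uint8_t>(quadword >> (8 * i));
}

// The part of the quadword governed by the destination MAC alone.
uint64_t destination_bits(uint64_t quadword) {
    return quadword & 0x0000FFFFFFFFFFFFULL;
}
```

For instance, a destination of 11:22:33:44:55:66 and a source beginning with aa:bb would yield the quadword 0xBBAA665544332211 under these assumptions. The low 48 bits are ours to choose, while the top 16 are dictated by the device’s own address.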

Spoofing The Source MAC?

To understand whether we can indeed modify the device’s MAC address, let’s take a closer look at the mechanisms through which the MAC address may be programmable on the Wi-Fi chip.

Like many production devices, Broadcom’s Wi-Fi chips allow the storage of chip-specific configuration using one of two mechanisms; either by using a block of Serial Programmable ROM (SPROM) or by utilising a set of One Time Programmable (OTP) fuses. The Wi-Fi chip present on the iPhone 7 uses the latter mechanism.

As for the host, it stores the Wi-Fi chip’s MAC address in the “device tree” (among many other device-specific properties). The “device tree” is a simple hierarchical representation of hardware components utilised by the platform (much like its Linux counterpart, bearing the same name), allowing consumers within the kernel to easily access (and populate) its nodes.

During the Wi-Fi chip’s initialisation, the AppleBCMWLANCore driver retrieves the contents of the chip’s OTP fuses (using the PCIe BARs), and proceeds to parse them according to the PCMCIA Card Information Structure (CIS) format. Reverse-engineering the parsing functions in the kernel, it is quickly apparent that one tag in particular bears significance with regards to our pursuits.

If a “Function Extension” tag is encountered in the CIS data embedded in the OTP, the kernel will extract the MAC address encapsulated within it, and insert it into the “local-mac-address” node in the device tree, representing the Wi-Fi MAC address!

Extracting the stored OTP contents from the kernel, we can see that no such element is present in the OTP contents to begin with, thus allowing us to insert our own tag without fear of causing a collision:

Wi-Fi Chip OTP

Therefore, to change the MAC address, all we’d need to do is fuse the corresponding bits into the OTP, thus inserting the new CIS tag. However, this is easier said than done. For starters, writing to the OTP is a risky operation, and may result in permanent damage to the chip if done incorrectly. Moreover, as its name implies, writing to the OTP is a one-time operation, leaving no room for error. Perhaps we could avoid changing the MAC after all?

After discussing the above situation, my colleague Ian Beer suggested an alternative!

Why not, instead, check if the high-order bits in the DART descriptor are actually being used for the translation process? To test this suggestion, we’ll use the research platform to insert a valid L2 descriptor into DART, with one small caveat -- we’ll change the two upper bytes in the 64-bit descriptor to “corrupted” values. After inserting the mapping, we can simply insert a DMA hook into the firmware, performing a DMA access to the aforementioned address.

Running the experiment above we are greeted with a positive result! Indeed, the upper bytes of the DART descriptor are ignored by the translation process, thus sparing us the need to modify the MAC.

Spoofing The Destination MAC

Having confirmed that modifying the source MAC is no longer a barrier, all that remains is to cause the host to send a frame to a crafted MAC address, thus allowing us to control the six significant bytes within our 64-bit word.

Naturally, one way to solicit a response from the host is to transmit an ICMP Echo Request (ping) to it, subsequently triggering a corresponding ICMP Echo Reply. While this approach can easily trigger the transmission of frames from the host, it only allows frames to be transmitted to known destinations, but does not offer control over the destination MAC.

To trigger communications to our target MAC, we’ll first launch an ARP Spoofing attack: we’ll send a crafted ping from an arbitrary (unused) IP address, thereby causing the host to send an “ARP Request” querying the MAC address of the crafted IP. We’ll then reply with a response encoding a MAC address of our choosing, thus associating the IP address with the crafted MAC value.

However, several problems arise when using this method. First, recall that the MAC address is meant to masquerade as a valid DART L2 Descriptor. As we’ve seen in our analysis of the descriptor formats, every valid L2 descriptor must have the two least-significant bits set. This poses somewhat of a problem for MAC addresses, as their bottom bits bear special significance:

Setting the bottom two bits in the MAC address would indicate that it is a broadcast / multicast address. As we are sending unicast traffic (and are expecting a unicast response), it might be difficult to solicit such responses from the host. Furthermore, any network-resident security devices might inspect the traffic and flag it as suspicious (especially as we are executing a classical ARP spoofing attack). What’s more, the router or access point may refuse to route unicast traffic to a broadcast MAC.
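The clash between the two formats is easy to demonstrate. In IEEE 802 addressing, the least-significant bit of the first octet marks a group (multicast or broadcast) address, and the next bit marks a locally administered one. The helpers below are our own illustration; they assume the little-endian layout in which the descriptor’s lowest byte becomes the first MAC octet, and any value passed to them is an arbitrary example rather than a real descriptor.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Render the low 48 bits of a descriptor-shaped value as a MAC address,
// lowest byte first (assumes a little-endian layout).
std::string mac_from_low48(uint64_t low48) {
    assert((low48 >> 48) == 0);  // only six bytes fit into a MAC address
    char text[18];
    std::snprintf(text, sizeof(text), "%02x:%02x:%02x:%02x:%02x:%02x",
                  static_cast<unsigned>(low48 & 0xFF),
                  static_cast<unsigned>((low48 >> 8) & 0xFF),
                  static_cast<unsigned>((low48 >> 16) & 0xFF),
                  static_cast<unsigned>((low48 >> 24) & 0xFF),
                  static_cast<unsigned>((low48 >> 32) & 0xFF),
                  static_cast<unsigned>((low48 >> 40) & 0xFF));
    return std::string(text);
}

// Bit 0 of the first octet: individual/group (set for multicast and broadcast).
bool is_group_address(uint64_t low48) { return (low48 & 0x1) != 0; }

// Bit 1 of the first octet: universally/locally administered.
bool is_locally_administered(uint64_t low48) { return (low48 & 0x2) != 0; }
```

Any value with its two lowest bits set therefore maps to a MAC whose first octet ends in binary 11, which every receiver along the way will treat as a group address.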

To get around the above limitations, we’ll simply inject the traffic directly from the firmware, without transmitting it over the air. To achieve this goal, we’ve written a small assembly stub that, when executed on the firmware, injects the encapsulated frames directly into the host, as if it were transmitted over the network.

This allows us to inject even potentially malformed traffic that would not have been routable (like unicast traffic from a broadcast MAC). Indeed, after running the ARP spoofing vector with the above mechanism, we are able to solicit responses from the host to our crafted (broadcast) MAC address (XNU does not object to sending unicast traffic to broadcast MACs). Great!

Inception

Finally, all the ducks are lined up in a row -- we can solicit traffic to MAC addresses of our choosing (even broadcast MACs), without having to modify the source MAC. Furthermore, we can shape IO-Space in order to force a new DART translation table to be allocated following a flow ring within the kernel’s VAS. Therefore, we can overwrite DART descriptors with our own crafted values, thus introducing new mappings into IO-Space. However, a single question remains -- which physical address should we map into IO-Space?

After all, we still haven’t dealt with the issue of KASLR. As the kernel’s loading addresses, both physical and virtual, are “slid” using a randomised value, we cannot locate physical addresses within the kernel until we uncover the slide’s value. If we cannot reliably locate the kernel’s base address, which physical addresses can we find?

To get around this limitation, we’ll use one more trick! While the host’s physical address space houses the DRAM, in which the kernel and application memory are stored, additional regions of physically addressable content can also be found in the PAS. For instance, hardware registers are mapped into fixed physical addresses, allowing the host to interact with peripherals on the SoC. Among these peripherals is DART itself!

As we’ve previously seen, DART’s translation process is initiated using four “L0 descriptors”. These descriptors are fed into DART’s hardware registers, denoting the base addresses of the translation tables from which the IO-Space translation process begins. If we were to map DART’s hardware registers into IO-Space, we could read the descriptors, thus allowing us to locate DART’s translation tables within the physical address space!

It should be noted that although DART’s hardware registers are addressable within the host’s physical address space, it remains unknown why IO-Space mappings should even be allowed to include ranges beyond the DRAM’s bounds. Indeed, it stands to reason that such mappings would be prohibited by the hardware. However, as it happens, no such restriction is enforced - DART freely allows any physical range to be inserted into IO-Space.

Therefore, if we wish to map-in DART’s own hardware registers into IO-Space, all that remains is to locate the physical ranges corresponding to DART’s hardware registers! To do so, we’ll use a combined approach.

First, we’ll use our research platform to extract the DART instance, from which we can subsequently retrieve the kernel VAS pointer corresponding to DART’s hardware registers. Then, using our translation table module, we can proceed to convert the kernel virtual address to its matching physical range. After doing so, we are presented with the following result:

Great! The address is clearly not within the DRAM’s range, hinting that we’re on the right track.

To verify whether this is indeed the correct address, we’ll use a second approach. As we already noted, the device hierarchy is stored within a structure called the “device tree”. Different properties relating to each peripheral, including the addresses of their corresponding hardware registers, are stored as nodes within this tree.

The device tree itself is present in a binary format within the firmware image (encapsulated in an IMG4 container). After extracting the device tree, we are presented with a blob storing the device hierarchy. Although the tree’s format is undocumented, inspecting the binary reveals an extremely simple structure: each node begins with a fixed header denoting the number of children and entries it contains, and each entry consists of a fixed-length name followed by a variable-length value. I later discovered that Jonathan Levin has similarly reversed this structure, and has written a tool to parse out its contents (albeit for an IMG3 container) -- you can check out his script here.
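The layout described above can be sketched as a small recursive parser. This is only an illustration, not the script used in our research: the field order in the header, the 32-byte name length, the 4-byte length field and the 4-byte value padding are all assumptions, and the sample blob (including the node name and register values) is synthetic.

```python
import struct

NAME_LEN = 32  # assumed fixed length of an entry's name field


def parse_node(blob, offset=0):
    """Parse one node at `offset`; return (node, offset just past the node)."""
    # Assumed header: two little-endian 32-bit counts (entries, then children).
    n_entries, n_children = struct.unpack_from("<II", blob, offset)
    offset += 8
    props = {}
    for _ in range(n_entries):
        raw_name = blob[offset:offset + NAME_LEN]
        name = raw_name.split(b"\x00", 1)[0].decode("ascii")
        offset += NAME_LEN
        (length,) = struct.unpack_from("<I", blob, offset)
        offset += 4
        props[name] = blob[offset:offset + length]
        offset += (length + 3) & ~3  # assumed: values are padded to 4 bytes
    children = []
    for _ in range(n_children):
        child, offset = parse_node(blob, offset)
        children.append(child)
    return {"props": props, "children": children}, offset


def make_entry(name, value):
    """Build one synthetic entry in the assumed layout (for the demo only)."""
    padded = value + b"\x00" * (-len(value) % 4)
    header = name.encode("ascii").ljust(NAME_LEN, b"\x00")
    return header + struct.pack("<I", len(value)) + padded


# Synthetic two-node tree; the names and register values are made up.
child = (struct.pack("<II", 2, 0)
         + make_entry("name", b"example-dart\x00")
         + make_entry("reg", struct.pack("<QQ", 0x1000, 0x4000)))
root = struct.pack("<II", 1, 1) + make_entry("name", b"device-tree\x00") + child

tree, end = parse_node(root)
for node in tree["children"]:
    base, size = struct.unpack("<QQ", node["props"]["reg"])
    print(node["props"]["name"].rstrip(b"\x00").decode(), hex(base), hex(size))
```

Because every node carries its own child count, a single recursive pass over the blob is enough to recover the whole hierarchy.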

Regardless, after writing our own Python script to parse the device tree, we are presented with the following result:

Ah-ha! We once again find the same physical address, thus concluding that our analysis of DART’s hardware registers is correct.

Putting it all together, we can now utilise our exploit primitive to map the physical address containing DART’s registers into IO-Space. Once mapped, we can proceed to read the hardware registers’ values, including the L0 descriptors. It should be noted that attempting to access the hardware registers from the host requires strict 32-bit load and store operations -- attempting a 64-bit load from the hardware registers results in a garbled value being returned. Curiously, however, DMA-ing to and from the hardware registers from the Wi-Fi chip goes unhindered!

Using the L0 descriptor, we can now extract the physical address of the next translation table in DART’s hierarchy. Then, by repeating the exploit primitive and mapping-in the newly discovered physical address into IO-Space, we can repeat the process, descending down DART’s translation hierarchy until we reach a DART L2 translation table. Thus, using one flow ring, we can bring them all, and in IO-Space bind them.
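The descent described above can be sketched roughly as follows. The descriptor encoding here (a valid bit in bit 0, a page-aligned address in the remaining bits, 8-byte descriptors) is a hypothetical stand-in rather than DART’s actual format, and `read_u64` represents "map the page using the exploit primitive, then DMA-read it from the Wi-Fi chip".

```python
VALID_BIT = 0x1                 # hypothetical "descriptor is valid" flag
ADDRESS_MASK = 0xFFFFFFFFF000   # hypothetical page-aligned address field
DESCRIPTOR_SIZE = 8             # assumed size of one descriptor in bytes


def table_address(descriptor):
    """Extract the physical address of the table a descriptor points to."""
    if not descriptor & VALID_BIT:
        raise ValueError("descriptor is not valid")
    return descriptor & ADDRESS_MASK


def find_l2_table(l0_descriptor, l1_index, read_u64):
    """Follow an L0 descriptor to its L1 table, then one L1 entry to an L2 table.

    `read_u64(physical_address)` is a caller-supplied primitive that returns
    the 64-bit value stored at a physical address.
    """
    l1_table = table_address(l0_descriptor)
    l1_descriptor = read_u64(l1_table + DESCRIPTOR_SIZE * l1_index)
    return table_address(l1_descriptor)


# Toy "physical memory" holding a single L1 entry; all addresses are made up.
fake_memory = {0x800100000 + DESCRIPTOR_SIZE * 3: 0x800200000 | VALID_BIT}
l2_table = find_l2_table(0x800100000 | VALID_BIT, 3, fake_memory.__getitem__)
print(hex(l2_table))
```

Each step needs only a read of a single descriptor, which is why repeating the same mapping primitive is enough to reach the L2 table.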

Once an L2 translation table is located within the physical address space, we can proceed to map it into IO-Space using our exploit primitive one last time, thus inserting DART’s own translation table into IO-Space!

By mapping DART’s translation table into its own IO-Space ranges, we can now utilise DMA access from the Wi-Fi chip in order to freely introduce new mappings into IO-Space (removing the need for the exploit primitive), thus gaining full control over the host’s physical memory!

Furthermore, as DART’s translation entries are never cleared, we are guaranteed that once the malicious IO-Space entries are inserted, they remain accessible to the Wi-Fi chip, until the device itself reboots. As such, the exploit process need only occur once in order to introduce a backdoor allowing the Wi-Fi chip to freely access the host’s physical memory.

One curiosity of note is that DART has a rather large TLB. Therefore, changes in IO-Space may not be reflected immediately, but only once the stale entries are evicted from the cache. Nonetheless, this is easily dealt with by mapping in IO-Space addresses in a circular pattern, thus allowing stale entries to get cleared.
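One way to picture the circular pattern is a simple round-robin allocator over a pool of IO-Space pages. The class name, pool size and base address below are illustrative assumptions; in practice the pool would need to be large enough that an entry has been evicted from the TLB by the time its slot is reused.

```python
from itertools import cycle


class SlotRotator:
    """Hand out IO-Space page addresses from a fixed pool in circular order."""

    def __init__(self, base_io_address, slot_count, page_size=0x1000):
        self._slots = cycle(
            base_io_address + index * page_size for index in range(slot_count)
        )

    def next_slot(self):
        """Return the IO-Space address to use for the next new mapping."""
        return next(self._slots)


# Illustrative values only: a pool of four pages at a made-up IO-Space base.
rotator = SlotRotator(base_io_address=0x80000000, slot_count=4)
print([hex(rotator.next_slot()) for _ in range(6)])
```

Since each new mapping lands on a different page than the previous one, a freshly written entry is never shadowed by a cached translation for the same IO-Space address until the pool wraps around.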

Finding The KASLR Slide

At long last, we have complete control over the entire physical address space, directly from the Wi-Fi chip. Consequently, we can proceed to map and modify any physical address we desire, even those corresponding to the kernel’s data structures.

While this form of access is sufficient in order to subvert the kernel, there’s one tiny snag we have yet to deal with: KASLR. Since the kernel’s physical base address is randomised using the KASLR slide, and we have yet to deduce its value, we might have to resort to scanning the DRAM’s physical address ranges until we locate the kernel itself.

This approach is rather inefficient. Instead, we can opt for a more elegant path. Recall that, as we’ve just seen, hardware registers may be freely mapped into IO-Space. As hardware registers are not affected by the KASLR slide (indeed they are mapped at fixed physical addresses), they can be trivially located regardless of the current “slide” value.

Perhaps one of the hardware registers can be used as an oracle to deduce the KASLR slide?

Recall that newer devices, such as the iPhone 7, enforce the integrity of the kernel using a hardware mechanism dubbed “KTRR”. Simply put, this mechanism allows the device to provide “lockdown” regions, to which subsequent modifications are prohibited. These regions are programmed using a special set of hardware registers.

Amusingly, this very same mechanism can be used to deduce the KASLR slide!

By mapping in physical addresses corresponding to the aforementioned hardware registers, we can proceed to read their contents directly from IO-Space. This, in turn, reveals the physical ranges encoded in the “lockdown registers”, which store none other than the kernel’s base address.
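Once the lockdown region’s lower bound has been read, recovering the slide is a single subtraction. The sketch below assumes that the register value is a plain byte address and that the kernel’s unslid physical base is known from static analysis; both constants are made-up placeholders, not values taken from a real device.

```python
# Hypothetical placeholder for the kernel's unslid physical base address.
UNSLID_KERNEL_PHYSICAL_BASE = 0x800004000


def kaslr_slide(lockdown_lower_bound, unslid_base=UNSLID_KERNEL_PHYSICAL_BASE):
    """Return the slide implied by the lockdown region's lower bound."""
    slide = lockdown_lower_bound - unslid_base
    if slide < 0:
        raise ValueError("lockdown region begins below the unslid base")
    return slide


# Made-up register value, purely to show the arithmetic.
print(hex(kaslr_slide(0x806004000)))
```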

The Exploit

Summing up all of the above, we’ve finally written an exploit, allowing full control over the device’s physical memory over-the-air, using Wi-Fi communication alone. You can find the exploit here.

It should be noted that several smaller details have been omitted from the blog post, in the interest of (some) brevity. For instance, locating the offset between the newly allocated DART translation table and the flow ring requires a process of probing various IO-Space addresses, while also guaranteeing that alignment constraints enforced by the granularity of ring item sizes are met. We encourage researchers to read the exploit’s code in order to discover any such omitted parts.

The exploit has been tested against the iPhone 7 running iOS 10.2 (14C92). The vulnerabilities are present in versions of iOS up to (and including) iOS 10.3.3. Researchers wishing to utilise the exploit on different iDevices or different versions would need to adjust the symbols used by the exploit.

Upon successful execution, the exploit exposes APIs to read and write the host’s physical memory directly over-the-air, by mapping in any requested address to the controlled DART L2 translation table, and issuing DMA accesses to the corresponding mapped IO-Space addresses.
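The shape of such an API can be illustrated with a toy model. Nothing below talks to real hardware, and it is not the exploit’s actual interface: `ToyDart` merely simulates a single L2 table plus sparse physical memory, and `PhysicalMemory` shows the "point a window page at the target, then DMA through it" pattern. All names and addresses are hypothetical.

```python
PAGE = 0x1000  # assumed IO-Space page size


class ToyDart:
    """Simulated translation: IO-Space page number -> physical page base."""

    def __init__(self):
        self.l2 = {}    # stands in for the controlled L2 translation table
        self.phys = {}  # sparse physical memory: address -> byte value

    def dma_read(self, io_address, size):
        start = self.l2[io_address // PAGE] + io_address % PAGE
        return bytes(self.phys.get(start + i, 0) for i in range(size))

    def dma_write(self, io_address, data):
        start = self.l2[io_address // PAGE] + io_address % PAGE
        for i, byte in enumerate(data):
            self.phys[start + i] = byte


class PhysicalMemory:
    """Read and write physical memory through one remappable IO-Space page."""

    def __init__(self, dart, window_page):
        self.dart = dart
        self.window_page = window_page

    def _chunks(self, address, size):
        # Split the access at page boundaries, remapping the window each time.
        while size > 0:
            step = min(size, PAGE - address % PAGE)
            # Stand-in for a DMA write into the mapped L2 translation table.
            self.dart.l2[self.window_page] = address - address % PAGE
            yield self.window_page * PAGE + address % PAGE, step
            address += step
            size -= step

    def read(self, address, size):
        return b"".join(
            self.dart.dma_read(io, n) for io, n in self._chunks(address, size)
        )

    def write(self, address, data):
        offset = 0
        for io, n in self._chunks(address, len(data)):
            self.dart.dma_write(io, data[offset:offset + n])
            offset += n


memory = PhysicalMemory(ToyDart(), window_page=0x80)
memory.write(0x800000FFE, b"ABCDEF")  # deliberately straddles a page boundary
print(memory.read(0x800000FFE, 6))
```

Splitting accesses at page boundaries keeps each DMA transfer inside a single mapped page, so one window page suffices for requests of any length.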

For convenience’s sake, the exploit also locates the kernel’s physical base address using the method we described above (using the KTRR read-only region registers), thus allowing researchers to easily explore the kernel’s physical memory ranges.

Afterword

Over the course of this series of blog posts, we’ve explored the security of the Wi-Fi stack on Apple devices. Consequently, we constructed a complete exploit chain, allowing attackers to reliably gain control over the iOS kernel on an iPhone 7 using Wi-Fi communication alone.

During our research, we explored several components, including Broadcom’s Wi-Fi firmware, the DART IOMMU, and Apple’s Wi-Fi drivers. Each of the aforementioned components is proprietary, thus requiring substantial effort to gain visibility into their operations. We hope that by providing the tools used to conduct our research, additional exploration of these surfaces will be performed in the future, allowing for their corresponding security postures to be enhanced.

We’ve also seen how the iPhone utilises hardware security mechanisms, such as DART, in order to provide isolation between the host and potentially malicious components. These mechanisms significantly raise the bar for launching successful attacks targeting the host. Nonetheless, additional research into DART is needed in order to explore all facets of its implementation. For instance, while we’ve explored the enacted IO-Space through the prism of the Wi-Fi chip, additional PCIe components exist on the SoC, which are similarly guarded by DARTs. These components remain, as of yet, unexplored.

Apart from fixing individual vulnerabilities in the security boundaries between the host and the Wi-Fi chip, several structural enhancements can be applied to make future exploitation harder. This includes introducing read-only mappings to DART (if they are not already present), clearing unused descriptors from DART’s translation tables upon rebooting the associated component, and preventing IO-Space mappings from exposing physical ranges beyond the DRAM.

Lastly, while memory isolation goes a long way towards defending the host against a rogue Wi-Fi chip, the host must still consider all communications originating from the Wi-Fi chip as potentially malicious. To this end, the numerous communication channels between the two endpoints (including event packets, “ioctls”, and control commands), must be designed to withstand malformed data transmitted by the chip.

I could imagine what a moral satisfaction one gets when after such a hard time reversing this complex software and hardware to finally see "Gained full R/W to physical memory!" :) Great work!

Comment thread on HN: https://news.ycombinator.com/item?id=15460785

You mention that older versions of the iPhone employ a USB versus PCIe interface with its WiFi hardware. Does this exploit also work for the USB version, or does USB isolate the WiFi hardware enough to prevent a full takeover? Moreover, would the backdoor installation exploit mentioned in part two of your series apply to older iPhone hardware as well, or simply more recent versions?

This exploit relies on mechanisms specific to PCIe. Some of the more high-level vulnerabilities we've discovered (e.g., in the ioctls and "event packets") are not interface-related, and so might be present in older devices -- I haven't done research on those devices, so I don't know for sure. Regardless, those issues would be harder to reliably exploit, as their primitives are quite weak.

Understood, and thank you for the clarification. How would you recommend iPhone users, of both recent and legacy (USB) devices handle these vulnerabilities?

The vulnerabilities have been addressed by Apple in iOS 11, so updating supported devices is sufficient. As I didn't research older devices, I don't know whether they are similarly affected, so unfortunately I can't advise you there.

this could simply be avoided if the wifi chip had its own ram instead of sharing it - why dont companies do this

The Wi-Fi SoC does have its own RAM, but it has to communicate data to the host via some interface. This is done by sharing "windows" of the host's RAM (as is the norm with PCIe devices).

One of the ways to prevent these issues from occurring in the future is to introduce read-only mappings into DART -- preventing race conditions on shared resources with the host.

this could have be avoided if the wifi chip had its own ram instead of sharing it

Thanks for the excellent article!

Which tools are you using to generate the illustrations? I like them. Very clear.

Thank you for the kind words. I mainly use GIMP and draw.io, and sometimes just shell escape characters for pretty shell colours.

Late comment: this is utterly brilliant. Thank you
