Posted by Seth Jenkins, Project Zero

For a fair amount of time, null-deref bugs were a highly exploitable kernel bug class. Back when the kernel was able to access userland memory without restriction, and userland programs were still able to map the zero page, there were many easy techniques for exploiting null-deref bugs. However, with the introduction of modern exploit mitigations such as SMEP and SMAP, as well as mmap_min_addr preventing unprivileged programs from mmap’ing low addresses, null-deref bugs are generally not considered a security issue in modern kernel versions. This blog post provides an exploit technique demonstrating that treating these bugs as universally innocuous often leads to faulty evaluations of their relevance to security.

Kernel oops overview

At present, when the Linux kernel triggers a null-deref from within a process context, it generates an oops, which is distinct from a kernel panic. A panic occurs when the kernel determines that there is no safe way to continue execution, and that therefore all execution must cease. However, the kernel does not stop all execution during an oops - instead, the kernel tries to recover as best it can and continue execution. In the case of a task, that involves throwing out the existing kernel stack and going directly to make_task_dead, which calls do_exit. The kernel will also publish in dmesg a “crash” log and kernel backtrace depicting what state the kernel was in when the oops occurred. This may seem like an odd choice to make when memory corruption has clearly occurred - however, the intention is to make kernel bugs easier to detect and log, under the philosophy that a working system is much easier to debug than a dead one.

The unfortunate side effect of the oops recovery path is that the kernel is not able to perform any of the cleanup that it would normally perform on a typical syscall error recovery path. This means that any locks that were locked at the moment of the oops stay locked, any refcounts remain taken, any memory otherwise temporarily allocated remains allocated, etc. However, the process that generated the oops, its associated kernel stack, task struct and derivative members etc. can and often will be freed, meaning that depending on the precise circumstances of the oops, it’s possible that no memory is actually leaked. This becomes particularly important for exploitation later.
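As a rough user-space analogy only (not kernel code), the skipped cleanup can be modeled with setjmp/longjmp: a function takes a reference and a lock, and an abrupt exit abandons its stack before the release path runs. All names below (model_refcount, model_syscall, and so on) are hypothetical and chosen for illustration.

```c
#include <setjmp.h>

/* Hypothetical user-space stand-ins for kernel state; not kernel APIs. */
static int model_refcount;      /* references currently held */
static int model_lock_held;     /* 1 while the modeled lock is taken */
static jmp_buf model_oops_exit; /* where the modeled "oops" resumes */

/* Takes a reference and a lock, optionally "oopses", then cleans up. */
static void model_syscall(int trigger_oops)
{
    model_refcount++;
    model_lock_held = 1;

    if (trigger_oops)
        longjmp(model_oops_exit, 1); /* abandon this stack; cleanup below never runs */

    /* Normal exit path: release everything that was taken. */
    model_lock_held = 0;
    model_refcount--;
}

/* Runs one modeled syscall from a clean state and returns the refcount left behind. */
int run_model_syscall(int trigger_oops)
{
    model_refcount = 0;
    model_lock_held = 0;

    if (setjmp(model_oops_exit) == 0)
        model_syscall(trigger_oops);

    return model_refcount;
}

/* Reports whether the modeled lock is still held after the last run. */
int model_lock_still_held(void)
{
    return model_lock_held;
}
```

Calling run_model_syscall(0) leaves nothing behind, while run_model_syscall(1) returns with one reference still counted and the lock still marked as held, which mirrors the state described above.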

Reference count mismanagement overview

Refcount mismanagement is a fairly well-known and exploitable issue. In the case where software improperly decrements a refcount, this can lead to a classic UAF primitive. The case where software improperly doesn’t decrement a refcount (leaking a reference) is also often exploitable. If the attacker can cause a refcount to be repeatedly improperly incremented, it is possible that given enough effort the refcount may overflow, at which point the software no longer has any remotely sensible idea of how many references are held on an object. In such a case, it is possible for an attacker to destroy the object by incrementing and decrementing the refcount back to zero after overflowing, while still holding reachable references to the associated memory. 32-bit refcounts are particularly vulnerable to this sort of overflow. It is important, however, that each increment of the refcount allocates little or no physical memory. Even a single byte allocation is quite expensive if it must be performed 2^32 times.
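The arithmetic behind the overflow can be sketched in a few lines of C. This is a model using plain unsigned integers, not the kernel's atomic_t, and the function names are hypothetical.

```c
#include <stdint.h>

/* Value of a 32-bit wrapping counter after `increments` leaked references. */
uint32_t refcount_after_leaks(uint32_t start, uint64_t increments)
{
    /* Truncating to 32 bits is arithmetic modulo 2^32, which models the overflow. */
    return (uint32_t)(start + increments);
}

/* Total bytes consumed if every leaked reference also leaks `bytes_per_leak` bytes. */
uint64_t memory_cost_of_overflow(uint64_t bytes_per_leak)
{
    return bytes_per_leak * (UINT64_C(1) << 32); /* bytes_per_leak * 2^32 */
}
```

For example, refcount_after_leaks(1, (1ULL << 32) - 1) is 0: a counter that legitimately starts at 1 reads as zero after 2^32 - 1 leaked increments. memory_cost_of_overflow(1) is 4294967296 bytes (4 GiB), which is why even a one-byte allocation per increment is expensive.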

Example null-deref bug

When a kernel oops unceremoniously ends a task, any refcounts that the task was holding remain held, even though all memory associated with the task may be freed when the task exits. Let’s look at an example - an otherwise unrelated bug I coincidentally discovered in the very recent past:

    static int show_smaps_rollup(struct seq_file *m, void *v)
    {
        struct proc_maps_private *priv = m->private;
        struct mem_size_stats mss;
        struct mm_struct *mm;
        struct vm_area_struct *vma;
        unsigned long last_vma_end = 0;
        int ret = 0;

        priv->task = get_proc_task(priv->inode); //task reference taken
        if (!priv->task)
            return -ESRCH;

        mm = priv->mm; //With no vma's, mm->mmap is NULL
        if (!mm || !mmget_not_zero(mm)) { //mm reference taken
            ret = -ESRCH;
            goto out_put_task;
        }

        memset(&mss, 0, sizeof(mss));

        ret = mmap_read_lock_killable(mm); //mmap read lock taken
        if (ret)
            goto out_put_mm;

        hold_task_mempolicy(priv);

        for (vma = priv->mm->mmap; vma; vma = vma->vm_next) {
            smap_gather_stats(vma, &mss);
            last_vma_end = vma->vm_end;
        }

        show_vma_header_prefix(m, priv->mm->mmap->vm_start,
                               last_vma_end, 0, 0, 0, 0); //the deref of mmap causes a kernel oops here
        seq_pad(m, ' ');
        seq_puts(m, "[rollup]\n");

        __show_smap(m, &mss, true);

        release_task_mempolicy(priv);
        mmap_read_unlock(mm);

    out_put_mm:
        mmput(mm);
    out_put_task:
        put_task_struct(priv->task);
        priv->task = NULL;

        return ret;
    }

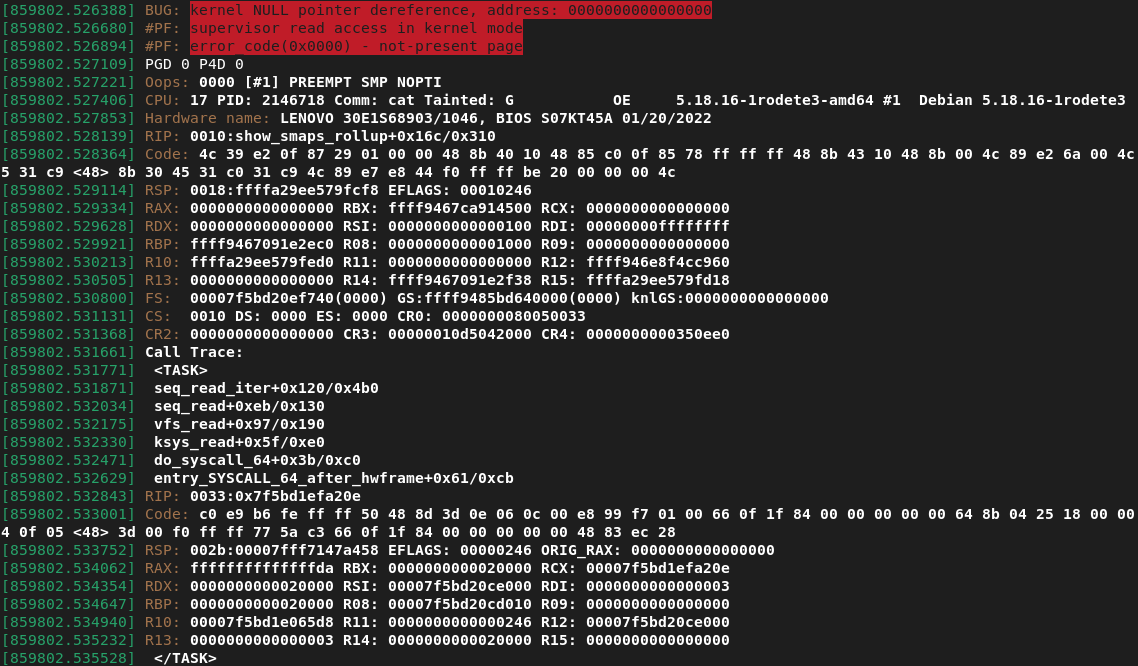

This file is intended simply to print a set of memory usage statistics for the respective process. Regardless, this bug report reveals a classic and otherwise innocuous null-deref bug within this function. In the case of a task that has no VMAs mapped at all, the task’s mm_struct mmap member will be equal to NULL. Thus the priv->mm->mmap->vm_start access causes a null dereference and consequently a kernel oops. This bug can be triggered by simply reading /proc/[pid]/smaps_rollup on a task with no VMAs (which itself can be stably created via ptrace).

This kernel oops will mean that the following events occur:

1. The associated struct file will have a refcount leaked if fdget took a refcount (we’ll try and make sure this doesn’t happen later)

2. The associated seq_file within the struct file has a mutex that will forever be locked (any future reads/writes/lseeks etc. will hang forever).

3. The task struct associated with the smaps_rollup file will have a refcount leaked

4. The mm_struct’s mm_users refcount associated with the task will be leaked

5. The mm_struct’s mmap lock will be permanently readlocked (any future write-lock attempts will hang forever)

Each of these conditions is an unintentional side-effect that leads to buggy behaviors, but not all of those behaviors are useful to an attacker. The permanent locking of events 2 and 5 only makes exploitation more difficult. Condition 1 is unexploitable because we cannot leak the struct file refcount again without taking a mutex that will never be unlocked. Condition 3 is unexploitable because a task struct uses a safe saturating kernel refcount_t which prevents the overflow condition. This leaves condition 4.
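The difference between a saturating counter and a plain wrapping one can be illustrated with a simplified model. This is not the kernel's refcount_t implementation (which uses its own sentinel value and emits warnings); MODEL_SATURATED and the function names are hypothetical.

```c
#include <limits.h>
#include <stdint.h>

/* Hypothetical sentinel for this model; the kernel's refcount_t uses a different value. */
#define MODEL_SATURATED INT_MAX

/* Saturating increment: once the ceiling is reached the counter stays pinned. */
int saturating_inc(int count)
{
    if (count >= MODEL_SATURATED)
        return MODEL_SATURATED;
    return count + 1;
}

/* Saturating decrement: a pinned counter never drops, so the object is
 * leaked rather than freed while references may still exist. */
int saturating_dec(int count)
{
    if (count == MODEL_SATURATED)
        return MODEL_SATURATED;
    return count - 1;
}

/* Plain wrapping increment, the behavior a saturating counter is meant to prevent. */
uint32_t wrapping_inc(uint32_t count)
{
    return count + 1u;
}
```

In this model, wrapping_inc(UINT32_MAX) returns 0, whereas saturating_inc(INT_MAX) stays at INT_MAX: saturation trades a possible use-after-free for a memory leak.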

The mm_users refcount still uses an overflow-unsafe atomic_t, and since a read lock can be taken an indefinite number of times, the associated mmap_read_lock does not prevent us from incrementing the refcount again. There are several important roadblocks we need to avoid in order to repeatedly leak this refcount:

1. We cannot call this syscall from the task with the empty vma list itself - in other words, we can’t call read from /proc/self/smaps_rollup. Such a process cannot easily make repeated syscalls since it has no virtual memory mapped. We avoid this by reading smaps_rollup from another process.

2. We must re-open the smaps_rollup file every time because any future reads we perform on a smaps_rollup instance we already triggered the oops on will deadlock on the local seq_file mutex lock which is locked forever. We also need to destroy the resulting struct file (via close) after we generate the oops in order to prevent untenable memory usage.

3. If we access the mm through the same pid every time, we will run into the task struct max refcount before we overflow the mm_users refcount. Thus we need to create two separate tasks that share the same mm and balance the oopses we generate across both tasks so the task refcounts grow half as quickly as the mm_users refcount. We do this via the clone flag CLONE_VM.

4. We must avoid opening/reading the smaps_rollup file from a task that has a shared file descriptor table, as otherwise a refcount will be leaked on the struct file itself. This isn’t difficult: just don’t read the file from a multi-threaded process.

Our final refcount leaking overflow strategy is as follows:

1. Process A forks a process B

2. Process B issues PTRACE_TRACEME so that when it segfaults upon return from munmap it won’t go away (but rather will enter tracing stop)

3. Process B clones with CLONE_VM | CLONE_PTRACE another process C

4. Process B munmap’s its entire virtual memory address space - this also unmaps process C’s virtual memory address space.

5. Process A forks new children D and E which will access (B|C)’s smaps_rollup file respectively

6. (D|E) opens (B|C)’s smaps_rollup file and performs a read which will oops, causing (D|E) to die. mm_users will be refcount leaked/incremented once per oops

7. Process A goes back to step 5 ~2^32 times

The above strategy can be rearchitected to run in parallel (across processes, not threads, because of roadblock 4) to improve performance. On server setups that print kernel logging to a serial console, generating 2^32 kernel oopses takes over 2 years. However, on a vanilla Kali Linux box using a graphical interface, a demonstrative proof-of-concept takes only about 8 days to complete! At the completion of execution, the mm_users refcount will have overflowed and be set to zero, even though this mm is currently in use by multiple processes and can still be referenced via the proc filesystem.
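To put the durations above in perspective, the implied oops rates can be worked out with simple arithmetic. The rates computed here are derived from the stated durations, not separately measured figures, and the function names are hypothetical.

```c
/* Number of oopses needed to wrap a 32-bit counter: 2^32. */
#define OOPSES_NEEDED 4294967296.0
#define SECONDS_PER_DAY 86400.0

/* Average oops rate implied by finishing the overflow in `days` days. */
double oopses_per_second(double days)
{
    return OOPSES_NEEDED / (days * SECONDS_PER_DAY);
}

/* Days required to reach 2^32 oopses at a sustained `rate` per second. */
double days_to_overflow(double rate)
{
    return OOPSES_NEEDED / (rate * SECONDS_PER_DAY);
}
```

Under these assumptions, an 8-day run corresponds to roughly 6,200 oopses per second, while a run of two years (730 days) corresponds to about 68 per second, so the logging path accounts for roughly a 90x difference in throughput.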

Exploitation

Once the mm_users refcount has been set to zero, triggering undefined behavior and memory corruption should be fairly easy. By triggering an mmget and an mmput (which we can very easily do by opening the smaps_rollup file once more) we should be able to free the entire mm and cause a UAF condition:

    static inline void __mmput(struct mm_struct *mm)
    {
        VM_BUG_ON(atomic_read(&mm->mm_users));

        uprobe_clear_state(mm);
        exit_aio(mm);
        ksm_exit(mm);
        khugepaged_exit(mm);
        exit_mmap(mm);
        mm_put_huge_zero_page(mm);
        set_mm_exe_file(mm, NULL);
        if (!list_empty(&mm->mmlist)) {
            spin_lock(&mmlist_lock);
            list_del(&mm->mmlist);
            spin_unlock(&mmlist_lock);
        }
        if (mm->binfmt)
            module_put(mm->binfmt->module);
        lru_gen_del_mm(mm);
        mmdrop(mm);
    }

Unfortunately, since 64591e8605 (“mm: protect free_pgtables with mmap_lock write lock in exit_mmap”), exit_mmap unconditionally takes the mmap lock in write mode. Since this mm’s mmap_lock is permanently readlocked many times, any calls to __mmput will manifest as a permanent deadlock inside of exit_mmap.

However, before the call permanently deadlocks, it will call several other functions:

- uprobe_clear_state

- exit_aio

- ksm_exit

- khugepaged_exit

Additionally, we can call __mmput on this mm from several tasks simultaneously by having each of them trigger an mmget/mmput on the mm, generating irregular race conditions. Under normal execution, it should not be possible to trigger multiple __mmput’s on the same mm (much less concurrent ones) as __mmput should only be called on the last and only refcount decrement which sets the refcount to zero. However, after the refcount overflow, all mmget/mmput’s on the still-referenced mm will trigger an __mmput. This is because each mmput that decrements the refcount to zero (despite the corresponding mmget being why the refcount was above zero in the first place) believes that it is solely responsible for freeing the associated mm.
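The effect of the overflow on get/put pairs can be shown with a small single-threaded model. This is a sketch with hypothetical names (model_mm, model_mmget, model_mmput), not the kernel's implementation; it only counts how often the teardown path would be entered.

```c
#include <stdint.h>

/* Hypothetical stand-in for an mm_struct; only the fields the model needs. */
struct model_mm {
    uint32_t users;          /* stands in for mm_users */
    unsigned teardown_calls; /* how many times the modeled __mmput path ran */
};

/* Modeled mmget: take one reference. */
void model_mmget(struct model_mm *mm)
{
    mm->users++;
}

/* Modeled mmput: whoever decrements the count to zero runs the teardown. */
void model_mmput(struct model_mm *mm)
{
    if (--mm->users == 0)
        mm->teardown_calls++;
}

/* Performs `pairs` get/put pairs and reports how many teardowns they triggered. */
unsigned teardowns_after_pairs(uint32_t start_users, unsigned pairs)
{
    struct model_mm mm = { start_users, 0 };

    for (unsigned i = 0; i < pairs; i++) {
        model_mmget(&mm);
        model_mmput(&mm);
    }
    return mm.teardown_calls;
}
```

With a healthy count, teardowns_after_pairs(2, 3) is 0 because the existing references keep the count above zero. With an overflowed count of zero, teardowns_after_pairs(0, 3) is 3: every pair believes it dropped the last reference.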

This racy __mmput primitive extends to its callees as well. exit_aio is a good candidate for taking advantage of this:

    void exit_aio(struct mm_struct *mm)
    {
        struct kioctx_table *table = rcu_dereference_raw(mm->ioctx_table);
        struct ctx_rq_wait wait;
        int i, skipped;

        if (!table)
            return;

        atomic_set(&wait.count, table->nr);
        init_completion(&wait.comp);

        skipped = 0;
        for (i = 0; i < table->nr; ++i) {
            struct kioctx *ctx =
                rcu_dereference_protected(table->table[i], true);

            if (!ctx) {
                skipped++;
                continue;
            }
            ctx->mmap_size = 0;
            kill_ioctx(mm, ctx, &wait);
        }

        if (!atomic_sub_and_test(skipped, &wait.count)) {
            /* Wait until all IO for the context are done. */
            wait_for_completion(&wait.comp);
        }

        RCU_INIT_POINTER(mm->ioctx_table, NULL);
        kfree(table);
    }

While the callee function kill_ioctx is written in such a way as to prevent concurrent execution from causing memory corruption (part of the contract of aio allows for kill_ioctx to be called in a concurrent way), exit_aio itself makes no such guarantees. Two concurrent calls of exit_aio on the same mm struct can consequently induce a double free of the mm->ioctx_table object, which is fetched at the beginning of the function but only freed at the very end. This race window can be widened substantially by creating many aio contexts in order to slow down exit_aio’s internal context freeing loop. Successful exploitation will trigger a kernel BUG indicating that a double free has occurred.

Note that as this exit_aio path is hit from __mmput, triggering this race will produce at least two permanently deadlocked processes when those processes later try to take the mmap write lock. However, from an exploitation perspective, this is irrelevant as the memory corruption primitive has already occurred before the deadlock occurs. Exploiting the resultant primitive would probably involve racing a reclaiming allocation in between the two frees of the mm->ioctx_table object, then taking advantage of the resulting UAF condition of the reclaimed allocation. It is undoubtedly possible, although I didn’t take this all the way to a completed PoC.
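The fetch-early, free-late pattern in exit_aio can be modeled deterministically in user space by splitting the function into its two ends and interleaving two callers by hand. This is a sketch with hypothetical names; it counts frees instead of actually freeing memory, so it is safe to run.

```c
#include <stddef.h>

/* Hypothetical stand-ins; `frees` counts modeled kfree() calls on the table. */
struct model_table { int frees; };
struct model_aio_mm { struct model_table *ioctx_table; };

/* Start of the modeled exit_aio: snapshot the table pointer. */
struct model_table *model_exit_aio_begin(struct model_aio_mm *mm)
{
    return mm->ioctx_table;
}

/* End of the modeled exit_aio: clear the pointer, then "free" the snapshot. */
void model_exit_aio_end(struct model_aio_mm *mm, struct model_table *table)
{
    if (!table)
        return; /* mirrors the early return when no table exists */
    mm->ioctx_table = NULL;
    table->frees++;
}

/* Two callers overlap: both snapshot the pointer before either clears it. */
int frees_when_interleaved(void)
{
    struct model_table table = { 0 };
    struct model_aio_mm mm = { &table };

    struct model_table *a = model_exit_aio_begin(&mm);
    struct model_table *b = model_exit_aio_begin(&mm);
    model_exit_aio_end(&mm, a);
    model_exit_aio_end(&mm, b);
    return table.frees;
}

/* Two callers run back to back: the second sees NULL and does nothing. */
int frees_when_serialized(void)
{
    struct model_table table = { 0 };
    struct model_aio_mm mm = { &table };

    model_exit_aio_end(&mm, model_exit_aio_begin(&mm));
    model_exit_aio_end(&mm, model_exit_aio_begin(&mm));
    return table.frees;
}
```

frees_when_serialized() reports one free, while frees_when_interleaved() reports two frees of the same table. The longer the gap between the snapshot and the free (for instance, a long context-killing loop), the easier it is for a second caller to land inside it.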

Conclusion

While the null-dereference bug itself was fixed in October 2022, the more important fix was the introduction of an oops limit which causes the kernel to panic if too many oopses occur. While this patch is already upstream, it is important that distributed kernels also inherit this oops limit and backport it to LTS releases if we want to avoid treating such null-dereference bugs as full-fledged security issues in the future. Even in that best-case scenario, it is nevertheless highly beneficial for security researchers to carefully evaluate the side-effects of bugs discovered in the future that are similarly “harmless” and ensure that the abrupt halt of kernel code execution caused by a kernel oops does not lead to other security-relevant primitives.
